Google has detailed its security approach for Chrome's agentic features, which can perform actions on behalf of users. The company previewed these capabilities in September and plans a wider rollout in the coming months.

The company uses observer models and user consent mechanisms to keep these agentic actions in check. The strategy targets the security risks such features can introduce, including data loss and financial loss.

Google employs several models to monitor agentic actions. It has developed a User Alignment Critic, powered by Gemini, which evaluates the actions a planner model proposes for a specific task. If the critic deems a proposed action misaligned with the user’s objective, it instructs the planner to revise its strategy. The critic sees only the metadata of the proposed action, not the actual web content.
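The planner-and-critic loop described above can be sketched roughly as follows. This is an illustration only, not Chrome's implementation: `ActionMetadata`, `plan_with_critic`, the callback signatures, and the revision limit are all hypothetical names and choices. The one property taken from Google's description is that the critic receives only metadata about the action, never page content.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ActionMetadata:
    """Hypothetical summary of a proposed action; holds no page content."""
    kind: str            # e.g. "click", "type", "navigate"
    target_origin: str   # origin the action would touch
    description: str     # short planner-written summary


# Hypothetical signatures: the planner takes the goal plus optional critic
# feedback; the critic returns None to approve or a reason string to reject.
Planner = Callable[[str, Optional[str]], ActionMetadata]
Critic = Callable[[str, ActionMetadata], Optional[str]]


def plan_with_critic(
    goal: str,
    planner: Planner,
    critic: Critic,
    max_revisions: int = 3,  # assumed limit, not from the source
) -> Optional[ActionMetadata]:
    """Return the first action the critic approves, or None if none passes."""
    feedback: Optional[str] = None
    for _ in range(max_revisions + 1):
        action = planner(goal, feedback)
        feedback = critic(goal, action)
        if feedback is None:
            return action
    return None
```

Returning `None` when the revision budget runs out is a design choice made for this sketch; the source does not say what happens if the planner never produces an aligned action.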

To prevent agents from accessing unauthorized or untrustworthy websites, Google uses Agent Origin Sets. These sets restrict models to designated read-only and read-writable origins. Read-only origins permit Gemini to consume content, such as product listings on a shopping site, while excluding irrelevant elements like banner ads. Similarly, the agent can only interact with specific iframes on a page.

“This delineation enforces that only data from a limited set of origins is available to the agent, and this data can only be passed on to the writable origins,” the company said in a blog post. “This bounds the threat vector of cross-origin data leaks. This also gives the browser the ability to enforce some of that separation, such as by not even sending to the model data that is outside the readable set.”
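A minimal sketch of how such a readable and writable split might be enforced is shown below. It is not Chrome's code: `AgentOriginSet`, `origin_of`, and `filter_frames_for_model` are hypothetical names, and the origin normalization is a simplification of the browser's real same-origin rules. The last function mirrors the point in the quote about not sending the model data from outside the readable set.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str) -> str:
    """Reduce a URL to scheme://host[:port], dropping default ports."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    port = parts.port
    if port is None or port == DEFAULT_PORTS.get(scheme):
        return f"{scheme}://{host}"
    return f"{scheme}://{host}:{port}"


@dataclass
class AgentOriginSet:
    """Hypothetical per-task allowlist of origins."""
    read_only: Set[str] = field(default_factory=set)
    read_write: Set[str] = field(default_factory=set)

    def can_read(self, url: str) -> bool:
        origin = origin_of(url)
        return origin in self.read_only or origin in self.read_write

    def can_write(self, url: str) -> bool:
        return origin_of(url) in self.read_write


def filter_frames_for_model(
    frames: List[Tuple[str, str]], origins: AgentOriginSet
) -> List[Tuple[str, str]]:
    """Keep only (url, content) pairs whose origin the agent may read."""
    return [(url, content) for url, content in frames if origins.can_read(url)]
```

For example, with `https://shop.example` as a read-writable origin and `https://reviews.example` as read-only, an ad iframe served from a third origin would be dropped before anything reaches the model, and the agent could type or click only on the shop's pages.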

Google also monitors page navigation using another observer model, which scrutinizes URLs. This mechanism aims to prevent navigation to harmful model-generated URLs.

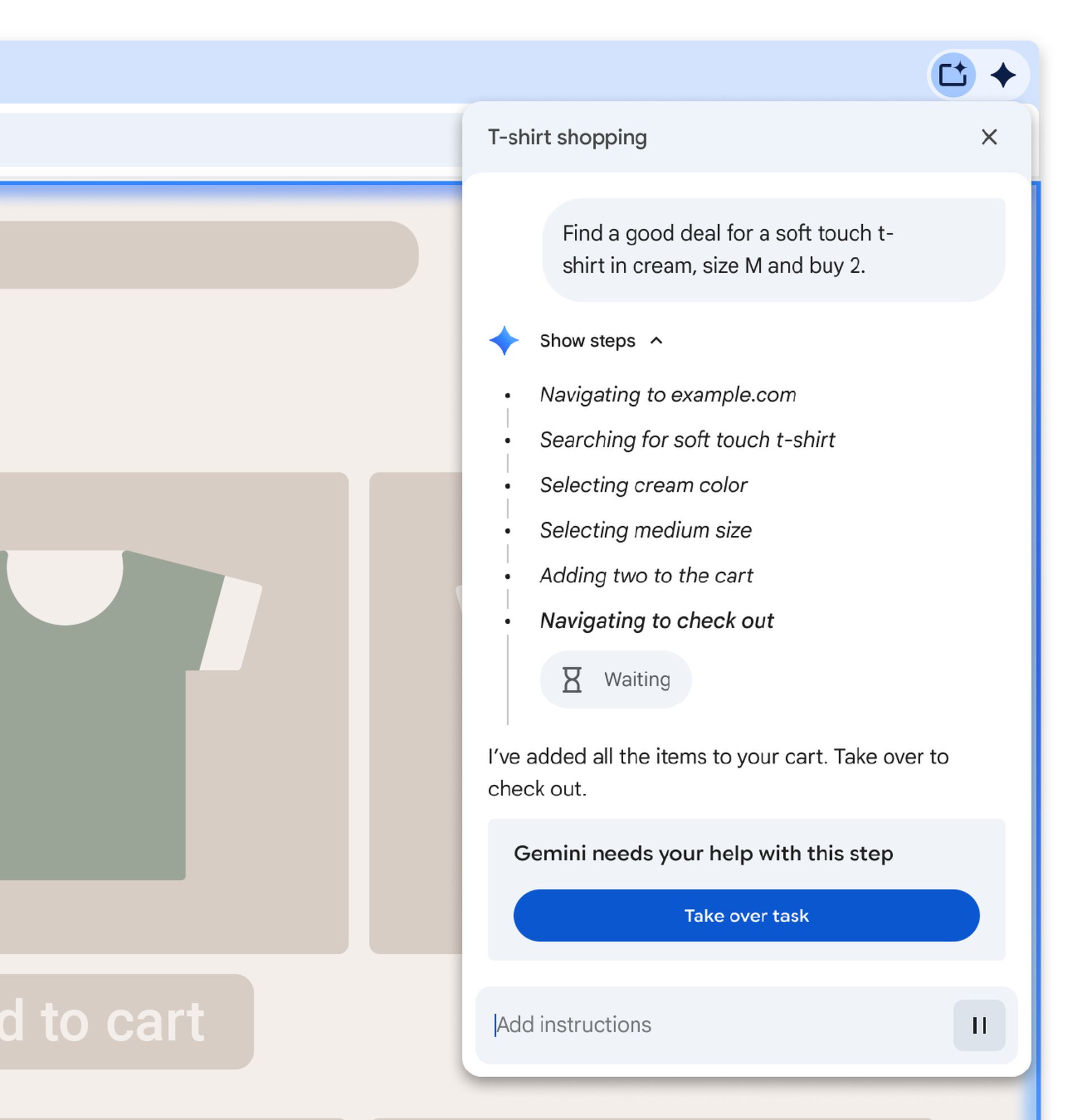

For sensitive tasks, Google requires user permission. When an agent attempts to access sites containing banking or medical information, it first prompts the user for consent. For sites requiring sign-in, Chrome asks the user for permission to use the password manager, so the agent’s model does not have access to password data. The company will also seek user approval before actions such as making a purchase or sending a message.
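The consent step can be pictured as a gate in front of the agent's action executor. The sketch below is hypothetical throughout: `ActionKind`, `NEEDS_CONSENT`, and `run_action` are illustrative names, and the list of gated actions simply restates the examples in the paragraph above rather than any published Chrome policy.

```python
from enum import Enum
from typing import Callable


class ActionKind(Enum):
    READ_PAGE = "read_page"
    VISIT_SENSITIVE_SITE = "visit_sensitive_site"   # e.g. banking, medical
    USE_PASSWORD_MANAGER = "use_password_manager"
    PURCHASE = "purchase"
    SEND_MESSAGE = "send_message"


# Assumed set of actions that pause for the user, based on the examples above.
NEEDS_CONSENT = frozenset({
    ActionKind.VISIT_SENSITIVE_SITE,
    ActionKind.USE_PASSWORD_MANAGER,
    ActionKind.PURCHASE,
    ActionKind.SEND_MESSAGE,
})


def run_action(
    kind: ActionKind,
    execute: Callable[[], None],
    ask_user: Callable[[ActionKind], bool],
) -> bool:
    """Run `execute` only if the action is ungated or the user approves it."""
    if kind in NEEDS_CONSENT and not ask_user(kind):
        return False  # user declined; nothing is executed
    execute()
    return True
```

In this sketch the prompt is delivered through the `ask_user` callback, so the decision stays with the browser UI and the user rather than with the model.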

Additionally, Google has a prompt-injection classifier to prevent unwanted actions and continuously tests its agentic capabilities against attacks developed by researchers. Other AI browser developers have also focused on security; Perplexity released a new open-source content detection model earlier this month to counter prompt-injection attacks against agents.