The popularity of Artificial Intelligence (AI) has surged in recent years. The term covers many domains, but much of the current interest centres on large language models (LLMs), which power chatbots, content generation tools, and intelligent software development assistants. LLMs are highly versatile, yet well-understood computing methods lie behind these almost magical capabilities.

This article explains the mechanisms behind LLMs: how they process text, why prompt design matters, and how techniques such as retrieval-augmented generation (RAG) work. It is aimed at readers who wish to use LLMs more effectively in their work.

How LLMs process text

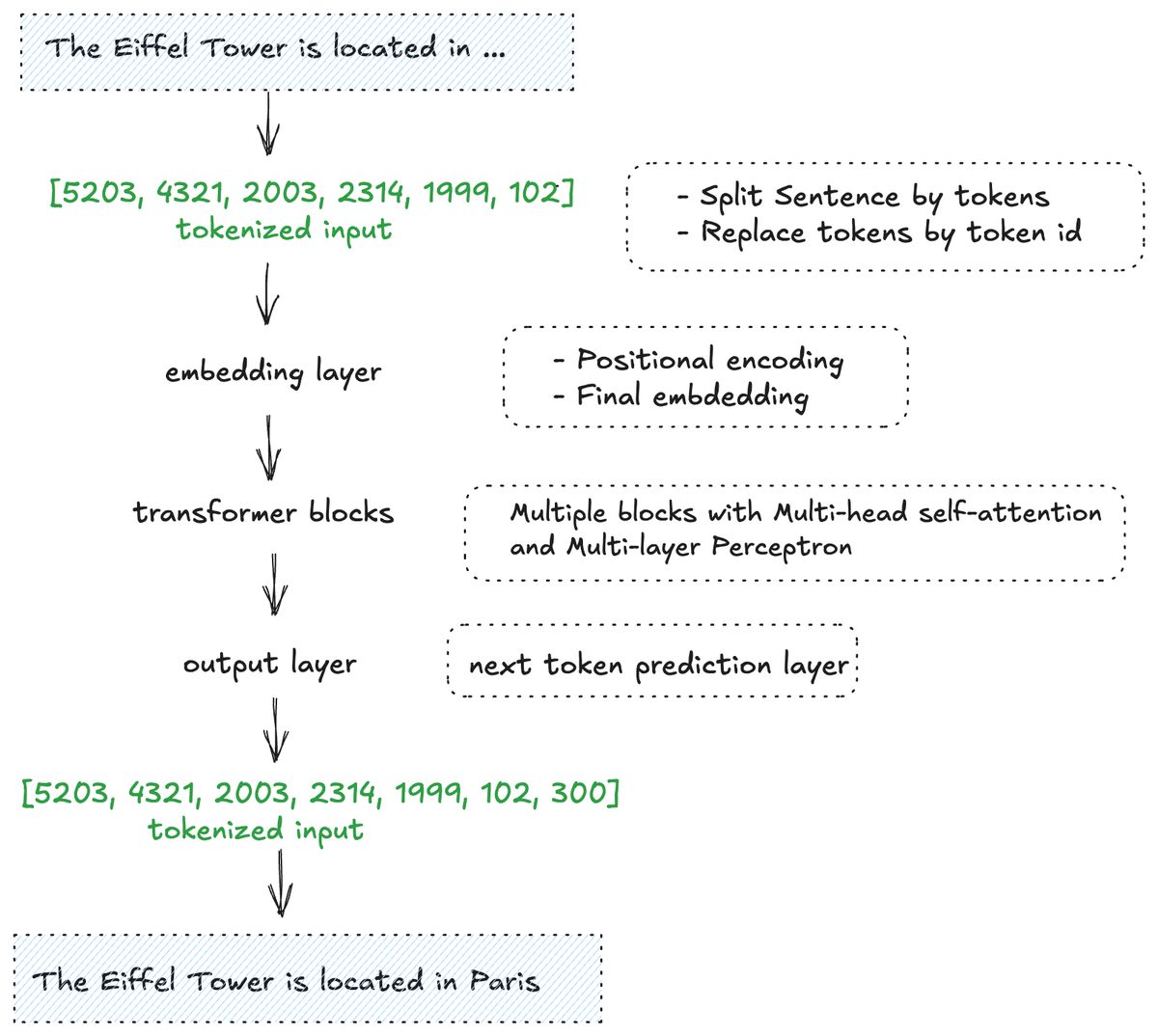

To understand how large language models (LLMs) generate responses, it is important to first look at the journey that text undergoes within the model. This journey can be broken down into several stages. We will start with tokenisation, the process of splitting language into smaller units. Then, we will move on to vector representations, where tokens are transformed into numerical embeddings that capture semantic meaning. Finally, we will touch on how these embeddings are used within the model’s neural network layers, enabling the LLM to process context, learn relationships, and ultimately generate meaningful output.

Tokenisation

The first step in any modern LLM workflow is tokenisation – breaking text, including words, into smaller units. This process aims to represent a human language in a mathematical form that computers can “understand”.

Classical NLP approaches often focused on “cleaning” text by removing punctuation, converting words to lowercase, removing stop words, and reducing words to a base form through stemming or lemmatisation. These steps aimed to reduce the vocabulary size and make the text easier for early models to process. For example, the sentence “Running in the park, people stopped” could be processed as:

- Lowercased: [“running”, “in”, “the”, “park”, “people”, “stopped”]

- Without punctuation: same as above

- Without stop-words: [“running”, “park”, “people”, “stopped”]

- Stemmed: [“run”, “park”, “peopl”, “stop”]

- Lemmatised: [“run”, “park”, “person”, “stop”]

While this approach simplified processing, it came with significant drawbacks: removing punctuation and normalising words often stripped away important context, making it harder for models to understand language nuances.
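The classical cleaning steps can be sketched in a few lines of Python. This is a minimal illustration only: the stop-word list is a tiny made-up set, and stemming and lemmatisation are left out because they normally rely on a dedicated library.

```python
import string

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"in", "the"}


def classical_preprocess(sentence: str) -> list[str]:
    """Lowercase, strip punctuation, split on whitespace, drop stop words."""
    lowered = sentence.lower()
    no_punctuation = lowered.translate(str.maketrans("", "", string.punctuation))
    tokens = no_punctuation.split()
    return [token for token in tokens if token not in STOP_WORDS]


print(classical_preprocess("Running in the park, people stopped"))
```

Note that the comma, which separated the two clauses, is gone from the result. This is the kind of context loss described above.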

Modern LLMs solve these issues by using subword tokenisation. Instead of treating each word as an indivisible unit, text is split into smaller units – subword tokens – while keeping as much context and meaning as possible. Giving every distinct word form its own vocabulary entry is impractical: the vocabulary becomes very large, and rare or unseen words cannot be represented at all. Subword tokenisation addresses this by allowing models to handle rare words, different inflections, and morphologically rich languages efficiently. There are multiple techniques to achieve this, and each model applies its own variant. One example, in the WordPiece style where “##” marks the continuation of a word, is shown below:

> “Running in the park, people stopped.”

>> [“Run”, “##ning”, “in”, “the”, “park”, “,”, “people”, “stop”, “##ped”, “.”]

This approach preserves grammatical and semantic information while keeping vocabulary size manageable, which is necessary for modern LLM performance.
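To make the idea concrete, here is a minimal sketch of greedy longest-match subword splitting in the WordPiece style. The vocabulary `TOY_VOCAB` is invented for this example (real vocabularies are learned from data and contain tens of thousands of entries), and the function works on single lowercase words only.

```python
def wordpiece_split(word: str, vocab: set[str]) -> list[str]:
    """Split one word into the longest matching vocabulary pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a "##" prefix
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no piece fits: fall back to an unknown token
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, chosen to reproduce the split shown above.
TOY_VOCAB = {"run", "##ning", "in", "the", "park", "people", "stop", "##ped"}

for word in ["running", "park", "stopped"]:
    print(word, "->", wordpiece_split(word, TOY_VOCAB))
```

With only eight vocabulary entries, the sketch still covers “running” and “stopped” by reusing the pieces “run” and “stop”, which is how subword vocabularies stay compact.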

Bag of Words and embeddings

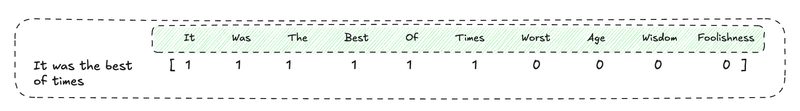

As the next step, the text must be converted from characters into a mathematical form through vectorisation. A simple way to turn text into vectors is the Bag of Words, a naive approach that builds a vector representation of a sentence from a limited-size vocabulary.

Let us take an example [kaggle link]: we have a small corpus whose vocabulary consists only of the words in the lines below (the opening of Charles Dickens’s A Tale of Two Cities):

“It was the best of times,

It was the worst of times,

It was the age of wisdom,

It was the age of foolishness”

Suppose that, based on this corpus, we want to create a vector representing just the first sentence. The most straightforward approach is to collect all unique words in the corpus and use them as features (10 words → 10-dimensional vector: It, Was, The, Best, Of, Times, Worst, Age, Wisdom, Foolishness). Then, for a given sentence, we count the occurrences of each word and assign these counts to the corresponding positions in the vector. This is the classic Bag of Words approach. While it is a simple and illustrative method, it has limitations: it does not account for word order, meaning, or semantic relationships between words.
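The counting procedure can be sketched as follows. The helper names (`tokenize`, `build_vocabulary`, `bag_of_words`) are chosen for this illustration and do not come from any particular library.

```python
import re
from collections import Counter

CORPUS = [
    "It was the best of times",
    "It was the worst of times",
    "It was the age of wisdom",
    "It was the age of foolishness",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic words only."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(corpus: list[str]) -> list[str]:
    """Collect unique words in order of first appearance."""
    vocabulary = []
    for line in corpus:
        for word in tokenize(line):
            if word not in vocabulary:
                vocabulary.append(word)
    return vocabulary


def bag_of_words(sentence: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word occurs in the sentence."""
    counts = Counter(tokenize(sentence))
    return [counts[word] for word in vocabulary]


VOCABULARY = build_vocabulary(CORPUS)
print(VOCABULARY)
print(bag_of_words(CORPUS[0], VOCABULARY))  # [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
```

Shuffling the words of the first sentence would produce exactly the same vector, which shows that word order is lost.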

This is where embeddings come into play. Unlike Bag of Words, embeddings map words or tokens into continuous vectors of real numbers, capturing richer information about their meaning. Once words are represented in this vector space, measures such as cosine similarity can be used to quantify how closely related they are. In other words, embeddings allow models to measure and mathematically use the relationships between words.
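As a small illustration, cosine similarity can be computed directly from two vectors. The three-dimensional vectors below are made up for the example; real embeddings are learned during training and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, invented purely for illustration.
TOY_EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitty": [0.85, 0.75, 0.2],
    "never": [0.1, 0.2, 0.9],
}

print(cosine_similarity(TOY_EMBEDDINGS["cat"], TOY_EMBEDDINGS["kitty"]))
print(cosine_similarity(TOY_EMBEDDINGS["cat"], TOY_EMBEDDINGS["never"]))
```

In this toy setup the first value is close to 1 and the second is much lower, reflecting that the first pair points in nearly the same direction.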

Word embedding techniques have progressed over the years. Traditional approaches, such as TF-IDF and co-occurrence matrices, depended on word frequency and could not represent underlying semantic relationships. The next leap came with static word embeddings like Word2Vec, GloVe, and FastText, which mapped each word to a single dense vector. These techniques helped models measure similarities between words more accurately; however, the representations were insensitive to context. Nowadays, contextualised embeddings, produced by models such as DeepSeek, LLaMA, and GPT-4, dominate. They mark a major change because they generate dynamic word representations that take the surrounding context into account. For further information, refer to the article elaborating on the topic of LLM embeddings.

During training, the vectors of semantically related tokens, such as “cat”, “kitty”, and “animal”, move closer together. The words “no”, “not”, and “never” could form another cluster. After training, the embedding matrix is frozen: when using ChatGPT or Claude, tokens are mapped to these pretrained vectors. Each model has its own embedding matrix. From these vectors, the input sentence forms a matrix representation that the neural network processes layer by layer.

We have discussed how text is converted into a matrix representation that a machine (CPU/GPU) can process. This matrix then becomes an input for the neural network, where each layer performs matrix operations to find more complex patterns and connections in the data. There are many types of operations within neural networks, yet we will focus on the most interesting one – attention.

Attention

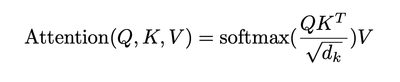

Attention is a layer inside a neural network. It takes embeddings and converts them into sets of vectors – Q (Queries), K (Keys), and V (Values). Each Query is compared with all Keys to decide which tokens to focus on, and after that, the model collects the corresponding Values. As a result, by stacking multiple layers, the network can build more nuanced contextual representations of the input. This mechanism, introduced in Vaswani et al.’s paper Attention Is All You Need (2017), has since become the foundation of modern transformers.

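Attention weights are computed by comparing Queries with Keys, scaling the scores by the square root of the key dimension $d_k$, and applying softmax. The weights are then used to combine the Values (Vaswani et al., 2017):

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$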

As a result of attention, each token receives a contextualised vector, refined with information from all the other tokens it attends to.
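The following is a minimal pure-Python sketch of scaled dot-product attention as described in Vaswani et al. (2017). It assumes Q, K, and V are already given as lists of vectors; in a real transformer they are produced from the embeddings by learned projection matrices, and the computation runs as batched matrix operations on a GPU.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (outputs, weights) with one row per query."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for query in Q:
        # Compare this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in K
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        output = [
            sum(w * value[j] for w, value in zip(weights, V))
            for j in range(len(V[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Toy inputs: two tokens, two dimensions (values invented for illustration).
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]

outputs, weights = scaled_dot_product_attention(Q, K, V)
print(weights)
print(outputs)
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, which is the quantity a heatmap of attention visualises.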

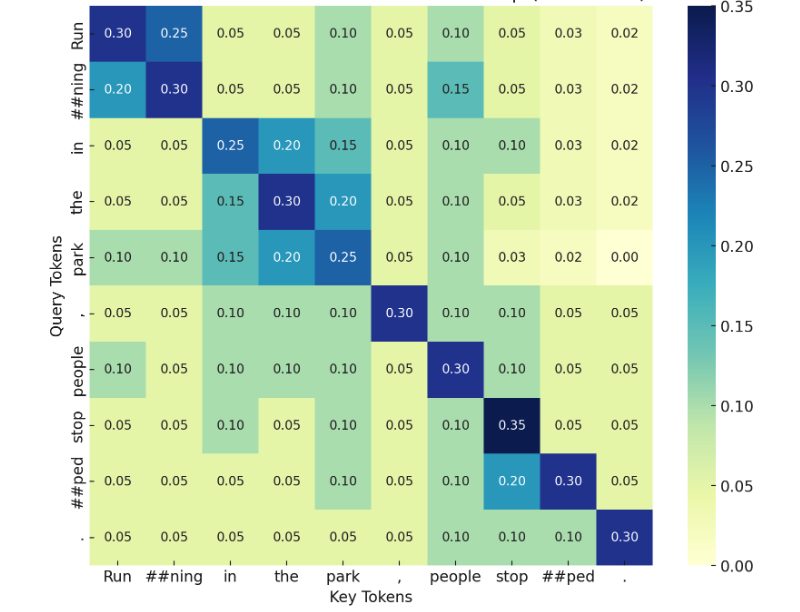

Transformers use attention to interpret meaning. Returning to the sentence “Running in the park, people stopped”, the word “stopped” looks at:

“people” → who stopped,

“in the park” → stopped where.

Attention lets each token draw on the entire sentence, regardless of how far apart the words are. Each attention layer applies this repeatedly and refines the context until the final output layer predicts the next token.

One way to visualise attention is a heatmap, where dark colours indicate stronger focus. For the token “stop”, the most relevant links are to “people”, “in”, and “park”, indicating that the model understands who stopped and where. Modern LLMs contain many stacked attention layers, so this example is a simplified one.

After attention, the vectors are processed by feedforward neural networks (multi-layer perceptrons). These layers transform and refine the contextual embeddings, enrich them with nonlinear patterns, and strengthen semantic relationships between tokens.

Prediction iterations

After embedding and attention, the model passes the sequence through multiple transformer layers and produces a probability distribution over the vocabulary. A token is then chosen from this distribution (the most probable one under greedy decoding, or a sampled one when randomness is enabled), appended to the sequence, and the process repeats with the updated input. In this way, “Running in the park, people stopped” may be extended with “suddenly” or “because”, with each new prediction conditioned on the full context so far. This autoregressive loop continues until the model reaches an end-of-sequence marker or another stopping condition.
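The loop itself is simple and can be sketched with a stand-in for the model. Here `next_token_distribution` is a hypothetical function backed by a hand-written lookup table; in a real LLM this step is the full forward pass through the transformer layers. The sketch uses greedy selection and an assumed `<eos>` end-of-sequence marker.

```python
def next_token_distribution(tokens: list[str]) -> dict[str, float]:
    """Hypothetical stand-in for the model: probabilities for the next token."""
    table = {
        "stopped": {"suddenly": 0.6, "because": 0.3, "<eos>": 0.1},
        "suddenly": {"<eos>": 0.9, "because": 0.1},
        "because": {"<eos>": 1.0},
    }
    # This toy version conditions only on the last token; a real model uses all of them.
    return table.get(tokens[-1], {"<eos>": 1.0})


def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: pick the most probable token, append, repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probabilities = next_token_distribution(tokens)
        best = max(probabilities, key=probabilities.get)
        if best == "<eos>":  # stopping condition: end-of-sequence marker
            break
        tokens.append(best)
    return tokens


print(generate(["running", "in", "the", "park", ",", "people", "stopped"]))
```

The `max_new_tokens` limit plays the role of the "other stopping condition": generation ends even if the end-of-sequence marker is never produced.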

Prompt engineering: asking the right questions

Prompts and messages are the interface between users and LLMs. APIs may expose different formats, but for the model itself, everything is ultimately reduced to plain text.

Legacy: Text Completion (GPT-3 style) accepts a raw string:

    {
      "prompt": "user: Hi. Where is the Eiffel Tower located?",
      "max_tokens": 100
    }

Modern: Chat Completion (GPT-4, Claude) wraps input into structured JSON:

    {
      "messages": [
        {
          "role": "system",
          "content": "I am a helpful assistant. How may I help?"
        },
        {
          "role": "user",
          "content": "Hi. Where is the Eiffel Tower located?"
        }
      ]
    }

But underneath, these structures are simply concatenated into one text string with role tags (the exact tags vary between models):

    <|system|>
    I am a helpful assistant. How may I help?
    <|user|>
    Hi. Where is the Eiffel Tower located?
    <|assistant|>

The transformer does not recognise JSON and only processes tokenised text. Its task is the same: to continue the sequence from the assistant’s perspective. It is important to understand this because the model treats all inputs as plain text, which directly affects prompting techniques. This means that consistent formatting, clear role markers, and well-chosen delimiters become critical for guiding the model’s behaviour, as will be discussed later in the article.
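A minimal sketch of this flattening step is shown below. The `<|role|>` tag format follows the illustration in this article and is an assumption: each model family defines its own special tokens and template, and the function name `render_chat` is invented for the example.

```python
def render_chat(messages: list[dict[str, str]]) -> str:
    """Flatten chat messages into one string and open the assistant's turn."""
    parts = [f"<|{message['role']}|>\n{message['content']}" for message in messages]
    # The trailing assistant tag tells the model whose turn it should continue.
    parts.append("<|assistant|>")
    return "\n".join(parts)


messages = [
    {"role": "system", "content": "I am a helpful assistant. How may I help?"},
    {"role": "user", "content": "Hi. Where is the Eiffel Tower located?"},
]

print(render_chat(messages))
```

Whatever structure the API accepts, the model only ever sees the tokenised form of a string like this one, which is why consistent markers and delimiters matter.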

Principles of effective prompting

This part will help you write effective prompts and elicit the most precise output from LLMs. There are several tips to achieve this:

Be clear and accurate when writing the prompt

Imagine describing a new topic to someone unfamiliar with the context, style, or preferred way of working. When writing a prompt for an LLM, you need to be just as precise. For example, you can include:

- The results you need.

- The context.

- An example of a successful task.

Another tip is to state precisely what you want the LLM to do. If you need code, ask the model for it. It also helps to provide a bullet list of the steps you want the LLM to perform.

Your prompt for the LLM can look like this: Anonymise customer feedback for the quarterly report. Remove all personally identifiable information from: {{FEEDBACK_DATA}}

Instructions:

● Replace names with CUSTOMER_[ID]

● Replace emails with EMAIL_[ID]@example.com

● Replace phone numbers with PHONE_[ID]

● Keep product names (for example, AcmeCloud) unchanged

● If no personal data is present, copy the text as is
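Placeholders such as {{FEEDBACK_DATA}} are typically filled in by code before the prompt is sent. The sketch below shows one possible way to do this with a regular expression; the helper name `fill_template` is an assumption made for this example, and many templating libraries offer the same functionality.

```python
import re

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace every {{NAME}} placeholder with its value; fail loudly if one is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"No value supplied for placeholder {name}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


TEMPLATE = (
    "Anonymise customer feedback for the quarterly report. "
    "Remove all personally identifiable information from: {{FEEDBACK_DATA}}"
)

print(fill_template(TEMPLATE, {"FEEDBACK_DATA": "Great product, thanks!"}))
```

Raising an error on a missing value is a deliberate choice here: an unfilled placeholder sent to the model is easy to overlook and usually degrades the answer.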

Use examples (multi-shot prompting)

The LLM’s responses will be more precise if you provide it with one or more examples of a good answer. Ensure your examples reflect real use cases, cover potential edge cases, and are wrapped in <example> tags.

Your prompt for the LLM can look like this: Analyse this customer review and classify the issues into the following categories: UI/UX, Performance, Feature Request, Integration, Pricing, or Other.

Also evaluate:

- Sentiment: Positive / Neutral / Negative

- Priority: High / Medium / Low

Here is an example:

<example>

Input: The new dashboard is very messy. I can’t load it and find the export button. You need to fix it!

Category: UI/UX, Performance

Sentiment: Negative

Priority: High

</example>

Now, analyse this feedback: {{FEEDBACK}}
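When the examples live in a dataset rather than in a hand-written prompt, the multi-shot prompt can be assembled programmatically. The sketch below is one possible approach; the function names and dictionary keys are assumptions chosen for this illustration.

```python
def format_example(example: dict[str, str]) -> str:
    """Wrap one labelled example in <example> tags."""
    return (
        "<example>\n"
        f"Input: {example['input']}\n"
        f"Category: {example['category']}\n"
        f"Sentiment: {example['sentiment']}\n"
        f"Priority: {example['priority']}\n"
        "</example>"
    )


def build_few_shot_prompt(task: str, examples: list[dict[str, str]], feedback: str) -> str:
    """Combine the task description, the tagged examples, and the new input."""
    example_block = "\n\n".join(format_example(example) for example in examples)
    return (
        f"{task}\n\n"
        f"Here are some examples:\n\n{example_block}\n\n"
        f"Now, analyse this feedback: {feedback}"
    )


EXAMPLES = [
    {
        "input": "The new dashboard is very messy. I can't load it and find the export button.",
        "category": "UI/UX, Performance",
        "sentiment": "Negative",
        "priority": "High",
    }
]

print(build_few_shot_prompt("Classify the issues in this customer review.", EXAMPLES, "Love the new pricing!"))
```

Keeping every example in the same layout matters more than the exact field names, since the model tends to mirror the format it is shown.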

Apply Chain-of-Thought reasoning

When working with complex tasks, LLMs can significantly benefit from thinking step by step – a technique known as chain-of-thought prompting. It is particularly useful for math, logic, and analysis prompts. However, not every task requires in-depth reasoning (basic calculations, for example), so use it judiciously.

Your prompt for the LLM can look like this: Create a personalised quarterly performance report for department heads.

Inputs:

● Program details: {{PROGRAM_DETAILS}}

● Head details: {{HEAD_DETAILS}}

Instructions: Think step by step before writing.

- Identify what information would be most valuable for this manager, considering their history and department priorities.

- Highlight program aspects that matter most now (KPIs, efficiency growth, bottlenecks).

- Based on this reasoning, generate the final personalised report.

Provide XML-style structured input

XML tags can be very beneficial if your prompt has multiple elements, such as context, instructions, and examples. Tags help LLMs parse your prompts more precisely and, hence, produce responses of better quality.

Here are some tips for the most efficient uses of XML tags:

- Use the same tag names consistently across your prompts and refer to them when discussing the content (for instance, use <example> tags for the examples).

- Nest tags <outer><inner></inner></outer> for hierarchical content.

Your prompt for the LLM can look like this: You are a financial analyst at AcmeCorp. Prepare the Q2 financial report for our investors. AcmeCorp is a B2B SaaS company. Our investors value transparency and practical recommendations.

Use this data for the report:

<data>{{SPREADSHEET_DATA}}</data>

<instructions>

1. Include sections: Revenue Growth, Profitability, Cash Flow.

2. Highlight strengths and areas for improvement.

</instructions>

Make the tone of the report concise and professional. Follow this structure:

<formatting_example>{{Q1_REPORT}}</formatting_example>

Assign roles

You can improve the LLM’s performance by assigning it a specific role. With this technique, the model’s responses will align with the required communication style or level of detail (as if they were written by a copywriter, for example) and stay more within the bounds of your task’s specific requirements.

You can also experiment with roles and adjust them according to the result you need. For instance, a model prompted as a data scientist will likely produce different results from one prompted as a product manager, even given the same input. Remember that the model works with a vector space and probabilities, so the role you assign in the prompt shifts the probabilities in a slightly different direction.

Your prompt for the LLM can look like this: Analyse this software license agreement for potential risks:

<contract>

{{CONTRACT}}

</contract>

Focus on clauses about indemnification, liability, and ownership of intellectual property rights.

Role: You are a corporate lawyer specialising in IT law, software licensing, and legal risk management.

Task: Identify possible legal risks in the agreement and explain them to the client clearly, concisely, and professionally (for example, a startup purchasing or licensing software from a third-party developer).

Prioritise the input

When working with large documents (over 20,000 tokens), place them at the beginning of the prompt and structure them with tags and metadata. Finally, treat iteration as an opportunity to improve the LLM’s outputs.

Retrieval-Augmented Generation (RAG) and Fine-Tuning

Prompting is key, and structuring a good prompt can greatly improve query results. But are there other ways an LLM can be adjusted for a specific task? Users may also encounter token limits in their queries. This is where RAG and fine-tuning techniques come into play. Both approaches aim to enhance model performance, and each will be discussed in more detail.

RAG

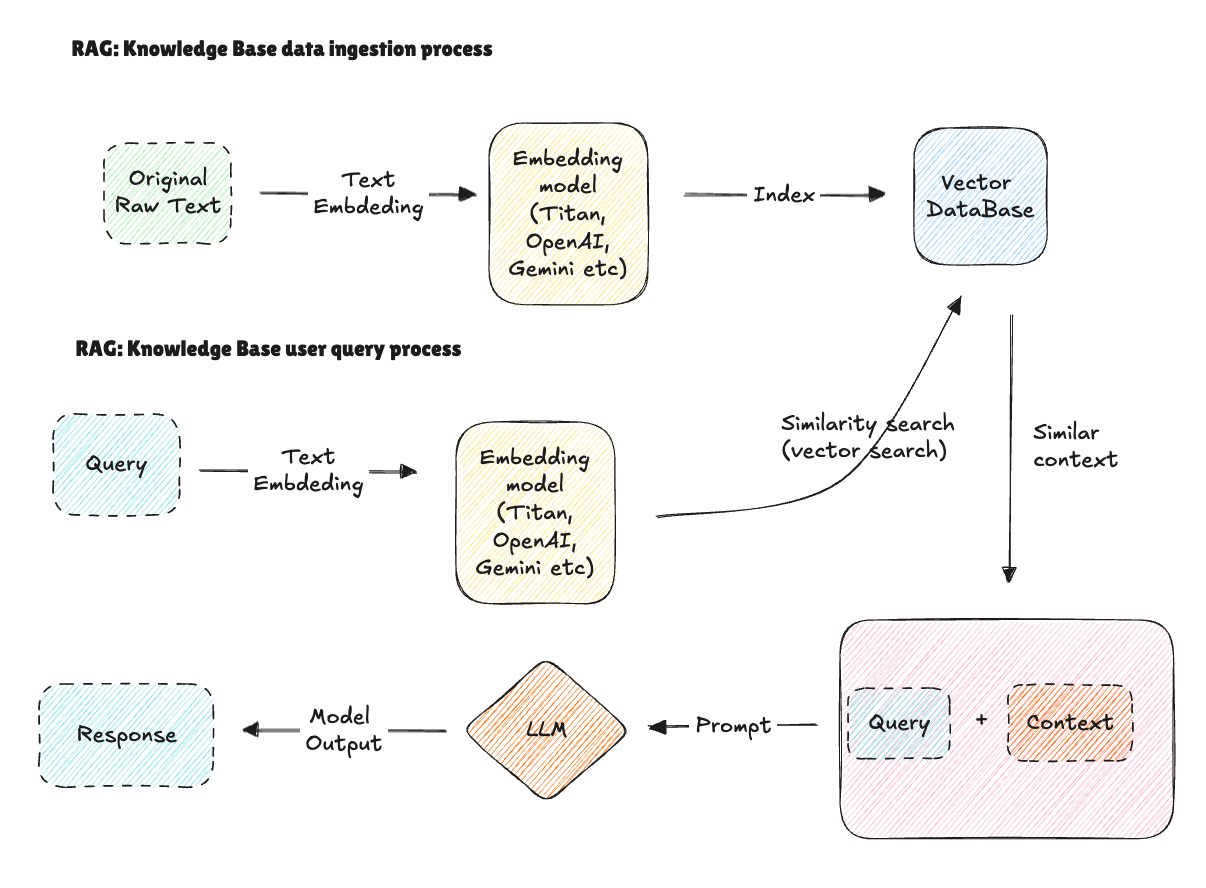

RAG supplements the LLM with a vector database of documents. For each query, the most relevant passages are retrieved and added to the context.

RAG is beneficial for quick updates and fast-changing information. However, it is essential to keep in mind three factors that affect its effectiveness: the quality of documents in the knowledge base (poor data in, poor answers out), the chunking strategy used for similarity search, and the indexing approach.
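The retrieve-then-augment flow can be sketched without any external services. In this simplified illustration, word-count vectors stand in for learned embeddings and a plain list stands in for the vector database; the chunk texts, the `<document>` tag, and the function names are all invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts (a real system would use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(query_vector, embed(chunk)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    """Add the retrieved passages to the context, followed by the user's question."""
    context = "\n".join(f"<document>{chunk}</document>" for chunk in retrieve(query, chunks, top_k))
    return f"Answer using only the context below.\n{context}\nQuestion: {query}"


# Hypothetical knowledge-base chunks.
CHUNKS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our office is open Monday to Friday.",
    "Shipping takes three to five business days.",
]

print(build_rag_prompt("What is the refund policy?", CHUNKS, top_k=1))
```

All three factors listed above are visible here: the answer can only be as good as `CHUNKS`, the way documents are split determines what `retrieve` can match, and the ranking step is where an index would replace the linear scan.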

Fine-tuning

Fine-tuning retrains the base model on domain-specific data. It works best for stable knowledge domains, but it requires expensive GPU resources and can take weeks of preparation. To summarise, fine-tuning is stable but costly, while RAG is flexible and efficient.

Where LLMs struggle

Despite the magical capabilities of LLMs, there is still room for improvement. For example, the models cannot yet perform multi-step operations perfectly, such as “Predict demand for tickets X, and check if it differs from the historical average of the last three weeks”. LLMs also struggle with complex retrieval like “List the ten largest suppliers in a chemical industry sector in a specific district for 2024”. Without structured databases, answers may be inaccurate.

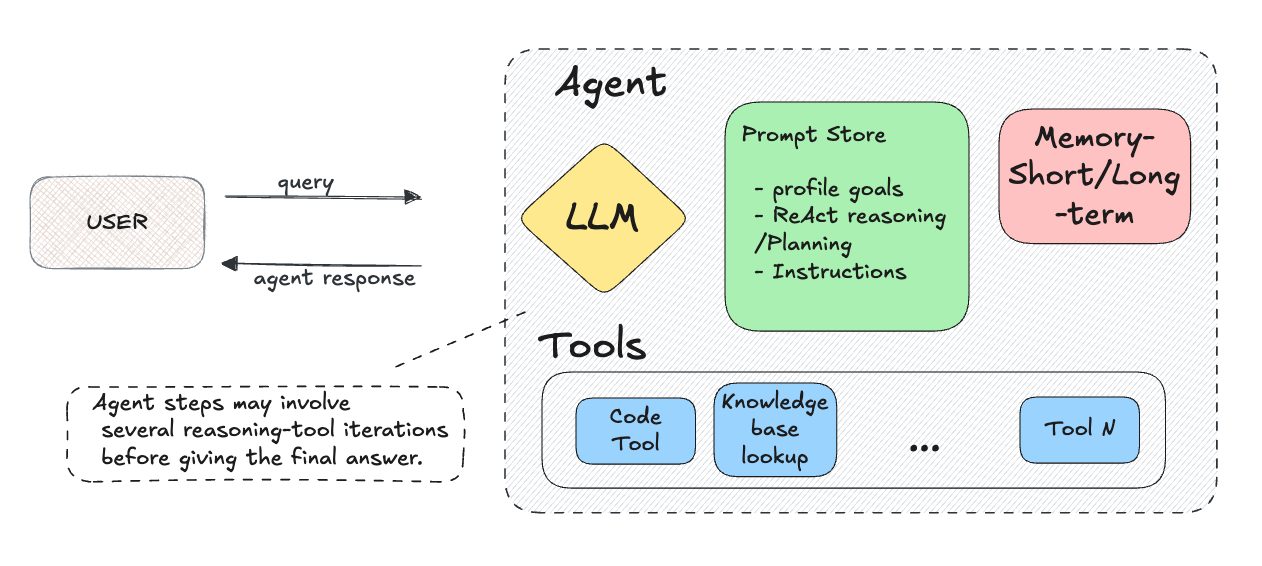

AI agents

AI agents extend the capabilities of LLMs. An agent can be thought of as a standard LLM enhanced with reasoning, memory, and tool access. At its core, the agent often uses the same underlying LLM as a “brain”, but it applies advanced prompt techniques and internal memory to structure reasoning into multi-step chains of thought. This allows the agent to break down complex tasks into smaller, manageable steps, plan execution, and iteratively refine its outputs.

Additionally, AI agents can interface with external tools or systems to perform specific actions, such as querying databases, executing calculations, or retrieving updated information, which then feed back into the chain of thought. By combining autonomous reasoning, memory, and tool usage, agents enable LLMs to move beyond simple text generation and actively solve complex, real-world problems.
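The control flow of such an agent can be sketched as a loop that alternates between deciding and acting. In the sketch below, `scripted_llm` is a hypothetical stand-in that returns hard-coded decisions; in a real agent this would be an LLM call whose output is parsed into an action. The tool, the numbers, and the decision format are likewise invented for illustration.

```python
def average_tool(values: list[float]) -> float:
    """A simple tool the agent can call: arithmetic mean."""
    return sum(values) / len(values)


TOOLS = {"average": average_tool}


def scripted_llm(memory: list[dict]) -> dict:
    """Hypothetical stand-in for the LLM 'brain': decide the next step from memory."""
    if not any(step["tool"] == "average" for step in memory):
        # Step 1: no average computed yet, so request the tool.
        return {"action": "call_tool", "tool": "average", "args": [120, 100, 110]}
    # Step 2: the tool result is in memory, so produce the final answer.
    result = memory[-1]["result"]
    return {"action": "finish", "answer": f"Historical average is {result:.1f}"}


def run_agent(llm, tools: dict, max_steps: int = 5):
    """Loop: ask the LLM for a decision, run the tool, store the result in memory."""
    memory = []
    for _ in range(max_steps):
        decision = llm(memory)
        if decision["action"] == "finish":
            return decision["answer"], memory
        result = tools[decision["tool"]](decision["args"])
        memory.append({"tool": decision["tool"], "args": decision["args"], "result": result})
    return None, memory  # step budget exhausted without a final answer


answer, memory = run_agent(scripted_llm, TOOLS)
print(answer)
```

The tool result is fed back through `memory`, so the next decision can build on it; the `max_steps` budget guards against an agent that never decides to finish.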

Key takeaways

- LLMs derive meaning through a structured pipeline: tokenisation, embeddings, attention, and iterative prediction.

- Prompts matter: clarity, examples, tagging, and role assignment can significantly improve LLMs’ outputs.

- Enhancement paths diverge: fine-tuning suits stable domains, whereas RAG is best for dynamic ones.

- Agents go beyond a traditional LLM: they perform planning, execution, and validation.

In conclusion, LLMs are not magic: they are computational systems where context and accurate queries define results. Understanding how they process text, why prompts shape their behaviour, and what techniques like RAG or fine-tuning add to the picture is essential for using them effectively. As these models continue to evolve, the key to harnessing their full potential lies not only in the underlying algorithms but also in how humans interact with them through thoughtful design, clear goals, and continuous experimentation.