In machine learning, few ideas have managed to unify complexity the way the periodic table once did for chemistry. Now, researchers from MIT, Microsoft, and Google are attempting to do just that with I-Con, short for Information Contrastive Learning. The idea is deceptively simple: treat most machine learning algorithms (classification, regression, clustering, and even large language models) as special cases of one general principle, learning the relationships between data points.

Just as chemical elements fall into predictable groups, the researchers claim, machine learning algorithms also form a pattern. By mapping that pattern, I-Con doesn’t just clarify old methods; it predicts new ones. One such prediction? A state-of-the-art image classification algorithm that requires no human labels.

Imagine a ballroom dinner. Each guest (data point) finds a seat at a table (cluster), ideally near friends (similar data). Some friends sit together, others spread across tables. This metaphor, called the clustering gala, captures how I-Con treats clustering: optimizing how closely data points group based on inherent relationships. It’s not just about who’s next to whom, but about which types of bonds matter, be it visual similarity, shared class labels, or graph connections.

This ballroom analogy extends to all of machine learning. The I-Con framework shows that algorithms differ mainly in how they define those relationships. Change the guest list or seating logic, and you get dimensionality reduction, self-supervised learning, or spectral clustering. It all boils down to preserving certain relationships while simplifying others.

The architecture behind I-Con

At its core, I-Con is built on an information-theoretic foundation. The objective: minimize the difference (KL divergence) between a target distribution, what the algorithm thinks relationships should be, and a learned distribution, the actual model output. Formally, this is written as:

$$\mathcal{L}(\theta, \phi) = \sum_{i} D_{\mathrm{KL}}\big(p_\theta(\cdot \mid i) \,\|\, q_\phi(\cdot \mid i)\big)$$

Different learning techniques arise from how the two distributions, pθ and qϕ, are constructed. When pθ groups images by visual closeness and qϕ groups them by label similarity, the result is supervised classification. When pθ relies on graph structure, and qϕ approximates it through clusters, we get spectral clustering. Even language modeling fits in, treating token co-occurrence as a relationship to be preserved.
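To make the objective concrete, here is a minimal sketch in plain Python. It assumes both distributions come from a Gaussian-style kernel over squared distances, which is only one of the many choices the framework allows; the function names and the `temperature` parameter are illustrative, not taken from the researchers' code.

```python
import math

def sq_dists(points):
    """Pairwise squared Euclidean distances between points (lists of floats)."""
    return [[sum((a - b) ** 2 for a, b in zip(x, y)) for y in points]
            for x in points]

def neighbor_dist(dist_row, i, temperature=1.0):
    """Distribution over the neighbors of point i: closer points get more mass.
    The point itself is excluded (probability 0)."""
    weights = [0.0 if j == i else math.exp(-d / temperature)
               for j, d in enumerate(dist_row)]
    total = sum(weights)
    return [w / total for w in weights]

def kl(p, q, eps=1e-12):
    """KL divergence D_KL(p || q); terms with p_j = 0 contribute nothing."""
    return sum(pj * math.log(pj / max(qj, eps))
               for pj, qj in zip(p, q) if pj > 0)

def icon_loss(inputs, embeddings, temperature=1.0):
    """Sum over points i of KL(p(.|i) || q(.|i)).
    p is built from the input space, q from the learned embedding
    (an assumption made for this sketch)."""
    d_in, d_out = sq_dists(inputs), sq_dists(embeddings)
    return sum(kl(neighbor_dist(d_in[i], i, temperature),
                  neighbor_dist(d_out[i], i, temperature))
               for i in range(len(inputs)))
```

An embedding that keeps input neighbors together scores a lower loss than one that scatters them, which is the sense in which the objective "preserves relationships."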

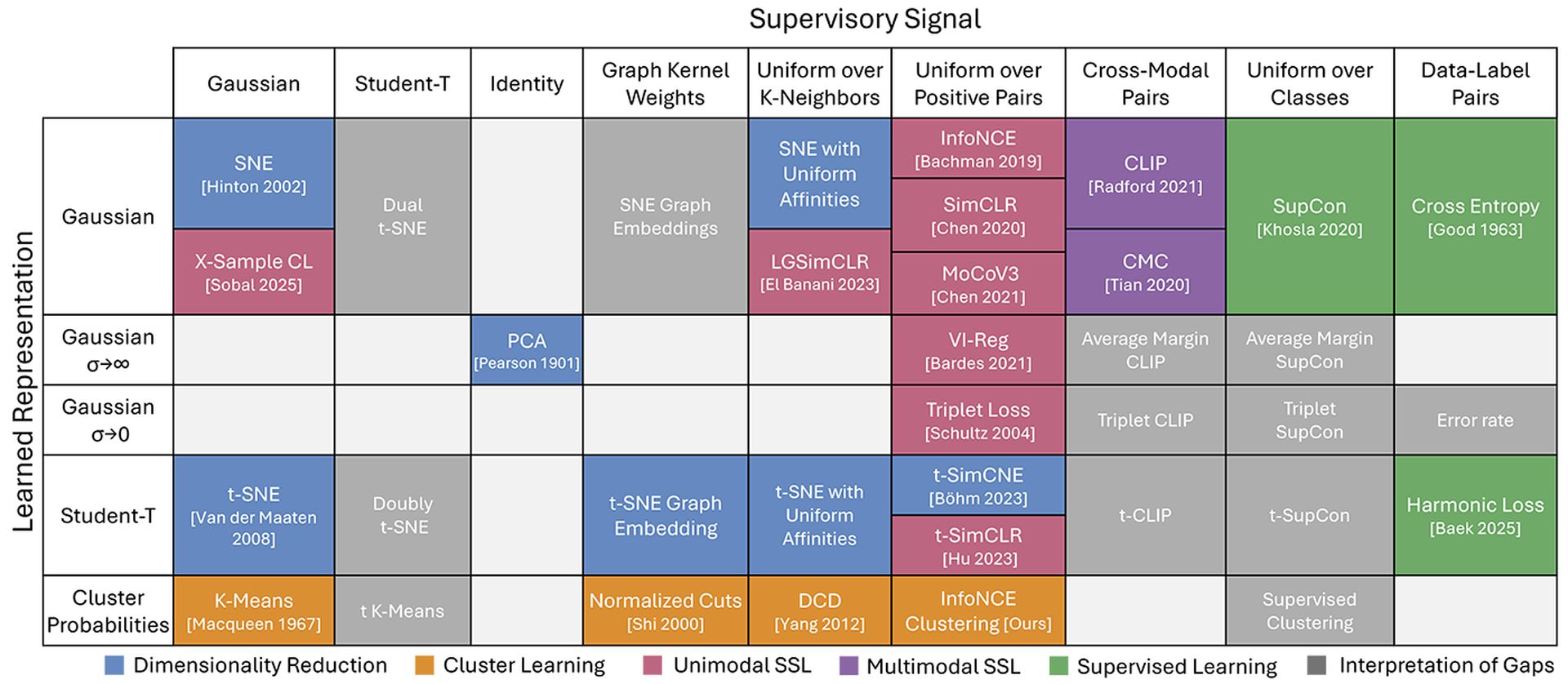

The table that organizes everything

Inspired by chemistry’s periodic table, the I-Con team built a grid categorizing algorithms based on their connection types. Each square in the table represents a unique way data points relate in the input versus output space. Once all known techniques were placed, surprising gaps remained. These gaps didn’t point to missing data—they hinted at methods that could exist but hadn’t been discovered yet.

To test this, the researchers filled in one such gap by combining clustering with debiased contrastive learning. The result: a new method that outperformed existing unsupervised image classifiers on ImageNet by 8%. It worked by injecting a small amount of noise—“universal friendship” among data points—that made the clustering process more stable and less biased toward overconfident assignments.
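In gala terms, a clustering method needs a way to turn seat assignments into "who is my neighbor" probabilities. The sketch below shows one plausible construction, assumed here for illustration rather than quoted from the researchers: pick one of guest i's tables according to i's soft assignment, then pick a guest at that table in proportion to how strongly they sit there. The name `cluster_neighbor_dist` is hypothetical.

```python
def cluster_neighbor_dist(assign):
    """assign[i][c] is the soft probability that point i belongs to cluster c
    (each row sums to 1). Returns q where
        q[i][j] = sum_c assign[i][c] * assign[j][c] / size_c
    and size_c is the total (soft) membership of cluster c."""
    n, m = len(assign), len(assign[0])
    sizes = [sum(assign[k][c] for k in range(n)) for c in range(m)]
    return [[sum(assign[i][c] * assign[j][c] / sizes[c]
                 for c in range(m) if sizes[c] > 0)
             for j in range(n)]
            for i in range(n)]
```

With hard assignments, each point spreads its probability evenly over the members of its own cluster (itself included) and gives zero to everyone else.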

Debiasing plays a central role in this discovery. Traditional contrastive learning penalizes dissimilar samples too harshly, even when those samples might not be truly unrelated. I-Con introduces a better approach: mixing in a uniform distribution that softens overly rigid assumptions about data separations. It’s a conceptually clean tweak with measurable gains in performance.
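The uniform mixing described above fits in a few lines. This is a sketch under stated assumptions: the mixing weight `alpha` and the choice to spread the uniform mass over all n points are illustrative, not confirmed details of the published method.

```python
def debias(p_row, alpha=0.1):
    """Blend a neighbor distribution with a uniform one.
    alpha = 0 leaves p_row unchanged; alpha = 1 makes every point an
    equally likely neighbor ("universal friendship")."""
    n = len(p_row)
    return [(1.0 - alpha) * pj + alpha / n for pj in p_row]
```

For example, a rigid target such as `[0.0, 1.0, 0.0, 0.0]` with `alpha=0.2` becomes roughly `[0.05, 0.85, 0.05, 0.05]`: the strongest relationship still dominates, but no pair is declared completely unrelated.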

Another method involves expanding the definition of neighborhood itself. Instead of looking only at direct nearest neighbors, I-Con propagates through the neighborhood graph, taking “walks” to capture more global structure. These walks simulate how information spreads across nodes, improving the clustering process. Tests on DINO vision transformers indicate that small-scale propagation (walk length of 1 or 2) yields the most gain without overwhelming the model.
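A rough sketch of neighbor propagation follows. It assumes a k-nearest-neighbor graph in which each point steps uniformly to one of its k closest neighbors, and it averages walks of length 1 up to `walk_length`; the exact weighting used in the research may differ, and all names here are hypothetical.

```python
def knn_transition(dists, k):
    """Row-stochastic matrix: from point i, step to each of its k nearest
    neighbors with probability 1/k. dists is a full pairwise distance matrix."""
    n = len(dists)
    P = []
    for i in range(n):
        order = sorted((j for j in range(n) if j != i),
                       key=lambda j: dists[i][j])
        nearest = set(order[:k])
        P.append([1.0 / k if j in nearest else 0.0 for j in range(n)])
    return P

def matmul(A, B):
    """Product of two square matrices stored as lists of lists."""
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)]
            for i in range(n)]

def propagate(P, walk_length):
    """Average of P, P^2, ..., P^walk_length: the chance of reaching j from i
    when the number of steps is chosen uniformly from 1..walk_length."""
    power = P
    total = [row[:] for row in P]
    for _ in range(walk_length - 1):
        power = matmul(power, P)
        total = [[t + s for t, s in zip(t_row, s_row)]
                 for t_row, s_row in zip(total, power)]
    return [[v / walk_length for v in row] for row in total]
```

With `walk_length=1` this reduces to the plain nearest-neighbor graph; with `walk_length=2`, friends of friends start to receive probability as well.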

Performance and payoff

The I-Con framework isn’t just theory. On ImageNet-1K, it beat previous state-of-the-art clustering models like TEMI and SCAN using simpler, self-balancing loss functions. Unlike its predecessors, I-Con doesn’t need manually tuned penalties or size constraints, and the gains hold across DINO ViT-S, ViT-B, and ViT-L backbones.

Debiased InfoNCE Clustering (I-Con) improved Hungarian accuracy by:

- +4.5% on ViT-B/14
- +7.8% on ViT-L/14

It also outperformed k-Means, Contrastive Clustering, and SCAN consistently. The key lies in its clean unification of methods and its adaptability: cluster probabilities, neighbor graphs, and class labels all fall under one umbrella.
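Hungarian accuracy, the metric quoted above, scores an unsupervised clustering by finding the one-to-one matching between cluster IDs and true classes that makes the most predictions correct. The sketch below finds that matching by brute force over permutations, which reaches the same optimum as the Hungarian algorithm but is only practical for a handful of clusters; at ImageNet scale one would use a real assignment solver such as `scipy.optimize.linear_sum_assignment`. The function name is hypothetical.

```python
from itertools import permutations

def hungarian_accuracy(cluster_ids, labels, num_classes):
    """Best achievable accuracy over all one-to-one mappings from cluster IDs
    to class labels. Both IDs and labels are integers in [0, num_classes)."""
    # counts[c][y] = number of points placed in cluster c whose true class is y
    counts = [[0] * num_classes for _ in range(num_classes)]
    for c, y in zip(cluster_ids, labels):
        counts[c][y] += 1
    # Brute-force stand-in for the Hungarian algorithm: try every matching.
    best = max(sum(counts[c][perm[c]] for c in range(num_classes))
               for perm in permutations(range(num_classes)))
    return best / len(labels)

# Cluster names are arbitrary: here cluster 1 lines up with class 0 and
# cluster 0 with class 1, and one point in cluster 2 is misplaced.
acc = hungarian_accuracy([1, 1, 0, 0, 2, 2], [0, 0, 1, 1, 2, 1], 3)
```

In this toy case five of the six points can be matched correctly, so `acc` is 5/6.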

I-Con isn’t just a unifier; it’s a blueprint for invention. By showing that many algorithms are just different ways of choosing neighborhood distributions, it empowers researchers to invent new combinations. Swap one connection type for another. Mix in debiasing. Tune neighborhood depth. Each tweak corresponds to a new entry in the table—a new algorithm ready to be tested.

As MIT’s Shaden Alshammari put it, machine learning is starting to feel less like an art of guesswork and more like a structured design space. I-Con turns learning into exploration—less alchemy, more engineering.

What I-Con really offers is a deeper philosophy of machine learning. It reveals that beneath the vast diversity of models and methods, a common structure may exist—one built not on rigid formulas, but on relational logic. In that sense, I-Con doesn’t solve intelligence. It maps it. And like the first periodic table, it gives us a glimpse of what’s still waiting to be discovered.