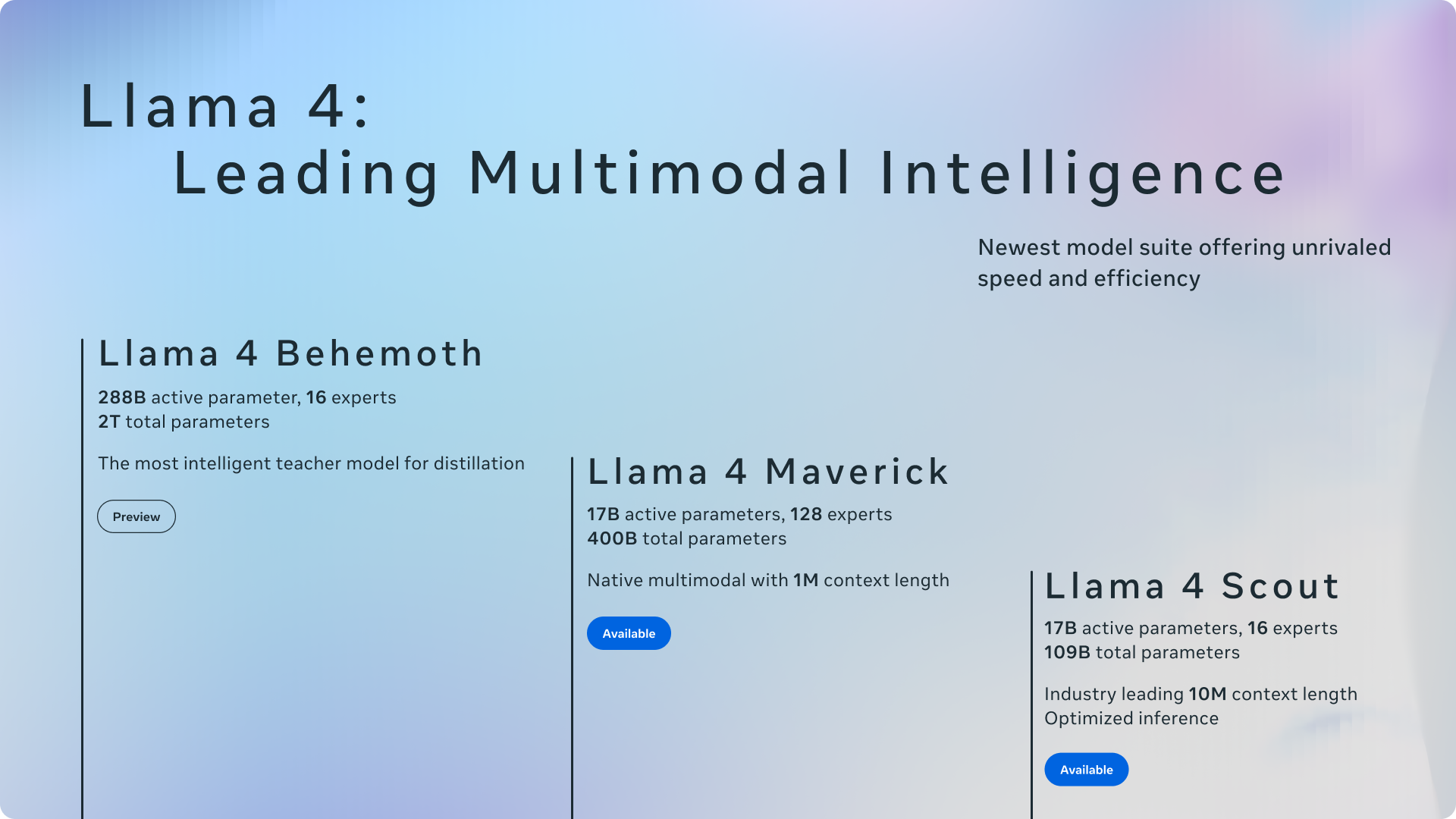

Meta has announced the Llama 4 family, which it describes as its most advanced suite of artificial intelligence models to date. The new generation includes Llama 4 Scout and Llama 4 Maverick, the first of Meta’s open-weight models to offer native multimodality, along with far longer context windows than earlier Llama releases. These models are also Meta’s first to use a mixture-of-experts (MoE) architecture. Meta also previewed Llama 4 Behemoth, a larger LLM that is still in training and is designed to serve as a teacher for future models.

This significant update to the Llama ecosystem arrives roughly a year after the release of Llama 3 in 2024, underscoring Meta’s commitment to open innovation in the AI space. According to Meta, making leading models openly available is crucial for fostering the development of personalized experiences.

Scout and Maverick: Multimodal models with innovative architecture

Llama 4 Scout and Llama 4 Maverick are the first models released in the series. Llama 4 Scout has 17 billion active parameters across 16 experts, while Llama 4 Maverick also has 17 billion active parameters but draws on a larger pool of 128 experts within its MoE architecture. Meta highlights the efficiency of this design, noting that Llama 4 Scout fits on a single H100 GPU (with Int4 quantization) and Llama 4 Maverick fits on a single H100 host, which simplifies deployment.
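To make the deployment claim concrete, the following is a rough, weights-only memory estimate in Python. It is a sketch under stated assumptions rather than Meta's sizing method: the 80 GB per H100 and 8 GPUs per host figures are assumptions not taken from the article, and memory for the KV cache and activations is ignored. The article does not give Scout's total parameter count, so the example uses the 400 billion total parameters this article quotes for Maverick.

```python
# Back-of-the-envelope weight memory for a quantized model.
# Assumptions (not from the article): 80 GB of memory per H100 and
# 8 GPUs per host. KV cache and activation memory are ignored.

BITS_PER_WEIGHT = {"bf16": 16, "fp8": 8, "int4": 4}
H100_MEMORY_GB = 80   # assumed per-GPU memory
GPUS_PER_HOST = 8     # assumed host size


def weight_memory_gb(total_params: float, precision: str) -> float:
    """Return the memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_weight = BITS_PER_WEIGHT[precision] / 8
    return total_params * bytes_per_weight / 1e9


def fits(total_params: float, precision: str, num_gpus: int) -> bool:
    """Check whether the weights fit in the combined memory of num_gpus GPUs."""
    return weight_memory_gb(total_params, precision) <= num_gpus * H100_MEMORY_GB


MAVERICK_TOTAL_PARAMS = 400e9  # total parameter count quoted in this article

for precision in BITS_PER_WEIGHT:
    size_gb = weight_memory_gb(MAVERICK_TOTAL_PARAMS, precision)
    on_host = fits(MAVERICK_TOTAL_PARAMS, precision, GPUS_PER_HOST)
    print(f"{precision}: {size_gb:.0f} GB of weights, fits on one host: {on_host}")
```

Under these assumptions the precision chosen for the weights decides whether a model of this size fits on one host, which is why the article's single-GPU claim for Scout is tied to Int4 quantization.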

The adoption of a mixture-of-experts (MoE) architecture is a key change. In MoE models, only a fraction of the total parameters is activated for each token, which makes training and inference more compute-efficient and delivers higher quality for a given compute budget. For instance, Llama 4 Maverick has 400 billion total parameters, but only 17 billion, about 4% of the total, are active for any given token.
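The idea that only some parameters run per token can be illustrated with a toy top-k router. This is a generic MoE sketch, not Meta's routing implementation, which the article does not describe. The experts here are hypothetical scaling functions standing in for feed-forward sub-networks, and the router scores are random numbers standing in for the output of a learned router.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k=1):
    """Select the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_layer(token, experts, router_scores, top_k=1):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = route_token(router_scores, top_k)
    return sum(weight * experts[i](token) for i, weight in gates.items())


# 16 toy "experts", each a simple scaling function (hypothetical stand-ins
# for feed-forward sub-networks).
NUM_EXPERTS = 16
experts = [lambda x, scale=scale: scale * x for scale in range(1, NUM_EXPERTS + 1)]

# Random scores stand in for a learned router's output for one token.
random.seed(0)
router_scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(router_scores, top_k=1)
output = moe_layer(2.0, experts, router_scores, top_k=1)
print(f"experts run: {sorted(gates)} of {NUM_EXPERTS}, output: {output}")

# Active share of Maverick's parameters, using the article's figures.
print(f"active fraction: {17e9 / 400e9:.2%}")
```

Only the experts returned by `route_token` are evaluated, so compute per token scales with `top_k` rather than with the total number of experts.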

Both Llama 4 Scout and Llama 4 Maverick are natively multimodal, using early fusion to process text and vision tokens within a unified model backbone. This allows joint pre-training on large amounts of unlabeled text, image, and video data. Meta has also improved the vision encoder in Llama 4: it is based on MetaCLIP but trained separately, in conjunction with a frozen Llama model, so that the encoder adapts better to the LLM.
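As a rough illustration of early fusion, the sketch below embeds text tokens and image patches into the same vector width and joins them into a single sequence that one backbone could consume. Everything here is hypothetical and simplified: `embed_text`, `embed_image`, and the toy embedding width are illustrative stand-ins, not Llama 4's actual tokenizer or vision encoder.

```python
from dataclasses import dataclass
from typing import List

EMBED_DIM = 4  # toy embedding width shared by both modalities


@dataclass
class Token:
    modality: str            # "text" or "image"
    embedding: List[float]   # vector in the shared embedding space


def embed_text(token_ids: List[int]) -> List[Token]:
    """Hypothetical text embedding: one vector per token id."""
    return [Token("text", [float(t)] * EMBED_DIM) for t in token_ids]


def embed_image(patches: List[List[float]]) -> List[Token]:
    """Hypothetical vision encoder: one vector per image patch."""
    return [Token("image", [sum(p) / len(p)] * EMBED_DIM) for p in patches]


def early_fusion(image_tokens: List[Token], text_tokens: List[Token]) -> List[Token]:
    """Merge both modalities into a single sequence for one shared backbone."""
    return image_tokens + text_tokens


sequence = early_fusion(
    embed_image([[0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]),
    embed_text([101, 7, 42]),
)
print([t.modality for t in sequence])
```

The point of the sketch is that the backbone sees one mixed sequence from its first layer, rather than a text model with image features attached at a later stage.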

Llama 4 Behemoth: A teacher model with promising benchmarks

Meta also gave a first look at Llama 4 Behemoth, a teacher model with 288 billion active parameters and nearly two trillion total parameters across 16 experts. Meta claims that Behemoth outperforms leading models such as GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on STEM-focused benchmarks such as MATH-500 and GPQA Diamond. Although Llama 4 Behemoth is still in training and not yet available, Meta used it as the teacher when “codistilling” Llama 4 Maverick, which it says led to significant quality improvements.
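The article does not describe Meta's codistillation loss, so the following is only a sketch of standard knowledge distillation, in which a student is trained against both the teacher's softened output distribution and the ground-truth label. The `alpha` and `temperature` values and the example logits are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_target_loss(student_logits, teacher_logits, temperature):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def hard_target_loss(student_logits, label):
    """Cross-entropy of the student against the ground-truth class."""
    return -math.log(softmax(student_logits)[label])


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=2.0):
    """Weighted mix of soft (teacher) and hard (label) targets."""
    # Scaling by temperature**2 keeps the soft-loss gradients comparable
    # in magnitude across temperatures (a common convention).
    soft = soft_target_loss(student_logits, teacher_logits, temperature) * temperature ** 2
    hard = hard_target_loss(student_logits, label)
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]   # illustrative teacher logits
student = [2.0, 1.5, 0.5]   # illustrative student logits
print(f"loss: {distillation_loss(student, teacher, label=0):.4f}")
```

A student whose logits match the teacher's has zero soft-target loss, so with `alpha=1.0` the objective reduces to imitating the teacher alone.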

Context length and multilingual capabilities

A standout feature of the Llama 4 models is their much longer context length. Llama 4 Scout offers a 10 million token input context window, which Meta describes as industry-leading and which is a dramatic increase from Llama 3’s 128K. This extended context enables capabilities such as multi-document summarization and reasoning over large codebases. Both models were pre-trained on a dataset exceeding 30 trillion tokens, more than double that of Llama 3. The data covers over 200 languages, more than 100 of which have over 1 billion tokens each.
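To give a sense of scale, the sketch below compares the two context windows and estimates whether a set of documents would fit. It relies on loud assumptions: "128K" is read as 128,000 tokens, and the four-characters-per-token ratio is a rough heuristic that varies by tokenizer and language, not a property of Llama 4.

```python
import math

SCOUT_CONTEXT_TOKENS = 10_000_000   # from the article
LLAMA3_CONTEXT_TOKENS = 128_000     # "128K" from the article, read as 128,000
CHARS_PER_TOKEN = 4                 # rough assumption, not a Llama 4 property


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents, context_tokens, reserved_for_output=0):
    """Check whether all documents plus an output budget fit in the window."""
    needed = sum(estimate_tokens(doc) for doc in documents)
    return needed + reserved_for_output <= context_tokens


ratio = SCOUT_CONTEXT_TOKENS / LLAMA3_CONTEXT_TOKENS
print(f"context window ratio: {ratio:.1f}x")

# Ten hypothetical documents of 400,000 characters each
# (about 100,000 estimated tokens per document).
documents = ["x" * 400_000 for _ in range(10)]
print("fits in 128K window:", fits_in_context(documents, LLAMA3_CONTEXT_TOKENS))
print("fits in 10M window:", fits_in_context(documents, SCOUT_CONTEXT_TOKENS))
```

For real workloads the estimate should come from the model's own tokenizer, since character-based heuristics can be off by a wide margin for code and non-English text.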

Integration into Meta apps and developer availability

Llama 4 Scout and Llama 4 Maverick are available for download today on llama.com and Hugging Face. Meta also announced that these models now power Meta AI in WhatsApp, Messenger, Instagram Direct, and on the Meta.AI website, so users can try the new Llama 4 models directly.

Meta emphasized its commitment to developing helpful and safe AI models. Llama 4 incorporates best practices outlined in its AI Protections Developer Use Guide, including mitigations at various stages of development. Meta also highlighted open-sourced safeguards such as Llama Guard and Prompt Guard, which help developers identify and prevent harmful inputs and outputs.

On the well-known issue of bias in LLMs, Meta stated that Llama 4 improves significantly on earlier models. According to Meta, the model refuses fewer prompts on debated political and social topics and responds in a more balanced way across different viewpoints, with performance comparable to Grok in this area.