More than half of enterprise employees who use generative AI (GenAI) assistants at work have admitted to entering sensitive company data into publicly available tools, according to a recent survey by TELUS Digital Experience. The survey, conducted in January 2025, gathered responses from 1,000 employees at U.S.-based companies with at least 5,000 staff members.

Shadow AI use surges as employees feed confidential data to GenAI

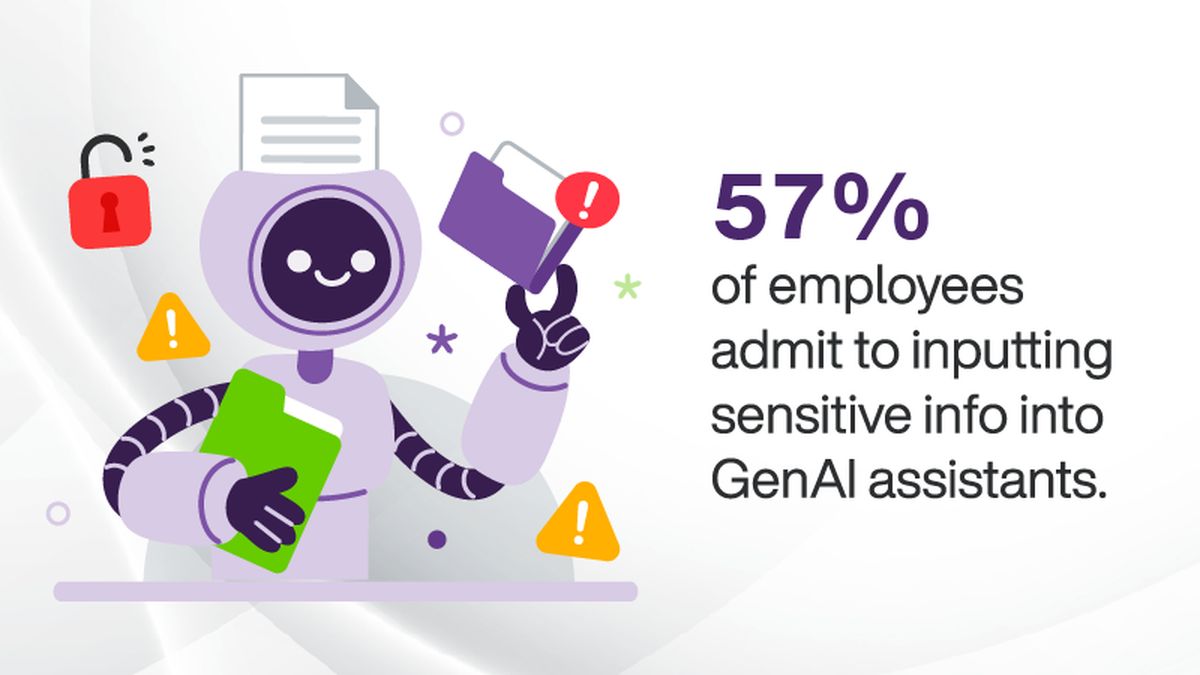

The survey found that 57% of these employees had entered confidential information into AI platforms such as ChatGPT, Google Gemini, and Microsoft Copilot. Furthermore, 68% reported accessing GenAI assistants through personal accounts rather than company-sanctioned platforms. This practice, known as ‘shadow AI’, takes place without IT and security oversight and heightens the risk of data exposure and compliance violations.

Employees disclosed various categories of sensitive data entered into public GenAI tools: 31% reported entering personal details like names and email addresses, 29% shared project-specific information including unreleased product details, 21% acknowledged inputting customer data like order histories, and 11% admitted to entering financial information such as revenue figures and budgets.

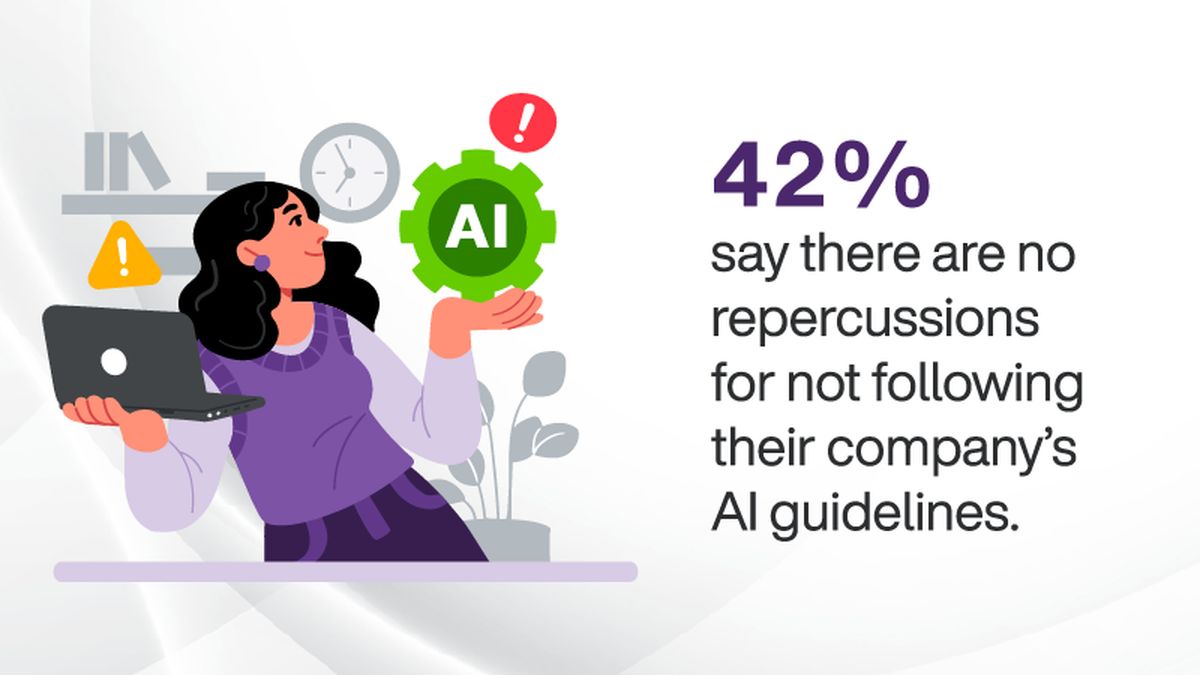

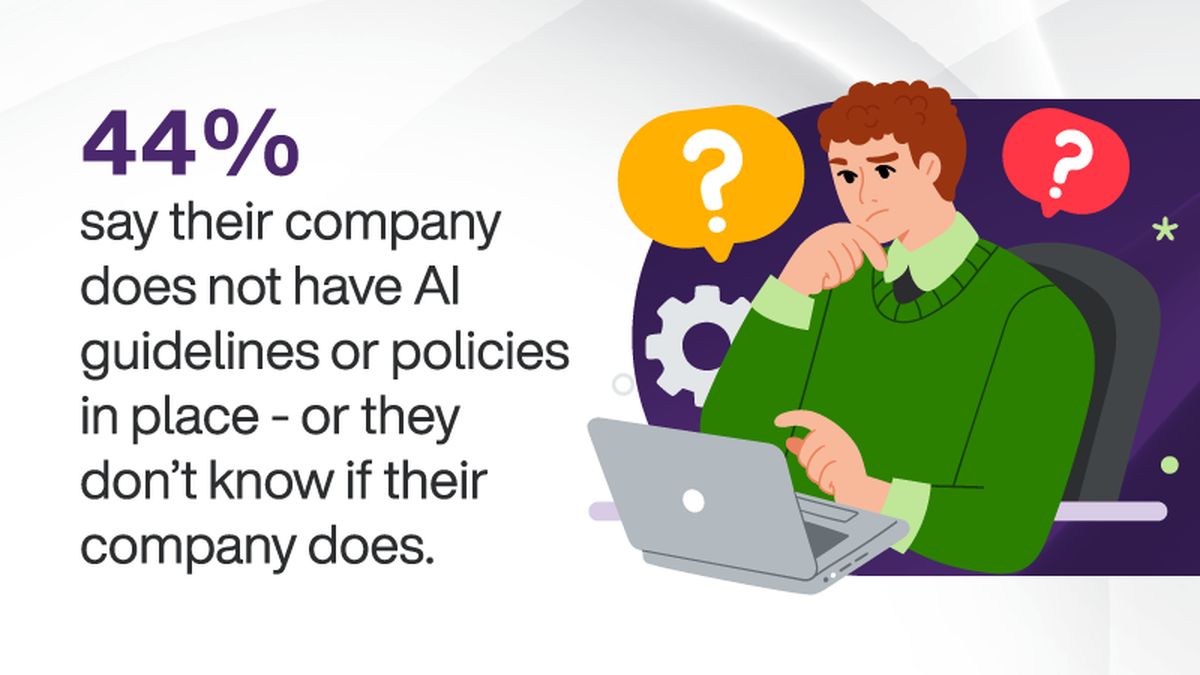

Although 29% of respondents said their organizations have clear AI usage policies that prohibit entering sensitive information into GenAI tools, enforcement of these policies is inconsistent. Only 24% of employees said they had received mandatory training on AI usage, while 44% were unsure whether their companies had AI policies at all. Furthermore, 50% were unsure whether they were adhering to AI-related guidelines, and 42% said there were no consequences for failing to follow them.

In terms of productivity, 60% of employees stated that AI assistants help them work faster, 57% said these tools improve efficiency, and 49% reported enhanced work performance. A significant 84% expressed a desire to continue using AI in their roles, with 51% attributing improvements in creativity to AI and 50% noting that AI facilitates the automation of repetitive tasks.

Bret Kinsella, General Manager of Fuel iX™ at TELUS Digital, emphasized the dual nature of AI adoption, stating, “Generative AI is proving to be a productivity superpower for hundreds of business tasks. If their company doesn’t provide AI tools, they’ll bring their own, which is problematic.” He warned that organizations remain largely unaware of the risks associated with shadow AI despite benefiting from productivity gains.

The survey found that 22% of employees with access to a company-provided GenAI assistant still opted to use personal accounts. Kinsella stressed the importance of aligning AI solutions with security and compliance measures, urging organizations to implement structured AI policies and employee training programs and to develop secure AI platforms that close these security gaps.

TELUS Digital is set to present the survey results at Mobile World Congress 2025 (MWC25), held March 3-6 in Barcelona. The company will showcase its Fuel iX platform, which is designed to let organizations provide AI access while maintaining data security and compliance. Hesham Fahmy, Chief Information Officer at TELUS, highlighted the need for secure AI solutions that enable safe experimentation while protecting customer trust.

The TELUS Digital AI at Work survey collected responses from 1,000 adults aged 18 and older who are employed by large enterprises and who confirmed that they use AI assistants at work.

Featured image credit: Zan Lazaveric/Unsplash