Building on the success of Llama 2, Meta AI has released Code Llama 70B, a larger and more capable code generation model. It can write code in several languages, including Python, C++, Java, and PHP, from natural language prompts or existing code snippets.

Code Llama 70B stands as one of the largest open-source AI models for code generation, setting a new benchmark in this field. Its aim is to automate software creation and modification, ultimately making software development more efficient, accessible, and creative. Imagine describing your desired program to your computer and having it code it for you. Or effortlessly modifying existing code with simple commands. Perhaps even translating code between languages seamlessly. These are just a few possibilities unlocked by models like Code Llama 70B.

However, generating code presents unique challenges. Natural language tolerates ambiguity; code does not. It must follow strict syntax rules and produce exactly the intended output and behavior. Code can also be long and complex, so a model needs a lot of context to understand it and extend it correctly. Overcoming these hurdles takes models trained on very large datasets with substantial computing power.

What is Code Llama 70B?

Code Llama 70B is a large language model (LLM) trained on 1 trillion tokens of code and code-related data, twice the 500 billion tokens used for the smaller Code Llama models. Meta has also reported that Code Llama models, which are trained on sequences of 16,000 tokens, show improvements on inputs of up to 100,000 tokens; a long context is what lets a model process and generate longer, more intricate code.

Code Llama 70B builds upon Llama 2, Meta's family of general-purpose LLMs, whose largest version has 70 billion parameters. The specialized version is further trained on code. Like Llama 2, it is a transformer that relies on self-attention, the mechanism that lets it learn relationships and dependencies within code.

Code Llama 70B can be used for a variety of tasks, including:

- Generating code from natural language descriptions

- Translating code between different programming languages

- Writing unit tests

- Debugging code

- Answering questions about code

New heights in accuracy and adaptability

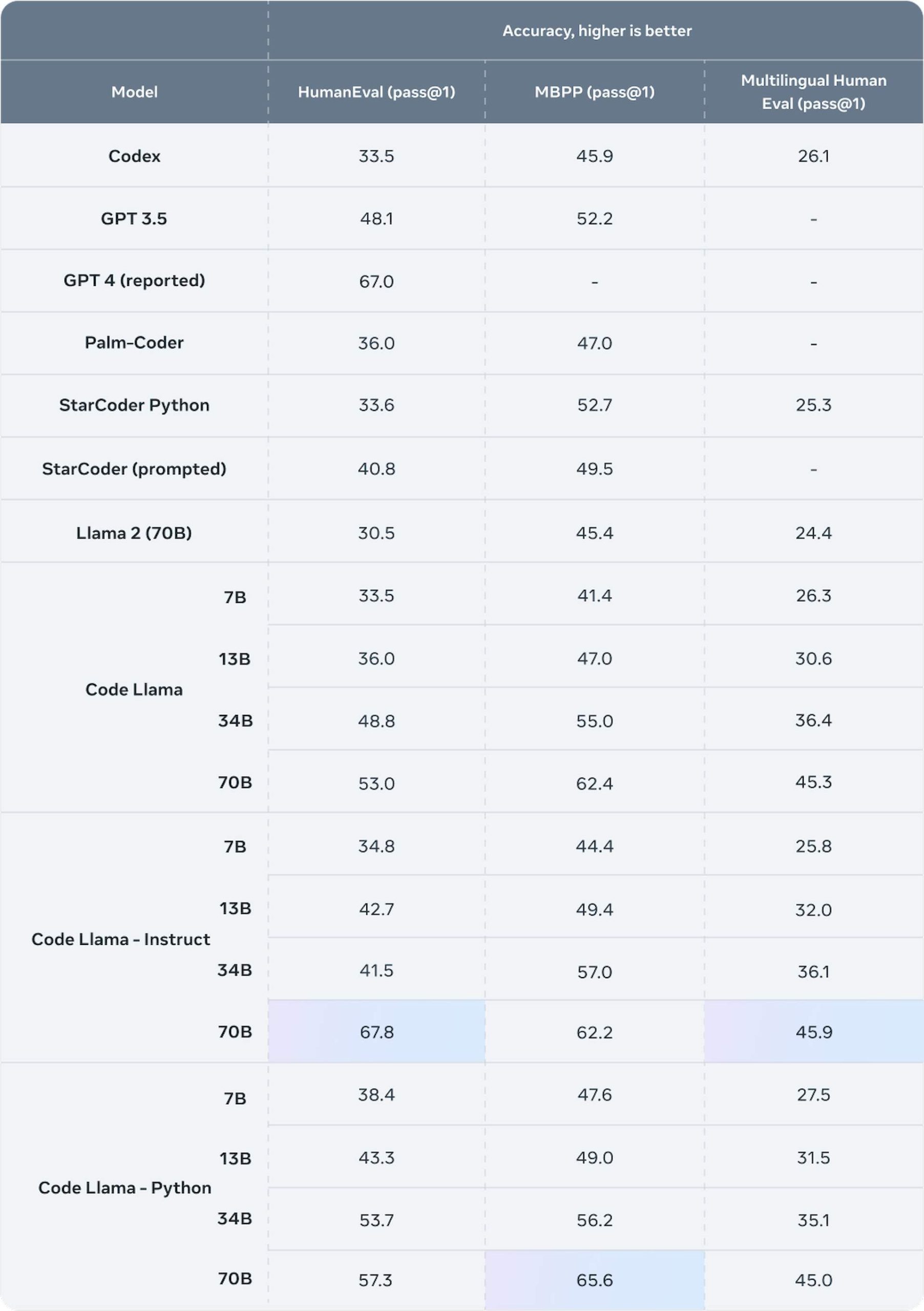

One of Code Llama 70B’s highlights is CodeLlama-70B-Instruct, a variant tuned to follow natural language instructions and generate the corresponding code. It achieved a score of 67.8 on HumanEval, a benchmark of 164 programming problems that measures functional correctness by running the generated code against unit tests.
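
To make the benchmark concrete, here is a minimal sketch of how a HumanEval-style score can be computed. It is not Meta's evaluation harness. A candidate solution counts as correct only if it passes the problem's unit tests, and the `pass_at_k` estimator follows the formula published with the original HumanEval benchmark. The helper names and the toy problem are illustrative assumptions, and a real harness would run untrusted code in a sandbox with timeouts.

```python
from math import comb


def passes_tests(candidate_src: str, test_src: str) -> bool:
    """Return True if the candidate code runs and satisfies every assertion.

    Illustrative only: exec() on model output is unsafe outside a sandbox.
    """
    namespace: dict = {}
    try:
        exec(candidate_src, namespace)  # define the candidate function
        exec(test_src, namespace)       # run the unit tests against it
    except Exception:
        return False
    return True


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed, k: attempts allowed.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Toy problem (hypothetical, not taken from HumanEval).
good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b):\n    return a - b\n"
tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"

results = [passes_tests(src, tests) for src in (good, bad)]
score = pass_at_k(n=len(results), c=sum(results), k=1)
```

With one sample per problem (k = 1), the estimate reduces to the fraction of samples that pass, so a benchmark score is typically read as the percentage of problems solved.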

This surpasses previous open-model results (CodeGen-16B-Mono: 29.3, StarCoder: 40.1) and rivals closed models (GPT-4: 68.2, Gemini Pro: 69.4). CodeLlama-70B-Instruct handles tasks such as sorting, searching, filtering, and data manipulation, as well as implementing algorithms such as binary search, Fibonacci, and factorial.

Code Llama 70B also features CodeLlama-70B-Python, a variant optimized for Python, a widely used language. Trained on an additional 100 billion tokens of Python code, it excels in generating fluent and accurate Python code. Its capabilities span web scraping, data analysis, machine learning, and web development.

Accessible for research and commercial use

Code Llama 70B is released under the same license as Llama 2 and prior Code Llama models. Researchers and commercial users can download, use, and modify it for free. The model can be used through platforms and frameworks such as Hugging Face, PyTorch, TensorFlow, and Jupyter Notebook, and Meta AI provides documentation and tutorials covering usage and fine-tuning for different purposes and languages.
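
As a rough illustration of the Hugging Face route, the sketch below loads the instruct variant with the `transformers` library and asks it for a piece of code. It assumes that `transformers`, `torch`, and `accelerate` are installed, that `codellama/CodeLlama-70b-Instruct-hf` is the checkpoint ID you have access to, and that enough GPU memory is available for a 70B model. The helper names `build_messages` and `generate_code` are hypothetical.

```python
MODEL_ID = "codellama/CodeLlama-70b-Instruct-hf"  # assumed Hugging Face checkpoint ID


def build_messages(instruction: str) -> list[dict]:
    """Wrap a natural language request in the chat-message format."""
    return [{"role": "user", "content": instruction}]


def generate_code(instruction: str, max_new_tokens: int = 256) -> str:
    """Load the model and return its reply. Heavy: downloads the weights."""
    # Imported here so the helpers above work without the libraries installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.float16,  # half precision to reduce memory use
        device_map="auto",          # needs `accelerate`; spreads layers over devices
    )
    # Let the tokenizer apply the model's own chat template to the request.
    input_ids = tokenizer.apply_chat_template(
        build_messages(instruction),
        add_generation_prompt=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0, input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_code("Write a Python function that checks whether a number is prime.")` would then return the model's reply as a string. Exact behavior may vary with the installed `transformers` version.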

Mark Zuckerberg, Meta CEO, stated in a Facebook post: “We’re open-sourcing a new and improved Code Llama, including a larger 70B parameter model. Writing and editing code has emerged as one of the most crucial uses of AI models today. The ability to code has also proven valuable for AI models to process information in other domains more rigorously and logically. I’m proud of the progress here, and I look forward to seeing these advancements incorporated into Llama 3 and future models as well.”

How to install Code Llama 70B

Here are the steps to run Code Llama 70B locally for free using LM Studio:

- Request access to the download from Meta AI, or open the model card for Code Llama 70B

- Click on the download button next to the base model

- Open the conversation tab in LM Studio

- Select the Code Llama model that you just downloaded

- Start chatting with the model within the LM Studio interface
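
Beyond the chat interface, a locally loaded model can also be queried from a script. The sketch below assumes that LM Studio's local server feature is enabled and listening on its usual OpenAI-compatible address, `http://localhost:1234/v1`. The model name `codellama-70b` is a placeholder and the helper names are hypothetical, so adjust both to match your setup.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed LM Studio local server address


def build_request(prompt: str, model: str = "codellama-70b") -> urllib.request.Request:
    """Build a chat-completion request; the model name is a placeholder."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # low temperature for more deterministic code
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the model's reply."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Write a unit test for a function that reverses a string.")` returns the reply as plain text.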

Code Llama 70B is poised to significantly impact code generation and the software development industry by providing a powerful and accessible tool for code creation and enhancement. It has the potential to lower the barrier to entry for aspiring coders by offering guidance and feedback based on natural language instructions. Furthermore, Code Llama 70B could pave the way for novel applications and use cases, including code translation, summarization, documentation, analysis, and debugging.

Featured image credit: WangXiNa/Freepik.