Meta has introduced two new generative AI tools: Emu Video and Emu Edit. Both mark significant progress in the field, giving people new ways to express their creativity.

Although AI-generated images and videos have drawn much criticism, using them responsibly brings many potential benefits. Users can now create almost anything they can imagine in seconds, including AI-generated comics, even without any artistic training. All a person needs to do to create a striking visual is think of a prompt and write it down.

Most of us are aware of how focused Meta has been on AI technologies in recent years. A few days ago, the company announced two powerful tools in a blog post, one for video generation and one for image editing.

What is Emu Video?

Emu Video is a text-to-video generation system built on diffusion models. It splits video generation into two distinct stages:

- Generating images based on text prompts

- Subsequently creating videos conditioned on both text and images

This factorized approach gives Emu Video a simpler and more efficient workflow than earlier methods that relied on a deeper cascade of models. Emu Video uses just two diffusion models to produce four-second videos at 512×512 resolution and 16 frames per second.
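
The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `VideoSpec`, `generate_image`, `generate_video`, and `text_to_video` are hypothetical names, the two stage functions are placeholders rather than real diffusion models, and none of it reflects Meta's actual code. The sketch mainly shows how the stages chain together and how the clip length follows from the quoted numbers (4 seconds at 16 frames per second gives 64 frames).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VideoSpec:
    """Output settings quoted in the article (names are hypothetical)."""
    width: int = 512
    height: int = 512
    seconds: int = 4
    fps: int = 16

    @property
    def num_frames(self) -> int:
        # Total frames in the clip: duration times frame rate.
        return self.seconds * self.fps


def generate_image(prompt: str, spec: VideoSpec) -> dict:
    """Stage 1 placeholder (hypothetical): text prompt -> one image."""
    return {"prompt": prompt, "size": (spec.width, spec.height)}


def generate_video(prompt: str, image: dict, spec: VideoSpec) -> List[dict]:
    """Stage 2 placeholder (hypothetical): text + image -> list of frames."""
    return [
        {"index": i, "size": image["size"], "prompt": prompt}
        for i in range(spec.num_frames)
    ]


def text_to_video(prompt: str, spec: VideoSpec = VideoSpec()) -> List[dict]:
    """Chain the two stages: the video is conditioned on text and the stage 1 image."""
    image = generate_image(prompt, spec)
    return generate_video(prompt, image, spec)


frames = text_to_video("a corgi surfing a wave")
print(len(frames))  # 64
```

Keeping the stages as separate functions mirrors the factorization: the second stage only needs a prompt and an image, regardless of where that image came from.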

In human evaluations, Emu Video outperformed prior work by a wide margin: 96% of respondents preferred it over Make-A-Video for quality, and 85% preferred it for faithfulness to the text prompt.

The versatility of Emu Video is further demonstrated by its ability to “animate” user-provided images based on text prompts.

Key features include:

- Unified architecture for video generation tasks

- Support for:

  - Text-only inputs

  - Image-only inputs

  - Combined text and image inputs

- A factorized approach for efficient training

- State-of-the-art performance in human evaluations
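
As a rough sketch of what a unified interface over these input modes might look like, the hypothetical helper below decides which stages a request would pass through. The function name `plan_stages`, the stage labels, and the mapping from input mode to stages are all assumptions made for illustration; the article does not describe Emu Video's internals at this level.

```python
from typing import List, Optional


def plan_stages(prompt: Optional[str] = None, image: Optional[str] = None) -> List[str]:
    """Return the (hypothetical) stages a request would pass through."""
    if prompt is None and image is None:
        raise ValueError("provide a text prompt, an image, or both")
    stages = []
    if image is None:
        # Text-only input: an image has to be generated first.
        stages.append("text-to-image")
    # Every mode ends with the video stage, conditioned on whatever is available.
    stages.append("image-to-video")
    return stages


print(plan_stages(prompt="a robot dancing"))
print(plan_stages(image="photo.png"))
print(plan_stages(prompt="make it snow", image="photo.png"))
```

In this sketch, a user-provided image simply skips the first stage, which is one plausible reading of how the same model could both generate videos from text and animate existing images.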

Taken together, these choices set Emu Video apart from earlier text-to-video systems. Generating an image first and then conditioning the video on both the text and that image keeps the pipeline to two diffusion models, while the ability to animate a user-provided image lets people tailor videos to their own needs and preferences.

You may learn more about Emu Video using the link here.

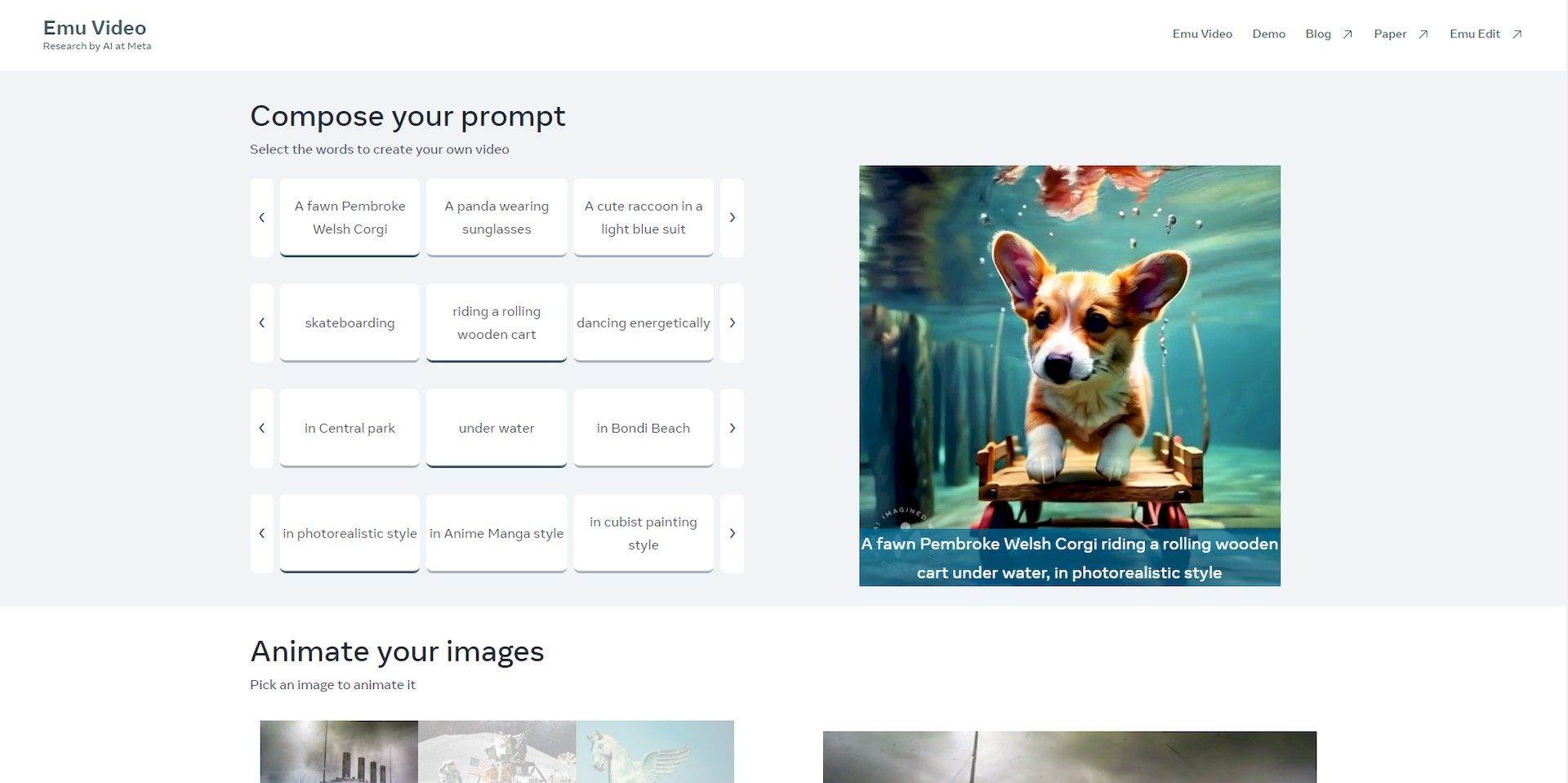

Meta has also released a demo site for users who want to check out Emu’s video generation capabilities.

The demo offers many pre-generated videos: you can choose the subject, activity, location, and generation style, and a search tab at the bottom of the site lets you browse generated videos. Even if you can’t generate your own video, you can use this link to see the videos that Meta generated during the research.

What is Emu Edit?

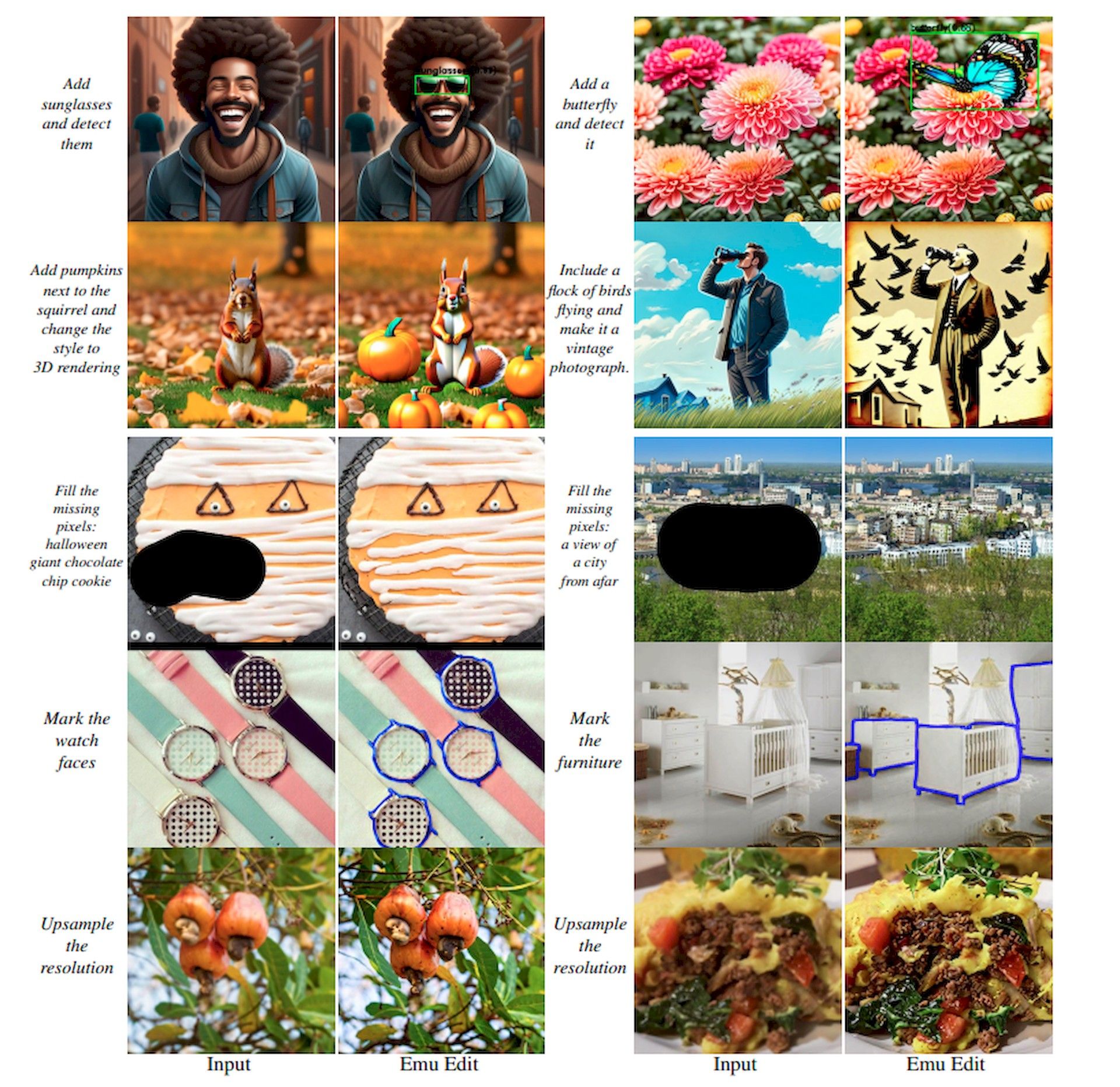

Emu Edit is a powerful image editing platform that offers precise control over image editing tasks through recognition and generation techniques. Unlike traditional image manipulation methods that often result in over-modification or under-performance, Emu Edit precisely follows instructions, ensuring that only relevant pixels are altered. This means that when adding text to a baseball cap, the cap itself remains unchanged. Meta’s key insight is integrating computer vision tasks as instructions to image generation models, offering unprecedented control in image generation and editing.
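
The "only relevant pixels are altered" property can be stated as a simple check. The sketch below is not how Emu Edit works; it is a hypothetical test, with made-up helper names (`changed_pixels`, `edit_is_local`) and a toy grayscale image, that verifies an edit touched nothing outside an allowed region, such as the area where text is added to a cap.

```python
from typing import List, Set, Tuple

Image = List[List[int]]  # toy grayscale image: rows of pixel values


def changed_pixels(before: Image, after: Image) -> Set[Tuple[int, int]]:
    """Return the (row, col) coordinates whose value differs between the images."""
    return {
        (r, c)
        for r, row in enumerate(before)
        for c, value in enumerate(row)
        if after[r][c] != value
    }


def edit_is_local(before: Image, after: Image, allowed: Set[Tuple[int, int]]) -> bool:
    """True if every changed pixel lies inside the allowed region."""
    return changed_pixels(before, after) <= allowed


# Toy data, invented for illustration only.
before = [[0, 0, 0], [0, 5, 5], [0, 5, 5]]
after = [[0, 0, 0], [0, 9, 5], [0, 5, 5]]
logo_region = {(1, 1), (1, 2)}

print(changed_pixels(before, after))              # {(1, 1)}
print(edit_is_local(before, after, logo_region))  # True
```

An over-modifying editor would fail this check by changing pixels outside `logo_region`, while an under-performing one would leave the set of changed pixels empty.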

Emu Edit was trained on a large dataset of 10 million synthesized samples, which Meta says yields superior edits in terms of instruction faithfulness and image quality. In Meta’s evaluations, Emu Edit demonstrated state-of-the-art performance across a range of image editing tasks, outperforming current methods.

Emu Edit’s key features include:

- Free-form editing through instructions

- Precise pixel alteration

- Computer vision tasks expressed as instructions, giving finer control over generation and editing

The platform’s exceptional editing results and state-of-the-art performance make it an excellent choice for users looking to create high-quality images.

Readers can consult the Emu Edit paper to learn more about Meta’s latest generative model. The paper provides a detailed overview of Emu Edit’s architecture, training methodology, and performance metrics. It also includes examples of Emu Edit’s capabilities, demonstrating the platform’s versatility and power.

Meta’s AI ambition

Meta (formerly Facebook) has invested heavily in artificial intelligence (AI) research and development in recent years, creating a wide range of AI-focused products and initiatives. These are being used to improve the company’s core apps, such as Facebook, Instagram, and WhatsApp, as well as to develop new products and services.

Meta’s AI-powered translation model SeamlessM4T bridges language barriers, handling speech and text translation across nearly 100 languages.

AI-powered image and video recognition capabilities also empower Meta to identify objects, people, and scenes within visual content. This technology plays a pivotal role in refining ad relevance, facilitating content discovery, and combating the spread of inappropriate material.

Meta’s AI chatbots serve as digital assistants, providing customer support, answering inquiries, and executing tasks with efficiency and precision. These chatbots are readily accessible across Facebook Messenger, WhatsApp, and other Meta platforms.

Meta’s AI ambitions also extend to the development of advanced language models, such as Llama 2, which has demonstrated strong capabilities in natural language understanding, question answering, and text generation.

We don’t yet know what the future holds, but one thing is certain: technology is developing at an accelerating pace, and soon it will be much easier to create a AAA game or a movie that rivals a Hollywood masterpiece.

Feature image credit: Igor Surkov/Unsplash.