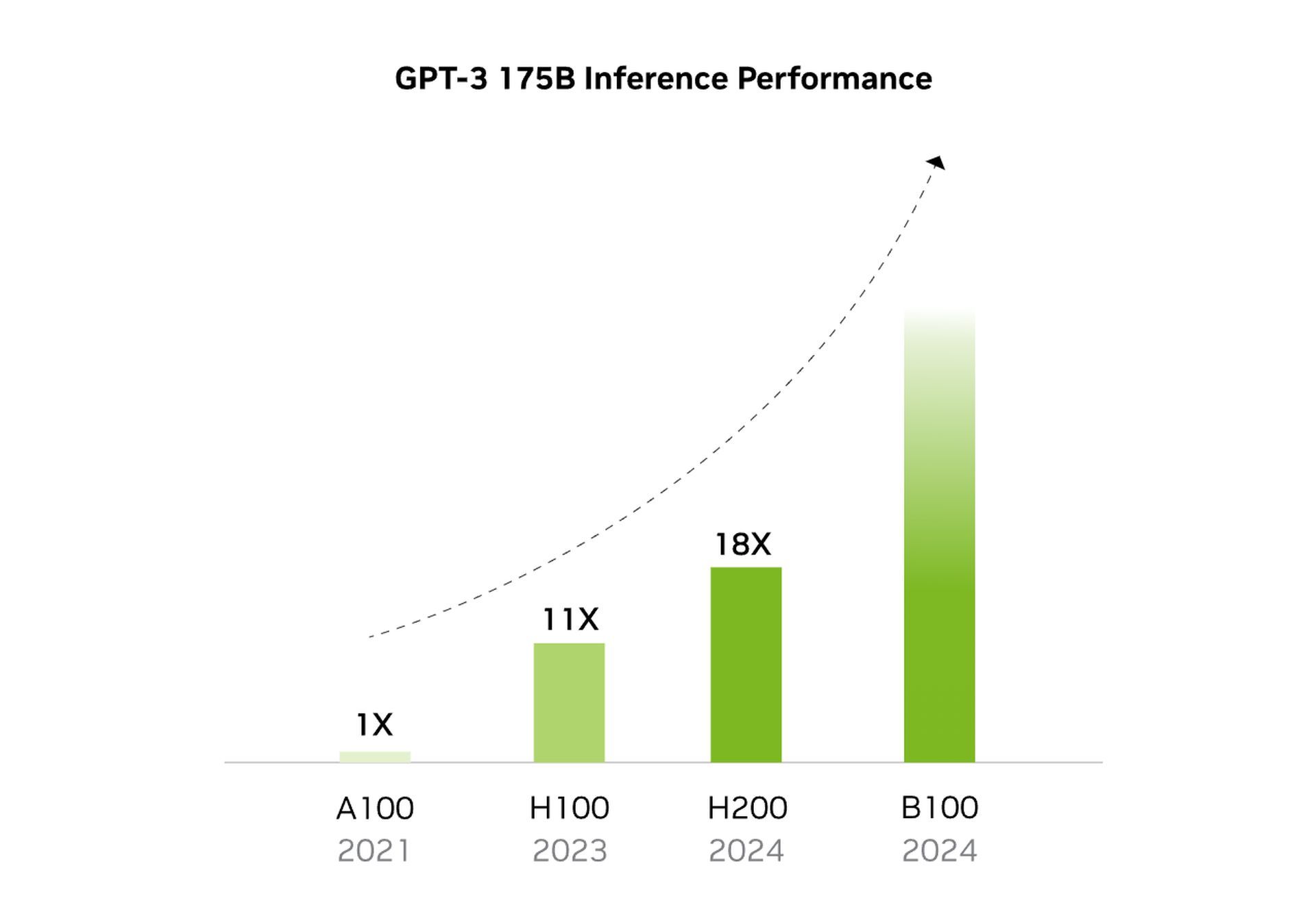

Nvidia H200 emerges as a groundbreaking chip, marking a significant leap in supercomputing technology. This latest innovation from Nvidia is tailored to power supercomputers that drive generative AI models, showcasing the prowess of the Nvidia Hopper architecture. Central to this platform is the Nvidia H200 Tensor Core GPU, which brings advanced memory capabilities, setting a new standard in handling voluminous data for both generative AI and high-performance computing tasks.

Nvidia’s recent press release describes the H200 as the first GPU to use HBM3e, a faster and larger memory that accelerates generative AI and large language models. The advance is not limited to AI; it also benefits scientific computing within high-performance computing (HPC) workloads. The Nvidia H200 provides 141GB of memory with a bandwidth of 4.8 terabytes per second. That is nearly double the capacity and 2.4 times the bandwidth of its predecessor, the Nvidia A100.

What does Nvidia H200 offer?

Let’s break down the main purpose and features of the Nvidia H200.

Better performance and memory

The Nvidia H200, built on the Nvidia Hopper architecture, is the first GPU to offer 141 gigabytes of HBM3e memory with a bandwidth of 4.8 terabytes per second. That is nearly double the capacity of the Nvidia H100 Tensor Core GPU, with 1.4X more memory bandwidth. The H200 speeds up generative AI and large language models (LLMs) while also advancing scientific computing for high-performance computing (HPC) workloads. It does so with improved energy efficiency and a lower total cost of ownership.
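The ratios quoted above can be checked with simple arithmetic. The sketch below is illustrative only: the H200 figures come from this article, while the H100 and A100 baselines (80GB at 3.35TB/s and 80GB at 2.0TB/s, respectively) are assumptions based on Nvidia's public datasheets for the 80GB SXM parts and do not appear in the article itself.

```python
# Memory capacity in GB and memory bandwidth in TB/s.
# H200 values are from the article; H100 and A100 values are assumed
# baselines (80GB SXM variants) used only for this comparison.
SPECS = {
    "H200": {"memory_gb": 141, "bandwidth_tbs": 4.8},
    "H100": {"memory_gb": 80, "bandwidth_tbs": 3.35},  # assumed baseline
    "A100": {"memory_gb": 80, "bandwidth_tbs": 2.0},   # assumed baseline
}


def ratio_vs(baseline: str, field: str) -> float:
    """Return the H200 value divided by the baseline GPU's value."""
    return SPECS["H200"][field] / SPECS[baseline][field]


if __name__ == "__main__":
    for baseline in ("H100", "A100"):
        capacity = ratio_vs(baseline, "memory_gb")
        bandwidth = ratio_vs(baseline, "bandwidth_tbs")
        print(f"H200 vs {baseline}: {capacity:.2f}x capacity, "
              f"{bandwidth:.2f}x bandwidth")
```

Under these assumed baselines, the capacity ratio comes out a little under 1.8x against both older parts, and the bandwidth ratio is roughly 1.4x against the H100 and 2.4x against the A100, which is consistent with the figures quoted in the text.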

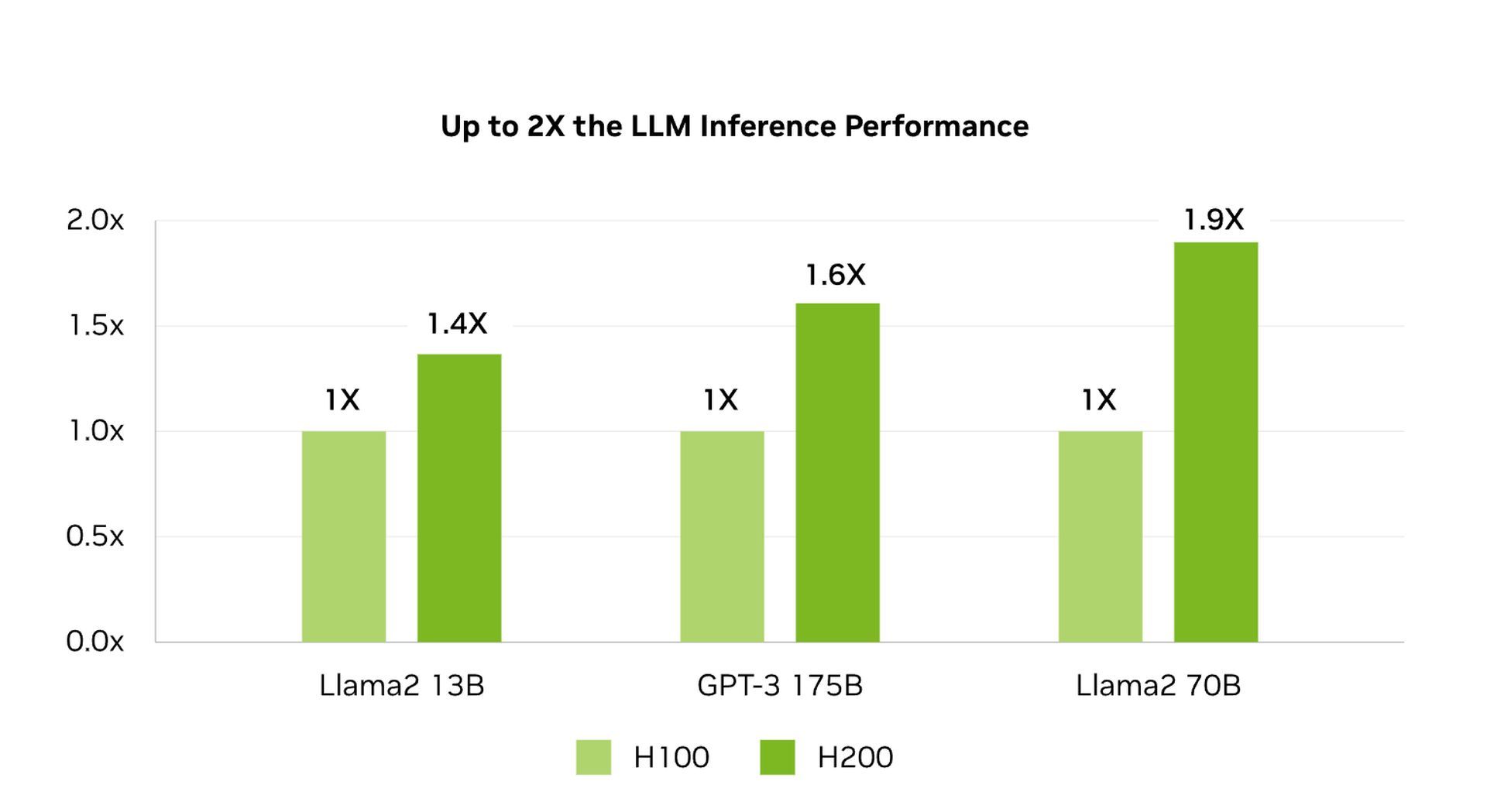

Elevating LLM inference speeds

Businesses are increasingly turning to LLMs for a wide array of inference needs. For these applications, an AI inference accelerator like the H200 is crucial. Nvidia positions it as offering the highest throughput at the lowest total cost of ownership (TCO), particularly when scaled for large user bases. The H200 delivers up to twice the inference speed of H100 GPUs when handling LLMs such as Llama 2.
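A rough way to see why memory capacity and bandwidth matter for LLM inference is a common back-of-the-envelope model: when generating one token at a time at small batch sizes, the GPU has to read roughly all of the model weights from memory for each token, so bandwidth divided by weight size gives an upper bound on tokens per second. This is not Nvidia's benchmark methodology. The 70-billion-parameter model size, the bytes-per-parameter values, and the H100 baseline (80GB, 3.35TB/s) are assumptions for illustration, and the model ignores the KV cache, batching, and compute limits.

```python
def weights_fit_in_memory(params_billion: float, bytes_per_param: float,
                          memory_gb: float) -> bool:
    """Check whether the raw weights alone fit in GPU memory (no KV cache)."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return weight_bytes <= memory_gb * 1e9


def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float,
                            bandwidth_tbs: float) -> float:
    """Bandwidth-bound upper limit on single-stream decode speed.

    Assumes every generated token requires streaming all weights once.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tbs * 1e12 / weight_bytes


if __name__ == "__main__":
    # Hypothetical 70B-parameter model stored in 8-bit (1 byte per parameter).
    for name, bandwidth in (("H200", 4.8), ("H100 (assumed)", 3.35)):
        limit = max_decode_tokens_per_s(70, 1, bandwidth)
        print(f"{name}: at most ~{limit:.1f} tokens/s per stream")

    # The same model in 16-bit (2 bytes per parameter) needs 140GB of weights.
    print("Fits in 141GB:", weights_fit_in_memory(70, 2, 141))
    print("Fits in 80GB:", weights_fit_in_memory(70, 2, 80))
```

In this simplified model the speed limit scales only with bandwidth, so it does not by itself account for an "up to twice" figure; real throughput also depends on factors the sketch leaves out, such as how large a batch fits in memory.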

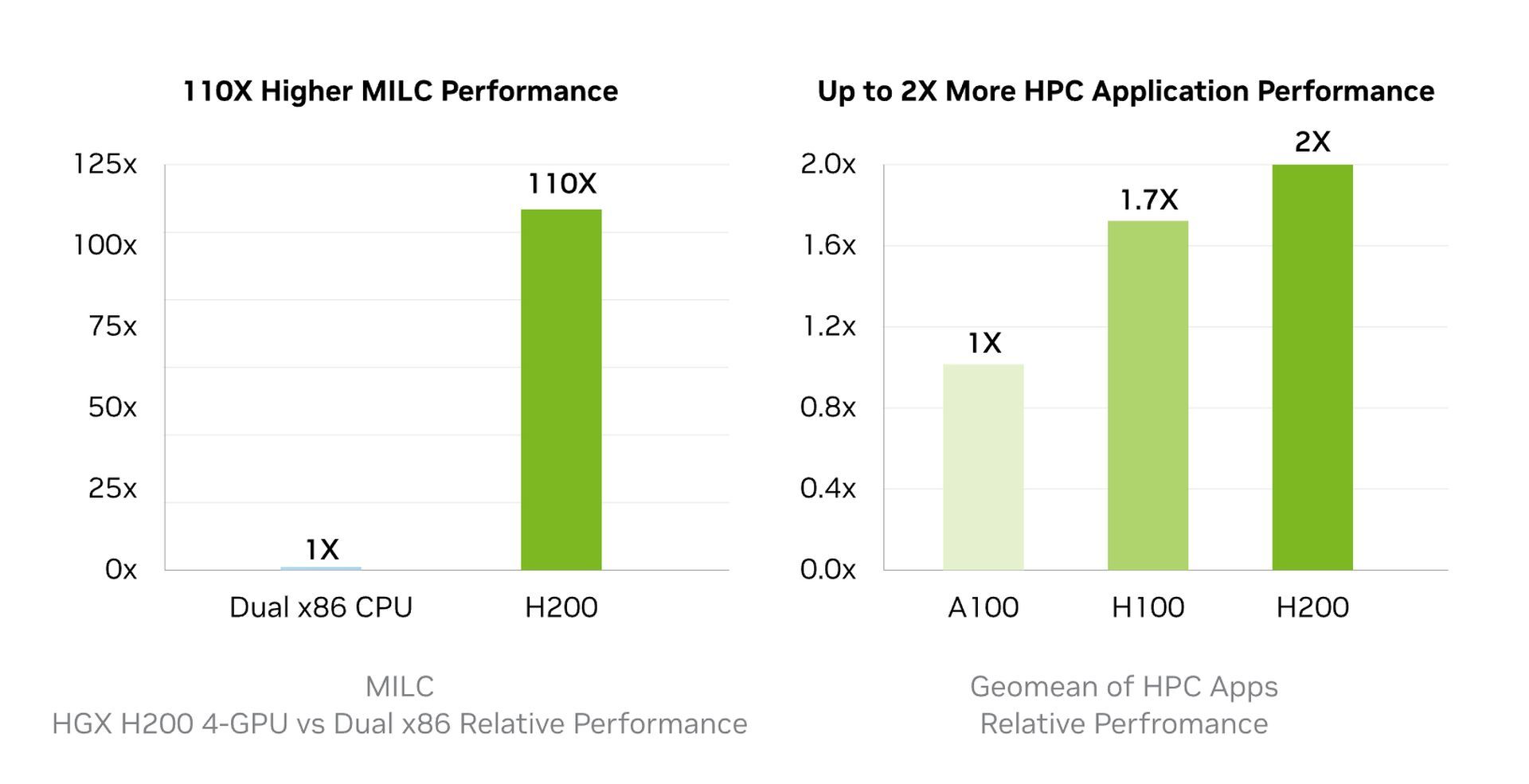

Advancing high-performance computing

The Nvidia H200 is not just about memory size; it’s about the speed and efficiency of data transfer, which is vital for high-performance computing applications. This GPU excels in memory-intensive tasks like simulations, scientific research, and AI, where its higher memory bandwidth plays a pivotal role. The H200 ensures efficient data access and manipulation, leading to up to 110 times faster results compared to traditional CPUs, a substantial improvement for complex processing tasks.
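The role of memory bandwidth in such workloads can be illustrated with the standard roofline model, which is not from Nvidia's announcement but is a widely used approximation: attainable throughput is the smaller of peak compute and arithmetic intensity (FLOPs per byte moved) times memory bandwidth. The sketch below uses the 34 TFLOPS FP64 and 4.8TB/s figures from this article; the example kernel (a streaming update that performs 2 FLOPs per 24 bytes of FP64 traffic) is a hypothetical chosen for illustration.

```python
def ridge_point(peak_tflops: float, bandwidth_tbs: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tbs


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_tbs: float) -> float:
    """Roofline estimate: min(peak compute, intensity * bandwidth)."""
    return min(peak_tflops, intensity * bandwidth_tbs)


if __name__ == "__main__":
    peak_fp64 = 34.0   # TFLOPS, from the article's spec table
    bandwidth = 4.8    # TB/s, from the article's spec table

    print(f"Ridge point: {ridge_point(peak_fp64, bandwidth):.2f} FLOP/byte")

    # Hypothetical streaming kernel a[i] = b[i] + s * c[i] in FP64:
    # 2 FLOPs per element, 3 * 8 = 24 bytes of memory traffic per element.
    triad_intensity = 2 / 24
    estimate = attainable_tflops(triad_intensity, peak_fp64, bandwidth)
    print(f"Streaming kernel: ~{estimate:.2f} TFLOPS attainable")
```

Kernels whose arithmetic intensity falls below the ridge point are limited by bandwidth rather than compute in this model, which is why a bandwidth increase translates fairly directly into speedups for them.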

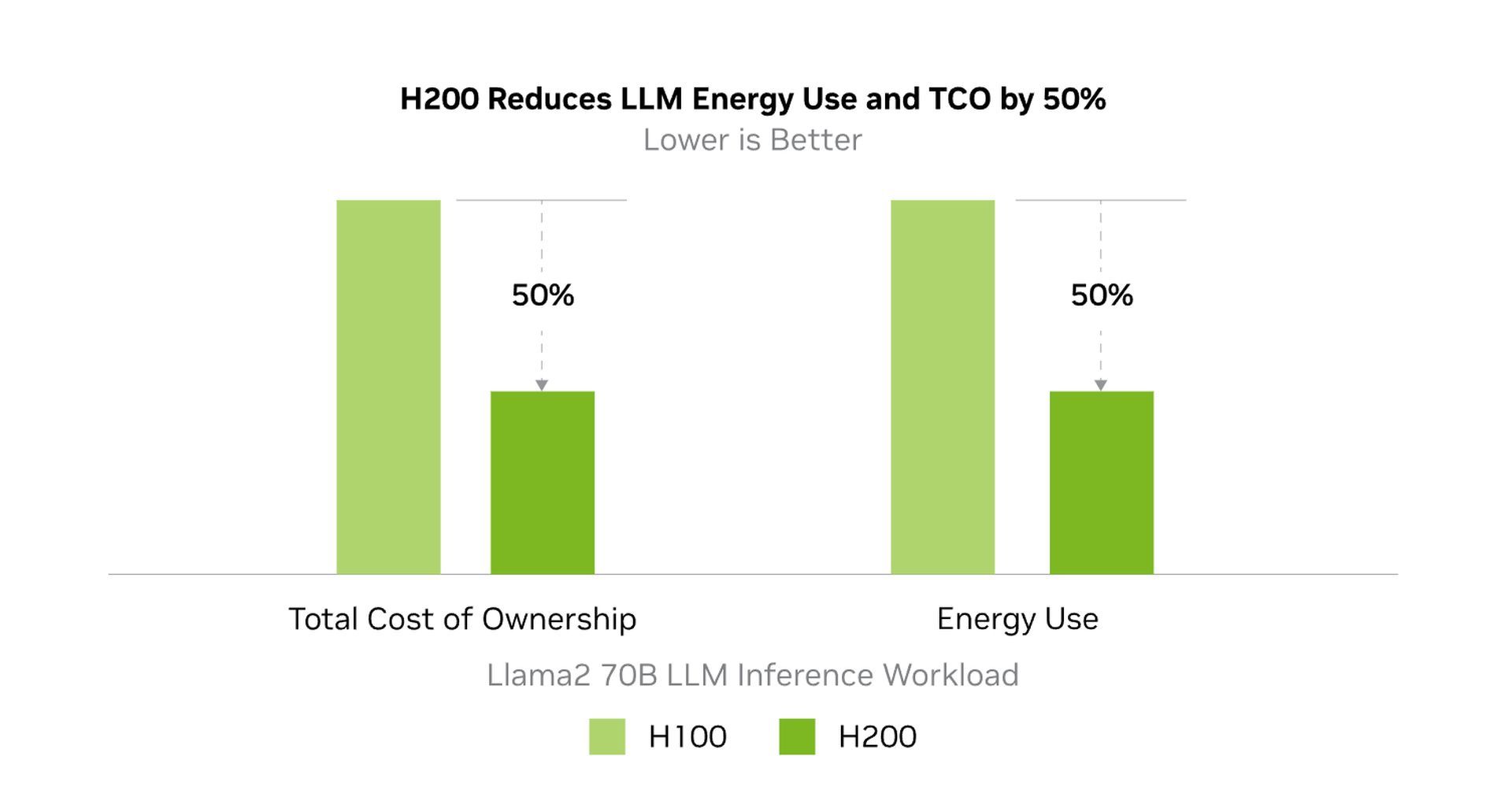

Enhancing efficiency and reducing costs

Nvidia’s H200 introduces a new era in energy efficiency and total cost of ownership (TCO). This advanced technology maintains the same power profile as the H100 while delivering significantly enhanced performance. The result is a new generation of AI factories and supercomputing systems that are not just faster, but also more eco-friendly. This blend of speed and sustainability offers a competitive advantage, driving progress in both the AI and scientific communities.

Sustained growth through continuous innovation

At the heart of the H200 is the Nvidia Hopper architecture, a testament to Nvidia’s commitment to perpetual innovation. This architecture represents a significant performance advancement over previous models. It continues to evolve, as seen with the recent enhancements to the H100, including the release of powerful open-source libraries like Nvidia TensorRT-LLM™. The debut of the H200 further elevates this trajectory, promising not just immediate performance leadership but also sustained gains through ongoing software improvements. Investing in the H200 equates to securing a place at the forefront of technological advancement, both now and in the future.

Streamlining AI with enterprise-grade software

The combination of Nvidia AI Enterprise and the Nvidia H200 redefines the landscape of AI development and deployment. This pairing simplifies the creation of AI-ready platforms, significantly accelerating the development and deployment of production-ready applications in fields like generative AI, computer vision, and speech AI. More than just enhancing speed, this duo provides enterprise-grade security, manageability, and stability. The synergy between Nvidia AI Enterprise and the H200 allows businesses to extract actionable insights more swiftly, translating into tangible business value at an accelerated pace.

Specs of Nvidia H200

| Form Factor | H200 SXM¹ |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS² |
| BFLOAT16 Tensor Core | 1,979 TFLOPS² |
| FP16 Tensor Core | 1,979 TFLOPS² |
| FP8 Tensor Core | 3,958 TFLOPS² |
| INT8 Tensor Core | 3,958 TFLOPS² |
| GPU Memory | 141GB |
| GPU Memory Bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each |
| Form Factor | SXM |
| Interconnect | Nvidia NVLink®: 900GB/s; PCIe Gen5: 128GB/s |
| Server Options | Nvidia HGX™ H200 partner and Nvidia-Certified Systems™ with 4 or 8 GPUs |
| Nvidia AI Enterprise | Add-on |

Nvidia H200 release date

Set for release in the second quarter of 2024, the Nvidia H200 will be accessible through global system manufacturers and cloud service providers. Leading the charge, Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are poised to be among the first to offer H200-based instances starting next year. This widespread availability marks a significant milestone in the distribution of advanced GPU technology.

Nvidia and Foxconn unite to create AI factories

Equipped with Nvidia’s NVLink and NVSwitch high-speed interconnects, the HGX H200 stands out in its class. It delivers unparalleled performance across a variety of application workloads, including both training and inference for large language models exceeding 175 billion parameters. An eight-way HGX H200 configuration boasts over 32 petaflops of FP8 deep learning compute and 1.1TB of high-bandwidth memory, setting a new standard in generative AI and high-performance computing, as per Nvidia’s announcement.
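The eight-way totals follow from multiplying the per-GPU figures in the spec table. The short sketch below assumes simple linear scaling across GPUs, which is how aggregate figures of this kind are usually quoted; the function name is illustrative.

```python
# Per-GPU figures taken from the article's spec table.
H200_MEMORY_GB = 141
H200_FP8_TFLOPS = 3958


def hgx_totals(gpu_count: int) -> dict:
    """Aggregate memory (TB) and FP8 compute (PFLOPS), assuming linear scaling."""
    return {
        "memory_tb": gpu_count * H200_MEMORY_GB / 1000,
        "fp8_pflops": gpu_count * H200_FP8_TFLOPS / 1000,
    }


if __name__ == "__main__":
    totals = hgx_totals(8)
    print(f"Memory: {totals['memory_tb']:.3f} TB")
    print(f"FP8 compute: {totals['fp8_pflops']:.3f} PFLOPS")
```

Eight GPUs give about 1.13TB of memory, matching the 1.1TB figure. The FP8 product from the table values is about 31.7 petaflops, slightly under the quoted 32, so the headline number presumably involves some rounding of the per-GPU figure.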

Nvidia’s GPUs are increasingly pivotal in generative AI model development and deployment. Designed to handle massively parallel computations, these GPUs are well suited to tasks such as image generation and natural language processing. Because they perform many calculations concurrently, Nvidia’s GPUs significantly accelerate both the training and the operation of generative AI models.

Featured image credit: Nvidia