DALL-E Mini has entered the AI image generation race as an unexpected contender, with its own distinct capabilities and limitations. In a comparative analysis, DALL-E Mini, particularly the version developed by Craiyon, shows considerable potential, especially in image quality and the simplicity of its prompts.

Over the last year in particular, artificial intelligence has been changing our lives. Bots now handle repetitive tasks that used to cost us time and effort, and many sectors have started to benefit from this.

This technology, which spread rapidly with the AI wave set off by OpenAI, has introduced us to generative models for many purposes, such as text-to-image and text-to-sound. Although Midjourney’s image-generation technology has been criticized by artists, image-generation tools let you create almost any image you can imagine, even without artistic talent or training.

What is DALL-E mini?

DALL-E mini is a text-to-image AI model created by Boris Dayma. Trained on a large dataset of paired text and images, it can generate photorealistic images from text descriptions. The model is still under development, but it has already produced some impressive results.

DALL-E mini is a powerful tool that can be used for a variety of purposes, including:

- Creating illustrations for books, articles, and websites

- Generating concept art for video games and movies

- Designing product prototypes

- Visualizing abstract ideas

For example, it can generate images that are both photorealistic and creative, and it can work from complex text descriptions such as “A cat wearing a top hat and riding a bicycle”.

Boris Dayma explains DALL-E mini on the Weights & Biases YouTube channel.

How does DALL-E mini compare to DALL-E 2?

Comparing a self-hosted DALL-E Mini with its counterpart, DALL-E 2, reveals distinct advantages and trade-offs. One significant aspect is the ability to fine-tune the model for specific needs. Being able to train DALL-E Mini on custom data, for example in other languages or specialized domains, is a critical advantage. This ability to “steer” the model toward specific outputs, such as a “lawyer robot” or other nuanced imagery, stands out as a powerful tool.

However, this flexibility comes at a cost, notably in infrastructure. DALL-E 2 is used through a simple network request to OpenAI’s servers, whereas a self-hosted DALL-E Mini needs substantial local compute: a capable GPU or a powerful multi-CPU server for efficient performance.
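To make the contrast concrete, here is roughly what “a simple network request” to DALL-E 2 involves. This is a minimal sketch assuming OpenAI’s public Images API (`POST https://api.openai.com/v1/images/generations` with `"model": "dall-e-2"`); the helper name `build_dalle2_request` and the placeholder key are illustrative, and the request is only built, not sent.

```python
import json
import urllib.request

# OpenAI's image generation endpoint (assumed here); DALL-E 2 is chosen via "model".
OPENAI_IMAGES_URL = "https://api.openai.com/v1/images/generations"


def build_dalle2_request(prompt: str, api_key: str, n: int = 1,
                         size: str = "512x512") -> urllib.request.Request:
    """Build, but do not send, a POST request asking DALL-E 2 for `n` images.

    `build_dalle2_request` is a hypothetical helper name for this sketch.
    """
    body = json.dumps({
        "model": "dall-e-2",
        "prompt": prompt,
        "n": n,
        "size": size,  # DALL-E 2 accepts 256x256, 512x512 or 1024x1024
    }).encode("utf-8")
    return urllib.request.Request(
        OPENAI_IMAGES_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Usage with a placeholder key; urllib.request.urlopen(req) would actually send it.
req = build_dalle2_request("a lawyer robot", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

All of the heavy computation happens on OpenAI’s side, so the client needs nothing more than an HTTP library. A self-hosted DALL-E Mini, by contrast, has to run its encoders, decoder and VQGAN on local hardware.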

At the core of DALL-E Mini is a sequence-to-sequence decoder network built on BART (Bidirectional and Auto-Regressive Transformers). The pipeline comprises four components:

- Image-to-token encoder

- Text encoder

- Seq2seq BART decoder

- Tokens-to-image decoder
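The four components can be sketched as a chain of functions. This is a toy illustration, not DALL-E Mini’s code: every function below is a hypothetical stand-in with trivial logic, and the sizes (a 16x16 grid of image tokens drawn from a 16,384-entry codebook) are assumptions chosen for illustration. Only the order of the stages mirrors the list above.

```python
from typing import List

# Illustrative sizes (assumptions for this sketch, not taken from the article).
GRID = 16               # image tokens per row/column
CODEBOOK_SIZE = 16_384  # number of distinct image tokens


def image_to_tokens(pixels: List[List[int]]) -> List[int]:
    """Stand-in for the image-to-token (VQGAN) encoder; only needed during training."""
    return [p % CODEBOOK_SIZE for row in pixels for p in row]


def text_encoder(prompt: str) -> List[float]:
    """Stand-in for the BART text encoder: one toy value per word."""
    return [(sum(map(ord, word)) % 100) / 100 for word in prompt.split()]


def seq2seq_decoder(text_embedding: List[float]) -> List[int]:
    """Stand-in for the BART decoder: emits GRID*GRID image tokens that depend on the text."""
    seed = int(sum(text_embedding) * 1000)
    return [(seed + i * 7919) % CODEBOOK_SIZE for i in range(GRID * GRID)]


def tokens_to_image(tokens: List[int]) -> List[List[int]]:
    """Stand-in for the tokens-to-image (VQGAN) decoder: reshapes tokens into a 2-D grid."""
    return [tokens[r * GRID:(r + 1) * GRID] for r in range(GRID)]


def generate(prompt: str) -> List[List[int]]:
    """Inference path: text -> embedding -> image tokens -> image grid."""
    return tokens_to_image(seq2seq_decoder(text_encoder(prompt)))


grid = generate("A cat wearing a top hat and riding a bicycle")
print(len(grid), len(grid[0]))  # 16 16
```

In the real model each stand-in is a trained network, and the final stage produces pixels rather than a grid of token indices.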

During training, DALL-E Mini uses approximately 15 million caption-image pairs to train its key component, the BART seq2seq decoder. A VQGAN encoder converts each image into a sequence of image tokens, and a BART encoder converts each caption into text embeddings. The seq2seq decoder then learns to predict the image-token sequence from these embeddings.
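The training step described above amounts to next-token prediction with teacher forcing: the decoder receives the image tokens shifted right by one position and is scored with cross-entropy against the unshifted tokens. The sketch below shows that objective in plain Python, assuming a hypothetical `predict_logits` callable in place of the real BART decoder and a tiny toy vocabulary; it illustrates the loss, not DALL-E Mini’s actual training code.

```python
import math
from typing import Callable, List, Sequence

VOCAB = 8    # toy image-token vocabulary (assumption for this sketch)
BOS = VOCAB  # hypothetical start token placed outside the image-token range

Logits = List[float]
Predictor = Callable[[List[float], List[int]], List[Logits]]


def shift_right(image_tokens: Sequence[int]) -> List[int]:
    """Teacher forcing: the decoder sees [BOS, t0, ..., t_{n-2}] and must predict [t0, ..., t_{n-1}]."""
    return [BOS] + list(image_tokens[:-1])


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], using max subtraction for numerical stability."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def sequence_loss(predict_logits: Predictor, text_embedding: List[float],
                  image_tokens: Sequence[int]) -> float:
    """Average next-token loss for one caption-image pair."""
    decoder_inputs = shift_right(image_tokens)
    all_logits = predict_logits(text_embedding, decoder_inputs)  # one logit vector per position
    losses = [cross_entropy(lg, t) for lg, t in zip(all_logits, image_tokens)]
    return sum(losses) / len(losses)


def uniform(text_embedding: List[float], inputs: List[int]) -> List[Logits]:
    """A predictor that knows nothing: equal logits for every token at every position."""
    return [[0.0] * VOCAB for _ in inputs]


# An uninformed predictor scores log(VOCAB) per token.
print(round(sequence_loss(uniform, [0.1, 0.2], [3, 1, 4]), 4))  # 2.0794
```

Training pushes this average loss down across all caption-image pairs, so the decoder learns which image tokens tend to follow a given caption.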

At inference time, only a text prompt is needed: the BART text encoder turns the prompt into embeddings, the seq2seq decoder predicts a sequence of image tokens from them, and the tokens-to-image (VQGAN) decoder converts those tokens into images.
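The decoding step can be sketched as an autoregressive loop: starting from a start token, the decoder scores every possible next image token, one is sampled, and it is appended to the context before the next step. Top-k sampling is used here as an assumed, generic strategy; DALL-E Mini’s real decoding settings may differ, and `next_logits` is a hypothetical stand-in for the BART decoder.

```python
import math
import random
from typing import Callable, List, Sequence

NextLogits = Callable[[List[float], List[int]], List[float]]


def top_k_sample(logits: Sequence[float], k: int, rng: random.Random,
                 temperature: float = 1.0) -> int:
    """Sample one token index from the k highest-scoring logits."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    scaled = [logits[i] / temperature for i in top]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalized softmax over the top k
    return rng.choices(top, weights=weights, k=1)[0]


def generate_image_tokens(next_logits: NextLogits, text_embedding: List[float],
                          num_tokens: int, bos: int, k: int = 50, seed: int = 0) -> List[int]:
    """Predict image tokens one position at a time, feeding each choice back in."""
    rng = random.Random(seed)
    context = [bos]
    for _ in range(num_tokens):
        logits = next_logits(text_embedding, context)  # scores for the next position
        context.append(top_k_sample(logits, k, rng))
    return context[1:]  # drop BOS; these tokens would go to the VQGAN decoder


def toy_next_logits(emb: List[float], context: List[int]) -> List[float]:
    """Toy decoder over 5 tokens that prefers token len(context) % 5 at each step."""
    return [5.0 if i == len(context) % 5 else 0.0 for i in range(5)]


print(generate_image_tokens(toy_next_logits, [0.3], num_tokens=5, bos=5, k=1))  # [1, 2, 3, 4, 0]
```

Setting `k=1` reduces the loop to greedy decoding; a larger `k` or higher `temperature` adds variety, which is how one prompt can yield several different images.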

Impressive performance

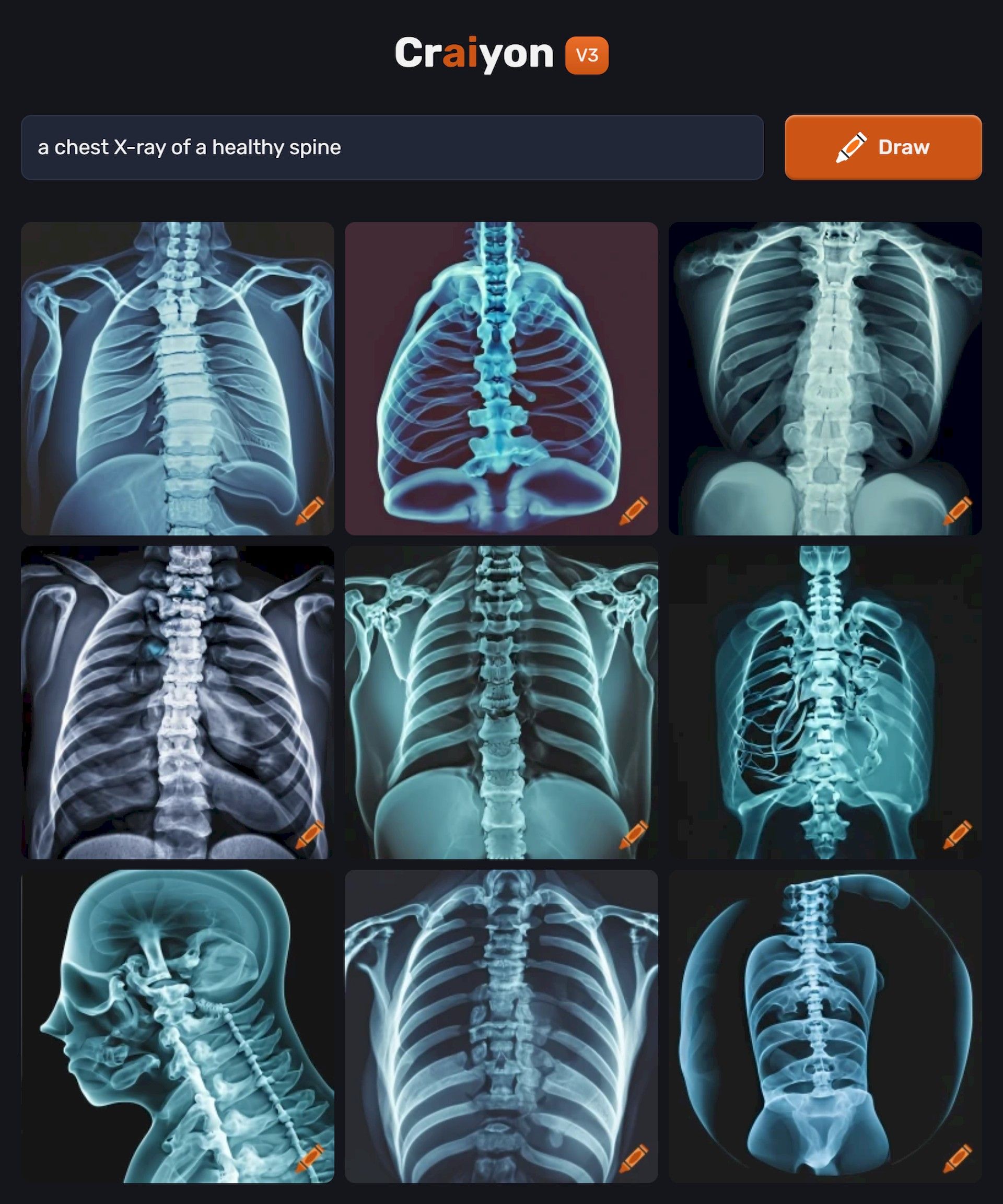

DALL-E Mini’s performance is particularly interesting when tested against prompts of varying complexity. With a relatively simple prompt such as “girl playing golf”, it struggles to place the hands correctly relative to the golf club. With a more specialized healthcare prompt such as “a chest X-ray of a healthy spine”, it produces impressive, “almost” anatomically accurate results. Together, these examples show both the model’s strengths and its limitations.

How to use DALL-E mini for free?

There are two main ways to use DALL-E mini for free:

- Use the Craiyon website: This is the easiest option. You do not need to create an account, but you will need to complete a CAPTCHA each time you generate an image.

- Use the DALL-E mini API: If you are a developer, you can use the DALL-E mini API to integrate the model into your own applications. The API is currently in beta, but it is free to use.

Here are the steps to generate an image with DALL-E mini on the Craiyon website:

- Go to the Craiyon website or the DALL-E mini page on the Hugging Face Hub

- Enter a text description of the image you want to generate

- Click the “Generate” button

- The model will generate four images based on your text description

- You can save the images to your computer or share them with others

So if you need a free image generation tool, DALL-E mini may well meet your needs. As we enter the last months of 2023, it is exciting to watch how AI technologies are developing, and it will be interesting to see what the coming months bring.

Featured image credit: Joanna Kosinska/Unsplash.