Meta has unveiled the Segment Anything Model (SAM), a new way to create high-quality masks for image segmentation.

Reminder: Image segmentation is a fundamental task in computer vision that aims to partition an image into regions corresponding to different objects or semantic categories. It has many applications, such as object detection, scene understanding, image editing, and video analysis.

However, image segmentation is also a challenging problem, especially when dealing with complex scenes that contain multiple objects with varying shapes, sizes, and appearances. Moreover, most existing image segmentation methods require large amounts of annotated data for training, which can be costly and time-consuming to obtain. Meta wants to solve this issue with the SAM model.

SAM model: What is Meta’s new Segment Anything Model?

Segment Anything Model (SAM) is a new and powerful artificial intelligence model that can segment any object in an image or video with high quality and efficiency. Segmentation is the process of separating an object from its background or other objects and creating a mask that outlines its shape and boundaries. With the SAM model, editing, compositing, tracking, recognition, and analysis tasks can become much easier.

SAM is different from other segmentation models in several ways, such as:

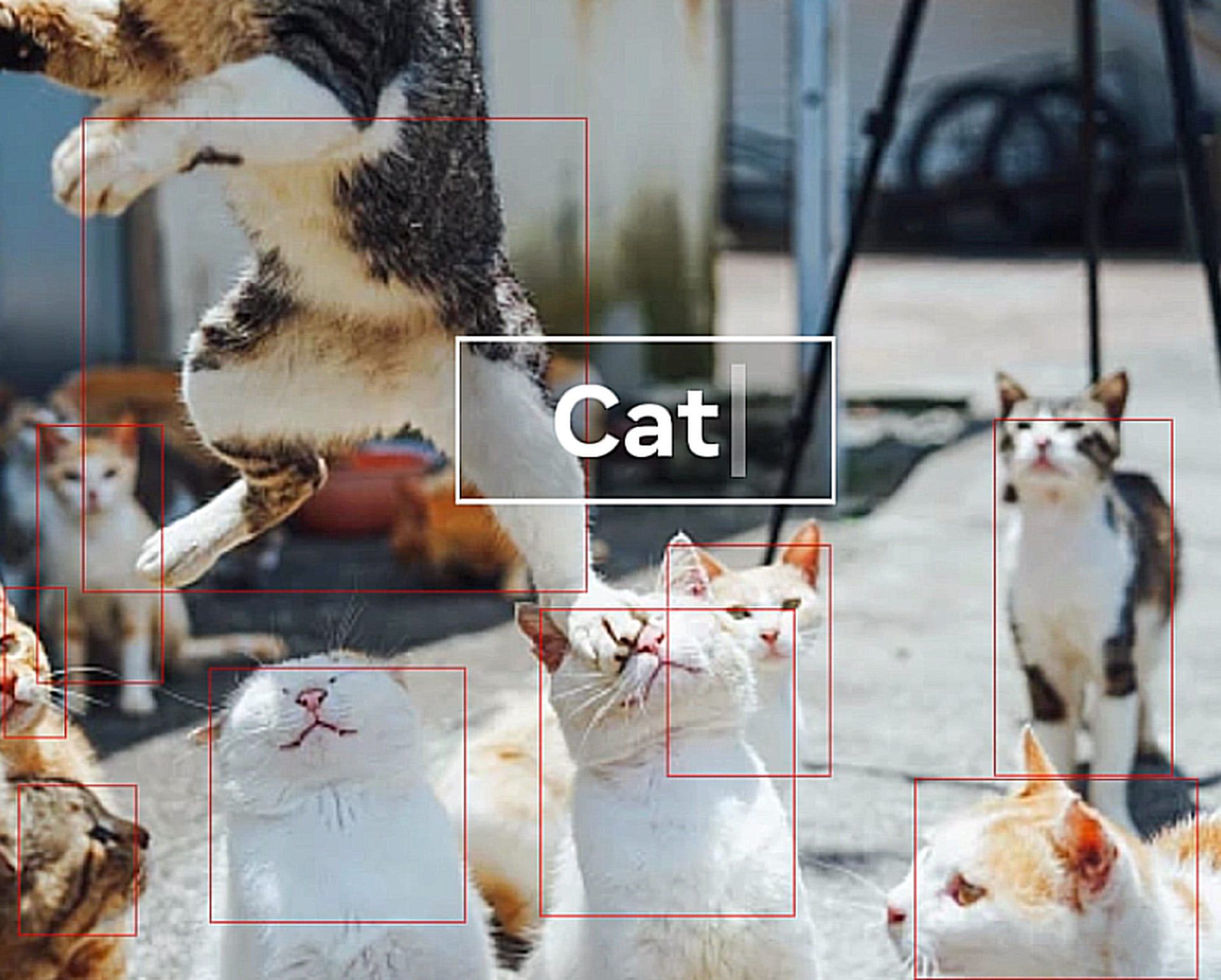

- SAM is promptable, which means it can take various input prompts, such as points or boxes, to specify what object to segment. For example, you can draw a box around a person’s face, and the Segment Anything Model will generate a mask for the face. You can also give multiple prompts to segment multiple objects at once. The SAM model can handle complex scenes with occlusions, reflections, and shadows.

- SAM is trained on a massive dataset of 11 million images and 1.1 billion masks, which is the largest segmentation dataset to date. This dataset covers a wide range of objects and categories, such as animals, plants, vehicles, furniture, food, and more. SAM can segment objects that it has never seen before, thanks to its generalization ability and data diversity.

- SAM has strong zero-shot performance on a variety of segmentation tasks. Zero-shot means that SAM can segment objects without any additional training or fine-tuning on a specific task or domain. For example, SAM can segment faces, hands, hair, clothes, and accessories without any prior knowledge or supervision. SAM can also segment objects in different modalities, such as infrared images or depth maps.
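To make the idea of a prompt concrete, here is a minimal Python sketch of how point and box prompts can be packaged. It assumes the conventions of the `SamPredictor.predict` method in Meta’s segment-anything repository: point coordinates in (x, y) pixels, a label of 1 for a foreground point to include and 0 for a background point to exclude, and a box given as (x0, y0, x1, y1). The `SegmentationPrompt` class itself is a hypothetical helper, not part of that package.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class SegmentationPrompt:
    """Hypothetical container for one SAM-style prompt (illustration only)."""

    points: List[Tuple[int, int]] = field(default_factory=list)  # (x, y) in pixels
    labels: List[int] = field(default_factory=list)  # 1 = include, 0 = exclude
    box: Optional[Tuple[int, int, int, int]] = None  # (x0, y0, x1, y1)

    def validate(self, width: int, height: int) -> None:
        """Raise ValueError if the prompt cannot describe an object in a width x height image."""
        if not self.points and self.box is None:
            raise ValueError("a prompt needs at least one point or a box")
        if len(self.points) != len(self.labels):
            raise ValueError("each point needs exactly one label")
        if any(label not in (0, 1) for label in self.labels):
            raise ValueError("labels must be 1 (foreground) or 0 (background)")
        for x, y in self.points:
            if not (0 <= x < width and 0 <= y < height):
                raise ValueError(f"point {(x, y)} lies outside the image")
        if self.box is not None:
            x0, y0, x1, y1 = self.box
            if not (0 <= x0 < x1 <= width and 0 <= y0 < y1 <= height):
                raise ValueError("box must be (x0, y0, x1, y1) inside the image")

    def as_predict_kwargs(self) -> dict:
        """Keyword arguments shaped like SamPredictor.predict's inputs (as plain lists)."""
        kwargs = {}
        if self.points:
            kwargs["point_coords"] = [list(p) for p in self.points]
            kwargs["point_labels"] = list(self.labels)
        if self.box is not None:
            kwargs["box"] = list(self.box)
        return kwargs


# Box around a face, plus one background click on the hair to keep it out of the mask.
face = SegmentationPrompt(points=[(210, 95)], labels=[0], box=(150, 60, 290, 230))
face.validate(width=640, height=480)
print(face.as_predict_kwargs())
```

The real method expects NumPy arrays rather than lists, so in practice each value would be wrapped in `np.array(...)`. Several points in one prompt refine a single object; segmenting several objects means issuing one such prompt per object.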

The SAM model achieves impressive results on various image segmentation benchmarks, such as COCO. SAM also matches or outperforms prior fully supervised methods on several zero-shot segmentation tasks, such as segmenting logos, text, faces, or sketches, demonstrating its versatility and robustness across different domains and scenarios.

In the future: The Segment Anything Model (SAM model) project is still in its early days. According to Meta, these are some of the future applications of the Segment Anything Model:

- Future AR glasses may employ SAM to recognize commonplace objects and provide helpful reminders and instructions.

- SAM could also have an impact on many other fields, such as agriculture and biology, where it might one day help farmers and scientists.

The SAM model could be a breakthrough in computer vision and artificial intelligence research. It demonstrates the potential of foundation models for vision: models that learn from large-scale data and transfer to new tasks and domains.

Segment Anything Model (SAM model) features

Here are some of the SAM model’s capabilities:

- Using the SAM model, users can quickly and easily segment objects by clicking individual points to include in or exclude from the segmentation. A bounding box can also be used as a prompt for the model.

- When it is ambiguous which object is meant, the SAM model can produce multiple valid masks, a critical ability for solving segmentation in the real world.

- The Segment Anything Model can also automatically find and mask every object in an image.

- After precomputing the image embedding, the Segment Anything Model can provide a segmentation mask for any prompt instantly, enabling real-time interaction with the model.
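Outside the browser demo, the same features are exposed by the Python package in Meta’s segment-anything GitHub repository. The sketch below follows the names given in that repository’s README (`sam_model_registry`, `SamPredictor.set_image` and `predict`, and `SamAutomaticMaskGenerator.generate`); check them against the version you install. The local checkpoint path, the choice of the smaller `vit_b` model, the example coordinates and the `pick_best_mask` helper are assumptions made for illustration. The package imports sit inside the functions so the file loads even where segment-anything is not installed.

```python
from typing import List, Sequence, Tuple


def pick_best_mask(masks: Sequence, scores: Sequence[float]) -> Tuple[int, object]:
    """Hypothetical helper: return (index, mask) of the highest-scoring candidate."""
    if len(masks) == 0 or len(masks) != len(scores):
        raise ValueError("need at least one mask and exactly one score per mask")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, masks[best]


def segment_interactively(image, checkpoint: str = "sam_vit_b_01ec64.pth"):
    """Point + box prompting on an H x W x 3 uint8 RGB array (needs segment-anything)."""
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry["vit_b"](checkpoint=checkpoint)  # assumed local checkpoint
    predictor = SamPredictor(sam)
    predictor.set_image(image)  # the expensive image embedding is computed once here

    # One click to include (label 1), one click to exclude (label 0), and a bounding box.
    masks, scores, _logits = predictor.predict(
        point_coords=np.array([[320, 240], [400, 260]]),
        point_labels=np.array([1, 0]),
        box=np.array([250, 150, 450, 380]),
        multimask_output=True,  # return several candidates in case the prompt is ambiguous
    )
    return pick_best_mask(masks, scores)


def segment_everything(image, checkpoint: str = "sam_vit_b_01ec64.pth") -> List[dict]:
    """Automatic mode: one record per object found (needs segment-anything)."""
    from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

    sam = sam_model_registry["vit_b"](checkpoint=checkpoint)
    # Each record holds a boolean mask under "segmentation" plus metadata such as "area".
    return SamAutomaticMaskGenerator(sam).generate(image)
```

`set_image` is where the heavy embedding work happens; after that, repeated `predict` calls with new clicks are cheap, which is what makes the real-time interaction described above possible.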

Impressive, isn’t it? So what is the technology behind it?

How does the SAM model work?

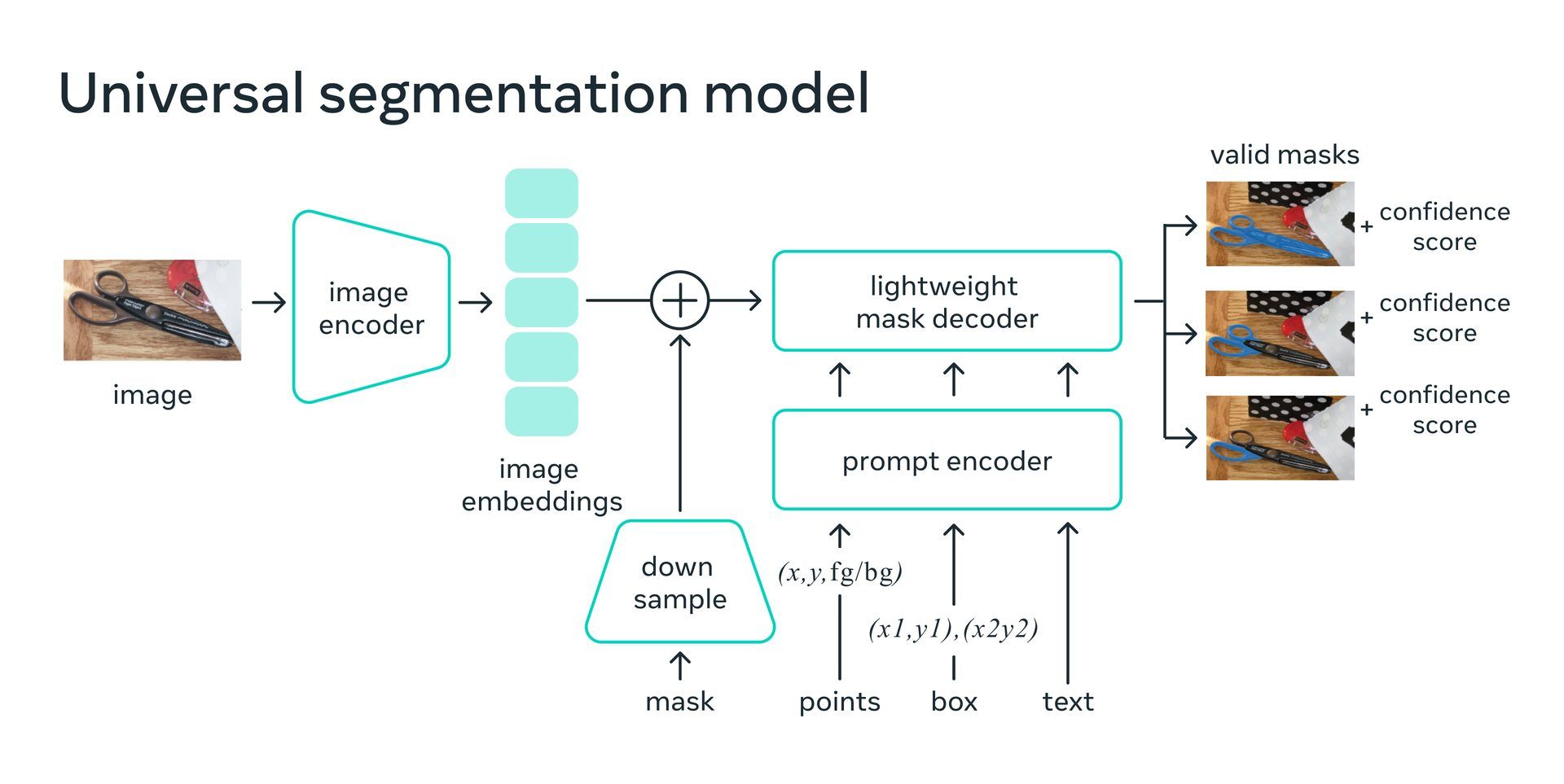

One of the most intriguing discoveries in NLP and, more recently, in computer vision is the use of “prompting” approaches to enable zero-shot and few-shot learning on novel datasets and tasks using foundation models. Meta drew its inspiration from this line of work.

The Meta AI team trained the Segment Anything Model to return a valid segmentation mask for any prompt: foreground/background points, a rough box or mask, freeform text, or any other input indicating what to segment in an image. “Valid” simply means that, even when a prompt is ambiguous, the output should be a reasonable mask for at least one of the objects the prompt might refer to (for example, a point on a shirt could indicate either the shirt or the person wearing it). This promptable segmentation task is used both to pretrain the model and to solve general downstream segmentation problems through prompting.
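According to the SAM paper, the model handles this ambiguity by predicting several candidate masks (three) for a single prompt; during training, only the candidate with the lowest loss against the ground-truth mask is backpropagated, and a predicted IoU score is used to rank the candidates. The pure-Python toy below shows just that selection step. Masks are sets of pixel coordinates, `1 - IoU` stands in for the focal and dice losses the paper actually uses, and all names are hypothetical.

```python
from typing import FrozenSet, List, Tuple

Pixel = Tuple[int, int]
Mask = FrozenSet[Pixel]


def rect(x0: int, y0: int, x1: int, y1: int) -> Mask:
    """Rectangular toy mask covering x0 <= x < x1, y0 <= y < y1."""
    return frozenset((x, y) for x in range(x0, x1) for y in range(y0, y1))


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two pixel sets (1.0 when both are empty)."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def min_over_candidates_loss(candidates: List[Mask], target: Mask) -> Tuple[int, float]:
    """Score every candidate and keep only the best one, as in multi-mask training.

    Stand-in loss: 1 - IoU (the SAM paper uses a focal + dice combination instead).
    """
    losses = [1.0 - iou(c, target) for c in candidates]
    best = min(range(len(losses)), key=losses.__getitem__)
    return best, losses[best]


# One click on a shirt: candidate 0 = part of the shirt, 1 = whole shirt, 2 = whole person.
shirt = rect(2, 4, 6, 8)
person = rect(1, 0, 7, 10)
candidates = [rect(3, 5, 5, 7), shirt, person]

# If the annotator meant the shirt, only candidate 1 would be trained toward that mask...
print(min_over_candidates_loss(candidates, shirt))   # (1, 0.0)
# ...and if they meant the person, only candidate 2 would be.
print(min_over_candidates_loss(candidates, person))  # (2, 0.0)
```

Because the other candidates are not pushed toward the same target, each output slot is free to specialize (for instance, part, object, or whole), which is why one click can yield several plausible masks.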

Meta noted that the pretraining task and interactive data collection imposed specific constraints on the model design. In particular, annotators needed to be able to use the Segment Anything Model interactively, in real time, in a browser, and on a CPU. Although meeting this runtime requirement forces some trade-off between quality and speed, Meta found that a simple design produces satisfactory results.

Under the hood, a heavyweight image encoder computes a one-time embedding for the image, while a lightweight prompt encoder converts any prompt into an embedding vector on the fly. A lightweight mask decoder then combines these two sources of information to predict segmentation masks. Once the image embedding has been computed, SAM can produce a mask for any prompt in a web browser in about 50 ms.
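The reason a prompt costs only milliseconds is that the expensive work is split off and done once per image. The toy class below mimics that structure in plain Python: an “image encoder” whose result is cached per image, and a cheap “prompt encoder” and “mask decoder” that run for every click. None of it is SAM’s real network (the decoder simply keeps pixels whose brightness is close to the clicked pixel); the class, method names and tolerance are hypothetical, and the point is only the call pattern.

```python
from typing import Dict, FrozenSet, List, Tuple

Image = List[List[float]]  # toy grayscale image, values in [0, 1]
Point = Tuple[int, int]    # (x, y)


class ToyPromptableSegmenter:
    """Toy illustration of SAM's encoder/decoder split; not SAM's real networks."""

    def __init__(self, tolerance: float = 0.1) -> None:
        self.tolerance = tolerance
        self.encoder_calls = 0
        self._embeddings: Dict[str, Image] = {}  # cache: image id -> embedding

    def _image_encoder(self, image: Image) -> Image:
        # Stand-in for the heavy image encoder: it only copies the pixels,
        # but the call counter shows it runs once per image.
        self.encoder_calls += 1
        return [row[:] for row in image]

    def _prompt_encoder(self, point: Point) -> Point:
        # Stand-in for the lightweight prompt encoder (identity here).
        return point

    def _mask_decoder(self, embedding: Image, point: Point) -> FrozenSet[Point]:
        # Stand-in for the lightweight decoder: keep pixels similar to the clicked one.
        x, y = point
        seed = embedding[y][x]
        return frozenset(
            (cx, cy)
            for cy, row in enumerate(embedding)
            for cx, value in enumerate(row)
            if abs(value - seed) <= self.tolerance
        )

    def segment(self, image_id: str, image: Image, point: Point) -> FrozenSet[Point]:
        if image_id not in self._embeddings:  # expensive step, done once per image
            self._embeddings[image_id] = self._image_encoder(image)
        return self._mask_decoder(self._embeddings[image_id], self._prompt_encoder(point))


img = [
    [0.9, 0.9, 0.1],
    [0.9, 0.85, 0.1],
    [0.3, 0.1, 0.1],
]
seg = ToyPromptableSegmenter()
bright = seg.segment("photo-1", img, (0, 0))  # click on the bright region
dark = seg.segment("photo-1", img, (2, 2))    # second click reuses the cached embedding
print(sorted(bright), seg.encoder_calls)      # [(0, 0), (0, 1), (1, 0), (1, 1)] 1
```

However many clicks arrive for the same image, the encoder runs once; in SAM that encoder is a large vision transformer, so this caching is what keeps each additional prompt fast.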

SAM is a useful tool for creative professionals and enthusiasts who want to edit images and videos with ease and flexibility. But first, you need to learn how to access and use it.

How to use the Segment Anything Model (SAM model)?

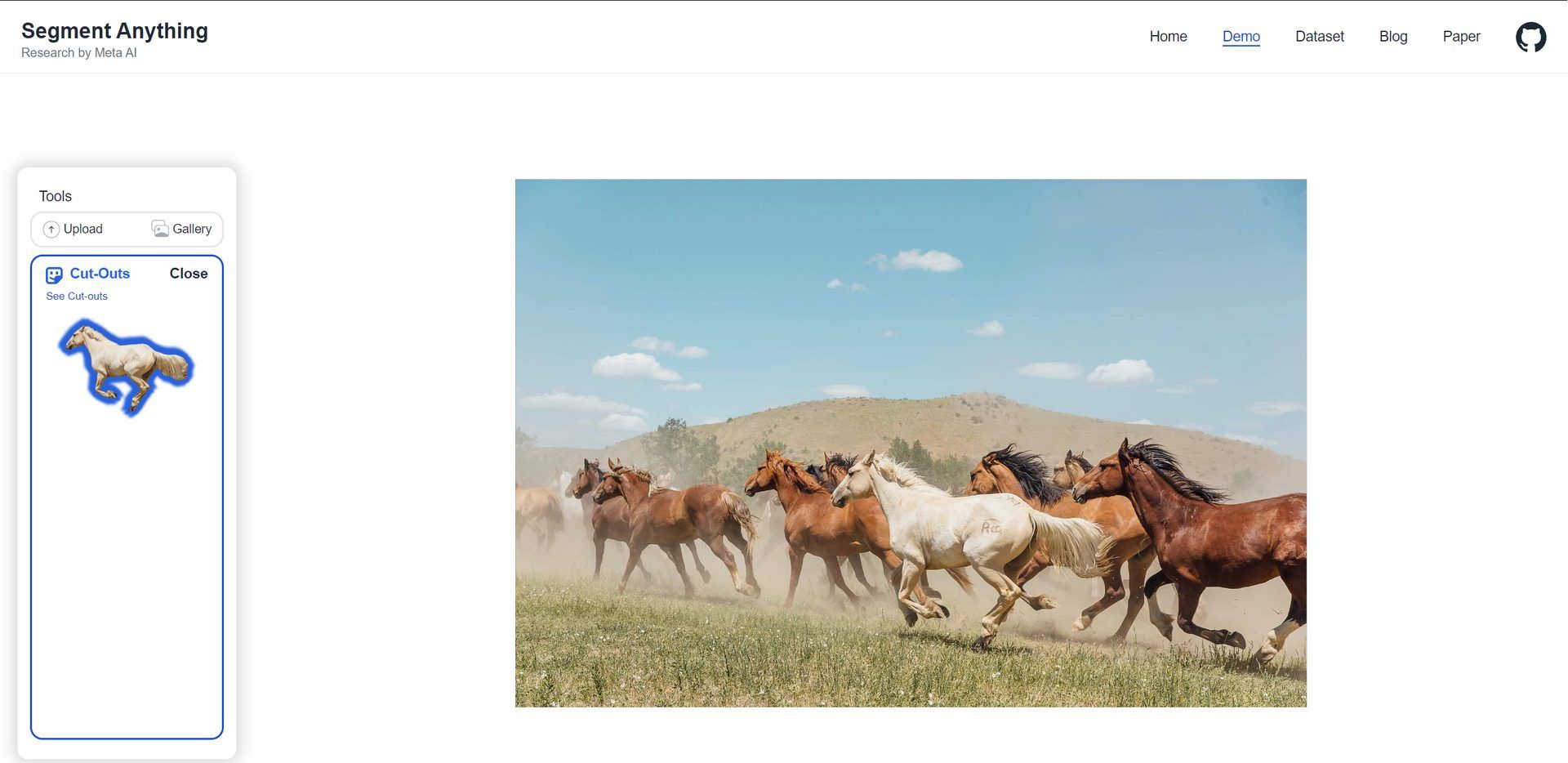

SAM was developed by Meta AI Research (formerly Facebook AI Research) and is publicly available on GitHub. You can also try SAM online through a demo or download its dataset, SA-1B, which contains 1.1 billion masks and 11 million images. The model is quite easy to use; just follow these steps:

- Open the Segment Anything Model demo online, or download the demo to run it yourself.

- Upload an image or choose one in the gallery.

- Add and subtract areas: mask areas by adding points. Select Add Area, then select the object. Refine the mask by selecting Remove Area, then selecting the area to remove.

Then complete your task as you want!


Image courtesy: Meta
