Machine learning models are growing exponentially in size, and training them to accurately process images, text, or video takes more and more energy. The carbon footprint of AI systems is therefore an important consideration for building a sustainable world.

As the AI community grapples with its environmental impact, some conferences now ask that paper submissions include information on CO2 emissions. A new study proposes a more precise way of quantifying those emissions. It also compares the factors that influence them and evaluates two strategies for lowering them.

There are new tools to measure the carbon footprint of AI

A number of software tools already estimate the carbon footprint of AI workloads. A team from Université Paris-Saclay has tested several of them to determine how dependable they are. “And they’re not reliable in all contexts,” says Anne-Laure Ligozat, a co-author of that study who was not involved in the new research.

According to Jesse Dodge, a research scientist at the Allen Institute for AI and the paper’s lead author, the new method differs in two ways. Dodge presented the paper last week at the ACM Conference on Fairness, Accountability, and Transparency (FAccT). First, instead of adding up the energy consumption of server chips over the whole training run, it records that consumption as a series of measurements. Second, it aligns this usage data with a series of data points giving the regional emissions per kilowatt-hour (kWh) of energy consumed, a figure that also changes over time. “Previous work doesn’t capture a lot of the nuance there,” Dodge says.
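The idea of pairing an energy time series with a carbon-intensity time series can be illustrated with a minimal sketch. This is not the researchers' tool: the function name and all numbers below are hypothetical, and it assumes both series already cover the same time intervals.

```python
def emissions_grams(energy_kwh, intensity_g_per_kwh):
    """Sum energy * carbon intensity over matching time intervals."""
    if len(energy_kwh) != len(intensity_g_per_kwh):
        raise ValueError("both series must cover the same intervals")
    return sum(e * c for e, c in zip(energy_kwh, intensity_g_per_kwh))


# Hypothetical energy use (kWh) in three consecutive intervals
energy = [0.5, 0.5, 0.25]
# Hypothetical grid carbon intensity (g CO2 per kWh) for the same intervals
intensity = [200.0, 400.0, 800.0]

# Time-resolved estimate: each interval uses its own intensity
time_resolved = emissions_grams(energy, intensity)

# For comparison: total energy multiplied by one average intensity
flat = sum(energy) * (sum(intensity) / len(intensity))
```

With these made-up numbers the two estimates disagree, because the job happened to draw less energy when the grid was at its dirtiest. That gap is the kind of nuance a single total would miss.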

Although the new tool is more sophisticated than earlier ones, it still tracks only part of the energy needed to train models. In an early experiment, the group found that a server’s GPUs accounted for 74% of its energy use. The researchers concentrated on GPU use because CPUs and RAM accounted for the minority of consumption and could be handling other jobs at the same time. They also did not count the energy required to build the computer hardware, cool the data center, or transport engineers to and from the site, nor the power required to collect data or run trained models. Even so, the tool offers some guidance on how to reduce the carbon footprint of AI during training.

“What I hope is that the vital first step towards a more green future and more equitable future is transparent reporting. Because you can’t improve what you can’t measure,” said Dodge.

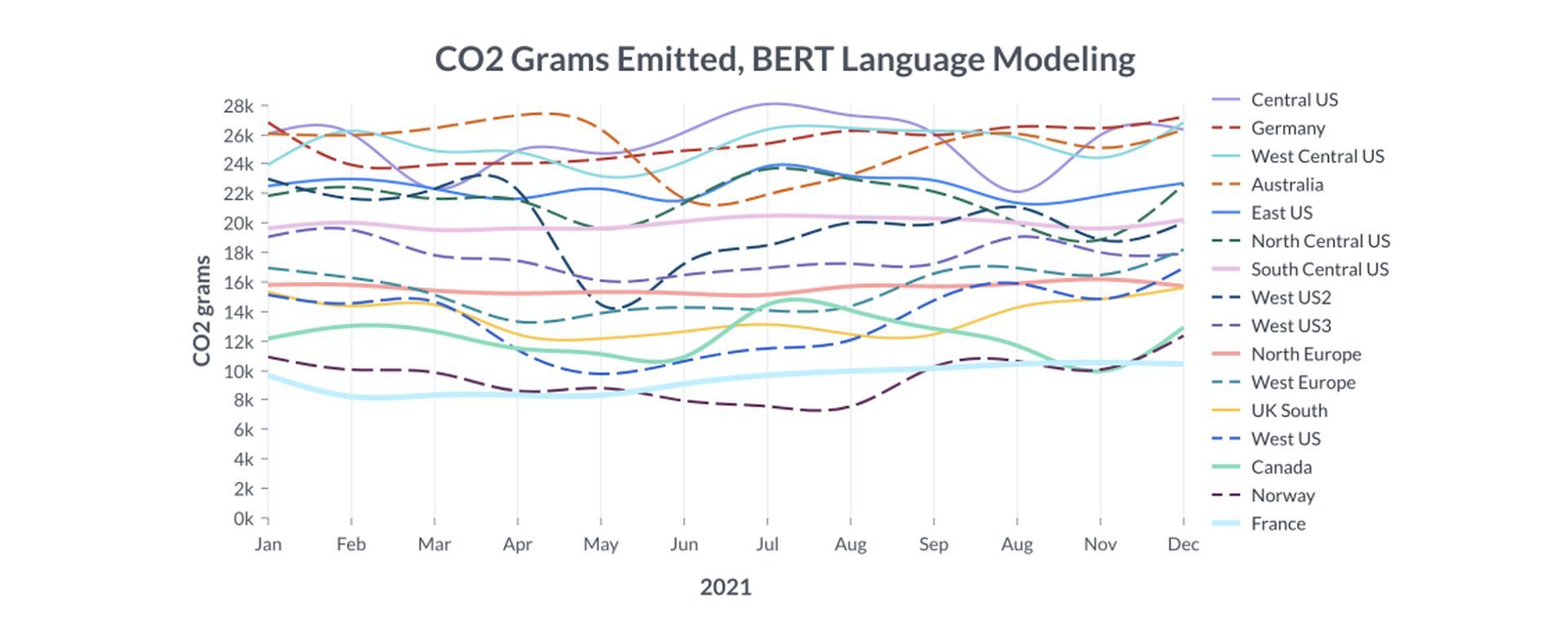

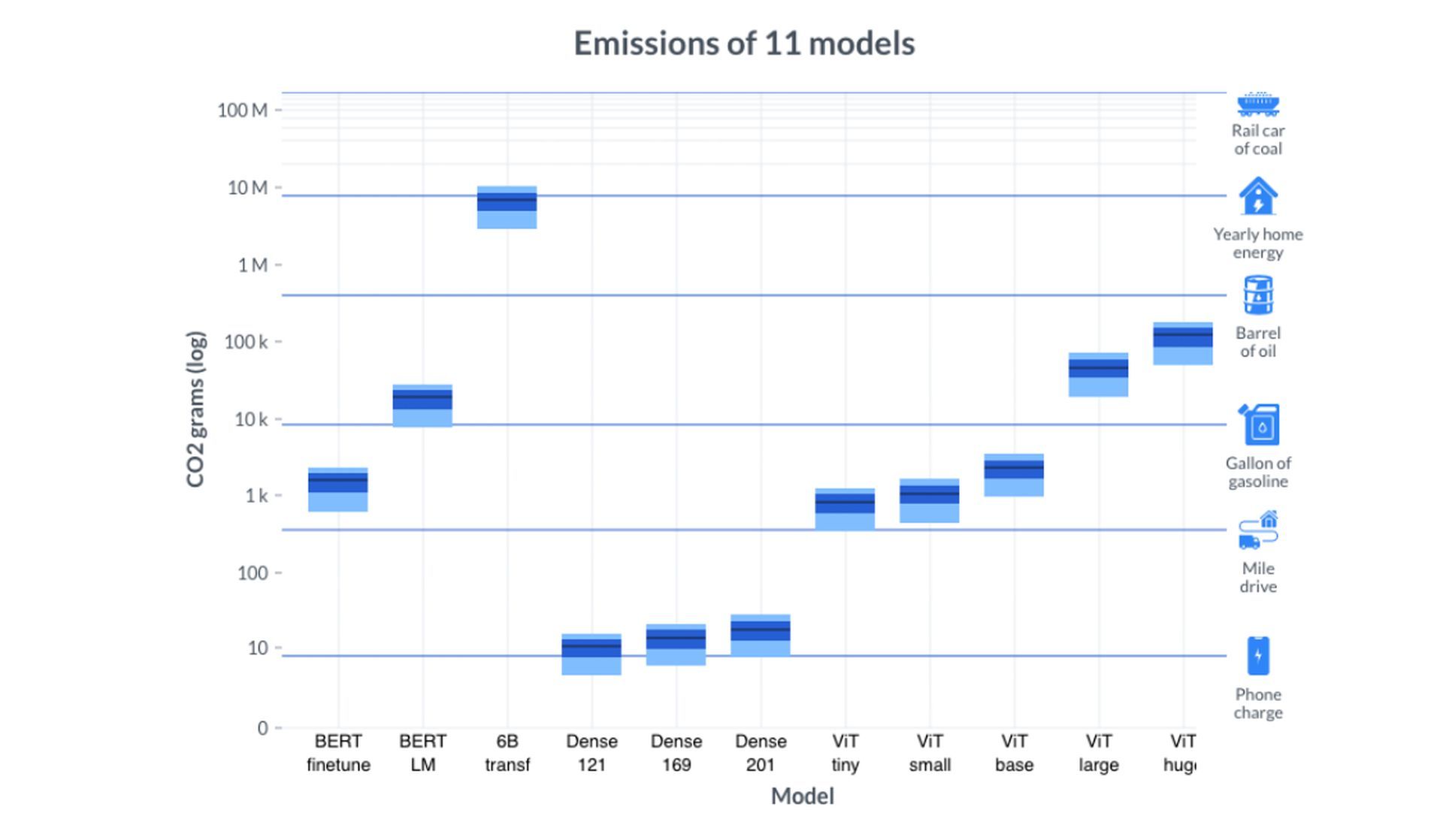

To measure the carbon footprint of AI, the researchers trained eleven machine learning models of various sizes to analyze words or images. Training ranged from one hour on a single GPU to eight days on 256 GPUs. Every few seconds, they recorded the energy used. They also obtained, at five-minute granularity, the carbon emissions per kWh of energy used throughout 2020 for 16 geographic regions. This let them compare the emissions from running different models in different locations at different times.
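Because the energy readings arrive every few seconds while the carbon data comes in five-minute steps, the two have to be brought onto the same time grid before they can be combined. One simple way to do that, sketched here with hypothetical names and sample values (the article does not describe the researchers' actual pipeline), is to sum the energy readings into five-minute bins.

```python
from collections import defaultdict

BIN_SECONDS = 300  # five minutes, matching the carbon-intensity data


def bin_energy(samples):
    """Group (timestamp_seconds, energy_kwh) readings into five-minute bins.

    Returns a dict mapping bin index -> total kWh recorded in that bin.
    """
    bins = defaultdict(float)
    for timestamp, kwh in samples:
        bins[int(timestamp // BIN_SECONDS)] += kwh
    return dict(bins)


# Hypothetical readings: (seconds since the start of training, kWh used)
samples = [(0, 0.25), (150, 0.25), (300, 0.5), (599, 0.5), (600, 0.125)]
energy_per_bin = bin_energy(samples)
```

Each bin's total can then be multiplied by the carbon intensity reported for the same five-minute interval.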

Powering the GPUs to train the smallest models produced about as much carbon as charging a phone. The largest model had six billion parameters, a measure of its size. Although it was trained to only 13 percent completion, its GPUs produced almost as much carbon as powering a US home for a year. Some models in use today, such as OpenAI’s GPT-3, have more than 100 billion parameters.

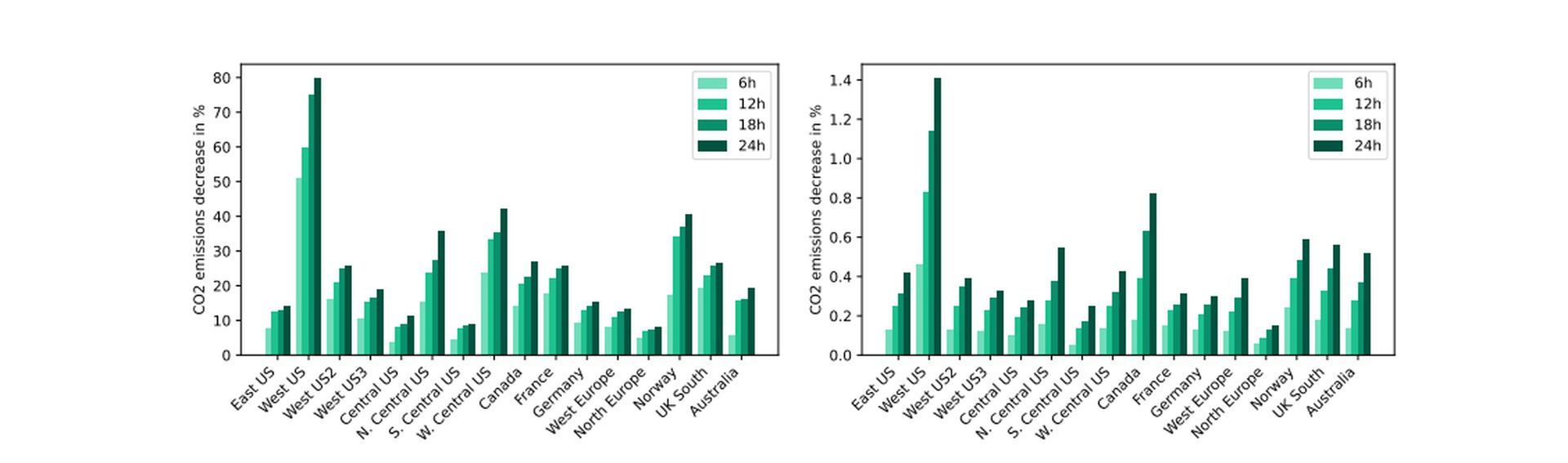

Geographic region was the biggest measurable factor in reducing the carbon footprint of AI, with grams of CO2 per kWh ranging from 200 to 755. Besides changing location, the researchers used their temporally fine-grained data to try two CO2-reduction strategies. The first option delays the start of training by up to 24 hours. For the largest model, which required several days of training, a delay of up to a day typically cut emissions by less than 1%, but for a much smaller model it could save 10 to 80%. The second option, Pause and Resume, pauses training during periods of high emissions, as long as the total training duration does not more than double.

This strategy benefited the small model by only a few percent, but in half the regions it benefited the largest model by 10 to 30%. Emissions per kWh fluctuate over time in part because, without adequate energy storage, grids must sometimes fall back on dirty power sources when intermittent clean sources like wind and solar can’t keep up with demand.
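The two scheduling strategies, a delayed start and Pause and Resume, can be sketched as follows. This is a simplified, hindsight-based illustration and not necessarily the procedure used in the paper: it assumes the job draws the same energy in every interval (so summed intensity is proportional to emissions), that the intensity series is known in advance, and all function names and numbers are hypothetical.

```python
def delayed_start(intensity, job_len, max_delay):
    """Pick the cleanest contiguous window that starts within max_delay.

    Returns (start_index, summed_intensity).
    """
    last_start = min(max_delay, len(intensity) - job_len)
    if job_len <= 0 or last_start < 0:
        raise ValueError("series is too short for this job")
    costs = [sum(intensity[s:s + job_len]) for s in range(last_start + 1)]
    start = min(range(len(costs)), key=costs.__getitem__)
    return start, costs[start]


def pause_and_resume(intensity, job_len):
    """Run only in the job_len cleanest intervals of the first 2 * job_len.

    Limiting the window to 2 * job_len keeps total duration from more
    than doubling. Returns (chosen_indices, summed_intensity).
    """
    window = intensity[:2 * job_len]
    if job_len <= 0 or len(window) < job_len:
        raise ValueError("series is too short for this job")
    cleanest = sorted(range(len(window)), key=window.__getitem__)[:job_len]
    chosen = sorted(cleanest)
    return chosen, sum(window[i] for i in chosen)


# Hypothetical hourly carbon intensity (g CO2 per kWh)
hourly = [300, 700, 250, 720, 400, 650]

baseline = sum(hourly[:3])  # start immediately, run three hours straight
start, delayed_cost = delayed_start(hourly, job_len=3, max_delay=3)
hours, paused_cost = pause_and_resume(hourly, job_len=3)
```

In this made-up series, no later three-hour window beats starting right away, while pausing during the dirtiest hours lowers the total. Real results depend on the region, the job length, and how much the grid's intensity swings.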

These optimization strategies were the part of the paper that most interested Ligozat. However, they were based on historical data. In the future, Dodge says, he’d like to be able to forecast emissions per kWh so that the strategies can be applied in real time. Ligozat offers one more way to cut emissions.

“The first good practice is just to think before running an experiment. Be sure that you really need machine learning for your problem,” she said.

Microsoft researchers worked with the team on the paper, and the GPU energy metric has already been added to Microsoft’s Azure cloud service. With this information, users may choose to train at other times or in other locations, purchase carbon offsets, train a different model, or train no model at all.

Transparent reporting of this kind is an important step toward a more sustainable future, and all professionals should contribute to reducing the carbon footprint of AI.