Aiming for a more sustainable world, MIT researchers have developed a new LEGO-like AI chip. Imagine a world where cellphones, smartwatches, and other wearable technologies don’t have to be put away or discarded for a new model. Instead, they could be upgraded with the newest sensors and processors that would snap into a device’s internal chip, much as LEGO bricks can be added to an existing structure. Such reconfigurable chips could keep devices current while reducing electronic waste, a central goal of green computing.

This reprogrammable LEGO-like AI chip could help reduce the carbon footprint of electronics

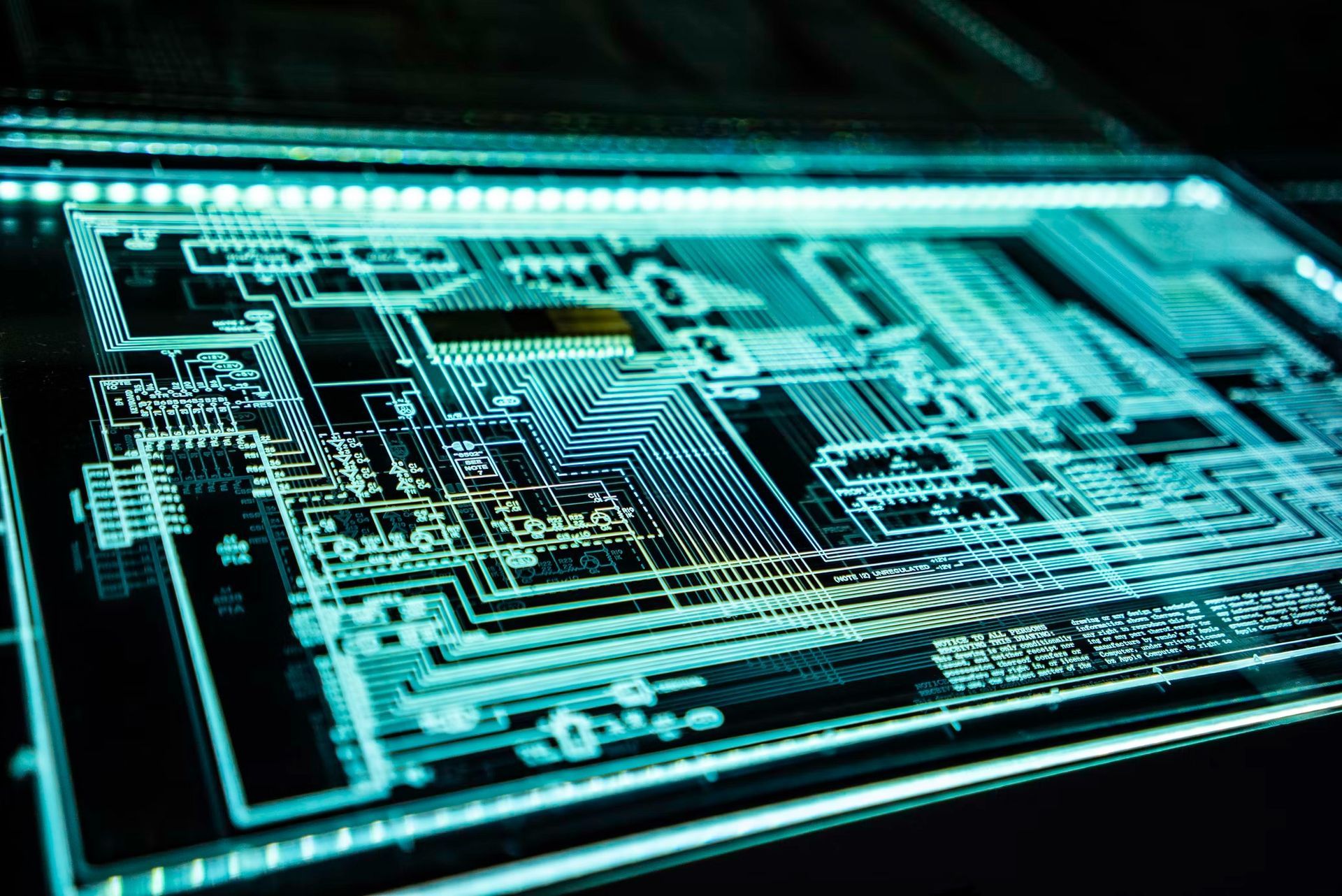

MIT engineers have developed a stackable, reprogrammable LEGO-like AI chip. The design alternates layers of sensing and processing components with light-emitting diodes (LEDs) that allow the chip’s layers to communicate optically. Other modular chip designs use conventional wiring to relay signals between layers. Such intricate connections are difficult, if not impossible, to sever and rewire, so those stackable designs cannot be reconfigured.

Rather than relying on physical wires, the MIT design uses light to transfer data across the AI chip. As a result, the chip’s layers can be swapped out or stacked on, for example to add new sensors or more powerful processors.

“You can add as many computing layers and sensors as possible, such as light, pressure, and even smell. We call this a LEGO-like reconfigurable AI chip because it has unlimited expandability depending on the combination of layers,” said MIT postdoc Jihoon Kang.

The team is eager to apply the strategy to edge computing devices – self-sufficient sensors and other electronics that do not rely on central or distributed resources such as supercomputers or cloud-based computing.

“As we enter the era of the internet of things based on sensor networks, demand for multifunctioning edge-computing devices will expand dramatically. Our proposed hardware architecture will provide high versatility of edge computing in the future,” said Jeehwan Kim, associate professor of mechanical engineering at MIT.

The team’s findings were published in Nature Electronics. In addition to collaborators from Harvard University, Tsinghua University, Zhejiang University, and elsewhere, the MIT authors include co-first authors Chanyeol Choi, Hyunseok Kim, and Min-Kyu Song, as well as contributing authors Hanwool Yeon, Celesta Chang, Jun Min Suh, Jiho Shin, Kuangye Lu, Bo-In Park, Yeongin Kim, Sang-Hoon Bae, Hun S. Kum, Han Eol Lee, Doyoon Lee, Subeen Pang, and Peng Lin.

The team’s design is currently configured to carry out basic image-recognition tasks. It does so through a layering of image sensors, LEDs, and processors made from artificial synapses: arrays of memory resistors, or “memristors,” that the researchers previously developed, which together function as a physical neural network, or “brain-on-a-chip.” Each array can be trained to process and classify signals directly on the chip, without the need for external software or an internet connection.

The researchers paired image sensors with artificial synapse arrays, each of which they trained to recognize a particular letter, in this case M, I, or T. Instead of relaying a sensor’s signals to a processor through conventional wires, the team built an optical system between each sensor and artificial synapse array, which lets information flow between the layers of the AI chip without a physical connection.

“Other chips are physically wired through metal, which makes them hard to rewire and redesign, so you’d need to make a new chip if you wanted to add any new function; we replaced that physical wire connection with an optical communication system, which gives us the freedom to stack and add chips the way we want,” said MIT postdoc Hyunseok Kim.

The system uses paired photodetectors and LEDs, each patterned with tiny pixels. The photodetectors form an image sensor that receives data, and the LEDs transmit that data to the next layer. When a signal (for instance, an image of a letter) reaches the image sensor, the image’s light pattern encodes a particular configuration of LED pixels. That configuration in turn stimulates another layer of photodetectors, along with an artificial synapse array that classifies the signal based on the pattern and strength of the incoming LED light.

The team fabricated a single AI chip with a computing core measuring about 4 square millimeters. The chip is stacked with three image recognition “blocks,” each made up of an image sensor, an optical communication layer, and an artificial synapse array for classifying one of three letters: M, I, or T.

They then shone a pixelated image of random letters onto the AI chip and measured the electrical current that each neural network array produced in response. (The larger the current, the more likely it is that the image is the letter that the particular array has been trained to recognize.)
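The readout rule described above, where the array with the largest current names the letter, can be illustrated with a toy software model. This is only a sketch under stated assumptions, not the researchers’ implementation: the 5x5 letter templates, the normalized weights standing in for trained memristor conductances, and the function names `template_weights`, `array_currents`, and `classify` are all hypothetical.

```python
import math

# Hypothetical 5x5 binary letter templates (1 = lit pixel). These are
# illustrative stand-ins, not the images used in the study.
TEMPLATES = {
    "M": ["10001", "11011", "10101", "10001", "10001"],
    "I": ["00100", "00100", "00100", "00100", "00100"],
    "T": ["11111", "00100", "00100", "00100", "00100"],
}


def to_pixels(rows):
    """Flatten rows of '0'/'1' characters into a list of floats."""
    return [float(ch) for row in rows for ch in row]


def template_weights(rows):
    """Assumed stand-in for training: weights are the template scaled to unit length."""
    pixels = to_pixels(rows)
    norm = math.sqrt(sum(p * p for p in pixels))
    return [p / norm for p in pixels]


# One simulated synapse array per letter.
ARRAYS = {letter: template_weights(rows) for letter, rows in TEMPLATES.items()}


def array_currents(image_rows):
    """Model each array's output current as a weighted sum of pixel intensities."""
    pixels = to_pixels(image_rows)
    return {
        letter: sum(w * p for w, p in zip(weights, pixels))
        for letter, weights in ARRAYS.items()
    }


def classify(image_rows):
    """Return the letter whose array produces the largest current, plus all currents."""
    currents = array_currents(image_rows)
    return max(currents, key=currents.get), currents


if __name__ == "__main__":
    letter, currents = classify(TEMPLATES["I"])
    print(letter, currents)
```

In this toy model, the T array also responds fairly strongly to an image of an I, because the two templates share a vertical stroke, while the M array barely responds at all.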

The team found that the AI chip correctly classified clear images of each letter, but it was less able to distinguish between blurry images, for instance between I and T. However, the researchers were able to quickly swap out the chip’s processing layer for a better “denoising” processor, after which the chip identified the images accurately.

“We showed stackability, replaceability, and the ability to insert a new function into the chip,” said MIT postdoc Min-Kyu Song.

The researchers plan to add more sensing and processing capabilities to the AI chip, and they envision a very wide range of applications. “We can add layers to a cellphone’s camera so it could recognize more complex images, or make these into healthcare monitors that can be embedded in wearable electronic skin,” explained Choi.

Another idea, he added, is modular chips built into electronics, which consumers could upgrade by attaching new sensor and processor layers.

“We can make a general chip platform, and each layer could be sold separately like a video game. We could make different types of neural networks, like for image or voice recognition, and let the customer choose what they want, and add to an existing chip like a LEGO,” said Jeehwan Kim.

The study was made possible, in part, by the Ministry of Trade, Industry, and Energy (MOTIE) in South Korea, the Korea Institute of Science and Technology (KIST), and the Samsung Global Research Outreach Program.