Deepfakes are mixtures of real and fraudulent material forged to appear genuine. In the hands of malicious people, they can cause real harm, so detecting them is crucial, and researchers are continuously looking for new methods. Most recent detection techniques rely on networks trained on pairs of original and synthetic images. Researchers at the University of Tokyo challenge this norm by training algorithms on specially synthesized images instead. These new training data, called self-blended images, can help algorithms designed to spot deepfake pictures and videos detect them more effectively.

The role of AI can’t be ignored when detecting deepfakes

Since the invention of recorded visual media, there have always been people looking to deceive. Deceptions range from the minor, such as faked UFO footage, to the serious, such as political leaders being erased from official photographs. Deepfakes are merely the latest in a long line of manipulation techniques, and their ability to pass as genuine is far outpacing the development of technology that detects them.

Associate Professor Toshihiko Yamasaki and graduate student Kaede Shiohara of the University of Tokyo's Computer Vision and Media Lab research, among other things, flaws in artificial intelligence. They became interested in deepfakes and began investigating methods for detecting fraudulent content. Face recognition and deepfake technologies face heavy criticism around the world; for the latest regulations, see the EU AI Act.

“There are many different methods for detecting deepfakes, and also various sets of training data which can be used to develop new ones. The problem is that existing detection methods tend to perform well within the bounds of a training set but less well across multiple data sets or, more crucially, when pitted against state-of-the-art real-world examples. We felt the way to improve successful detections might be to rethink the way in which training data are used. This led us to develop what we call self-blended images (otherwise known as SBIs),” explained Yamasaki.

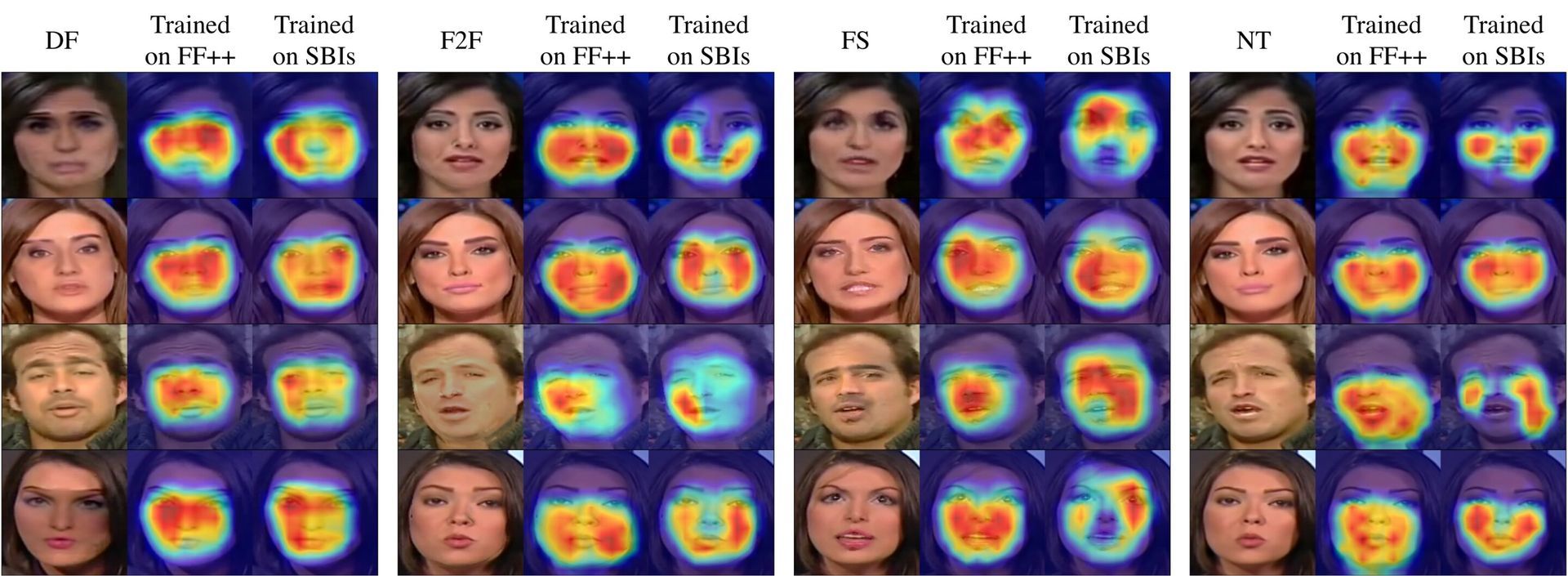

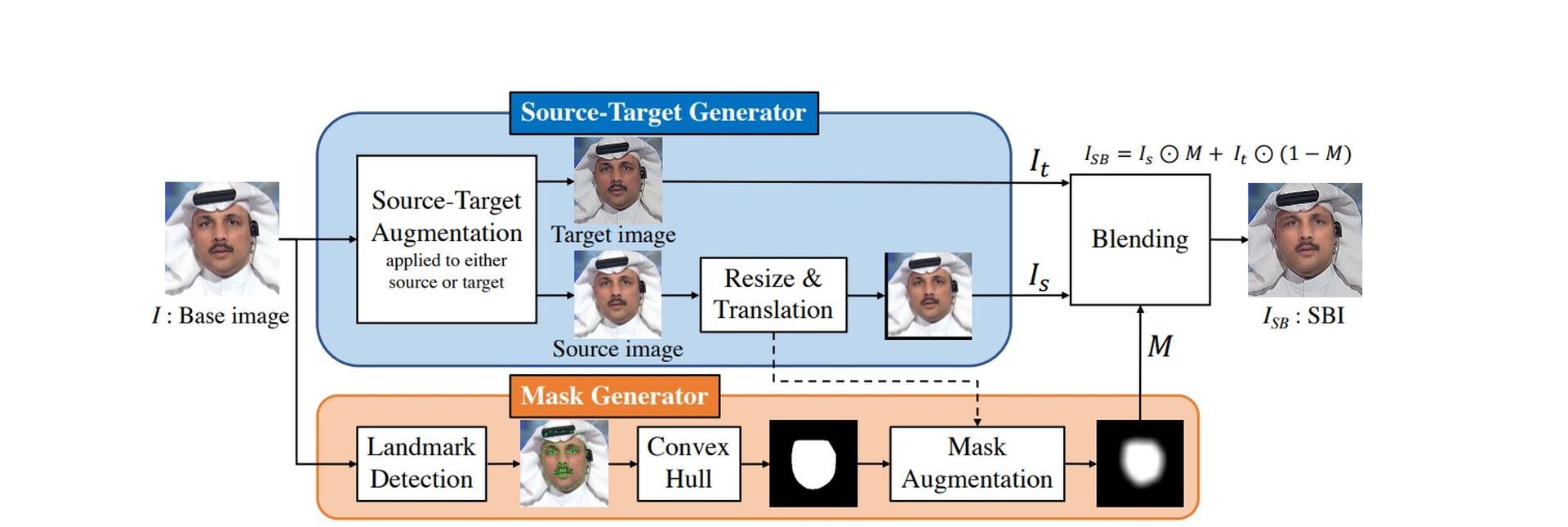

The most common training data for detecting deepfakes are pairs of images: an unaltered source image and a faked counterpart in which someone's face or entire body has been replaced with someone else's. Detectors trained on this sort of data learned to spot some of the visual anomalies caused by manipulation but failed to detect others. To understand the problem better, the researchers tried training sets made of synthesized images instead. By controlling which kinds of artifacts the training images contained, they could better train detection algorithms to find those artifacts.

Detecting deepfakes is crucial, and researchers are continuously looking for new methods

“Essentially, we took clean source images of people from established data sets and introduced different subtle artifacts resulting from, for example, resizing or reshaping the image. Then we blended that image with the original unaltered source. The process of blending these images would also depend on the characteristics of the source image. A mask would be made so that only certain parts of the manipulated image would make it to the composite output. Many SBIs were compiled into our modified data set, which we then used to train detectors,” said Yamasaki.
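To make the process Yamasaki describes more concrete, here is a minimal Python sketch of the idea; it is not the researchers' implementation. It assumes grayscale images stored as nested lists of floats in [0, 1], uses a box-downsample and nearest-neighbour-upsample round trip plus a small brightness shift as the "subtle artifacts", and uses a fixed central ellipse in place of a mask derived from the face in the source image. A random blend ratio stands in for blending that, per Yamasaki, depends on the source image's characteristics. All function names (`resize_artifact`, `shift_brightness`, `elliptical_mask`, `self_blend`, `build_training_set`) are hypothetical.

```python
import random

# Hypothetical sketch of self-blending; not the researchers' code.
Image = list[list[float]]  # grayscale pixels in [0, 1], row-major


def resize_artifact(img: Image, factor: int = 2) -> Image:
    """Downsample by box-averaging, then upsample with nearest neighbour.
    The round trip leaves blocky, slightly blurred resampling artifacts."""
    h, w = len(img), len(img[0])
    small = [
        [
            sum(img[y][x]
                for y in range(r, min(r + factor, h))
                for x in range(c, min(c + factor, w)))
            / (min(factor, h - r) * min(factor, w - c))
            for c in range(0, w, factor)
        ]
        for r in range(0, h, factor)
    ]
    return [[small[y // factor][x // factor] for x in range(w)] for y in range(h)]


def shift_brightness(img: Image, delta: float) -> Image:
    """Simple intensity perturbation, clipped back into [0, 1]."""
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]


def elliptical_mask(h: int, w: int, cy: float, cx: float,
                    ry: float, rx: float) -> Image:
    """1.0 inside the ellipse, 0.0 outside (assumed stand-in for a face mask)."""
    return [
        [1.0 if ((y - cy) / ry) ** 2 + ((x - cx) / rx) ** 2 <= 1.0 else 0.0
         for x in range(w)]
        for y in range(h)
    ]


def self_blend(img: Image, rng: random.Random) -> tuple[Image, Image]:
    """Blend a subtly altered copy of img back into img itself."""
    h, w = len(img), len(img[0])
    # 1. Introduce subtle artifacts into a copy of the same source image.
    altered = shift_brightness(resize_artifact(img, factor=2),
                               rng.uniform(-0.1, 0.1))
    # 2. Mask choosing which region of the altered copy reaches the output.
    mask = elliptical_mask(h, w, (h - 1) / 2, (w - 1) / 2, h / 3, w / 3)
    # 3. Composite: altered pixels inside the mask (scaled by a random blend
    #    ratio), untouched original pixels everywhere else.
    alpha = rng.uniform(0.5, 1.0)
    blended = [
        [alpha * mask[y][x] * altered[y][x] + (1 - alpha * mask[y][x]) * img[y][x]
         for x in range(w)]
        for y in range(h)
    ]
    return blended, mask


def build_training_set(reals: list[Image], seed: int = 0) -> list[tuple[Image, int]]:
    """Pair every real image (label 0) with its self-blended version (label 1)."""
    rng = random.Random(seed)
    data: list[tuple[Image, int]] = []
    for img in reals:
        data.append((img, 0))
        data.append((self_blend(img, rng)[0], 1))
    return data
```

In this sketch, each clean image yields one example labelled real and one self-blended example labelled fake, so the detector learns from blending artifacts without ever needing a face swapped in from another person. A practical version would work on colour face crops and randomise the mask shape and the transforms for every sample.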

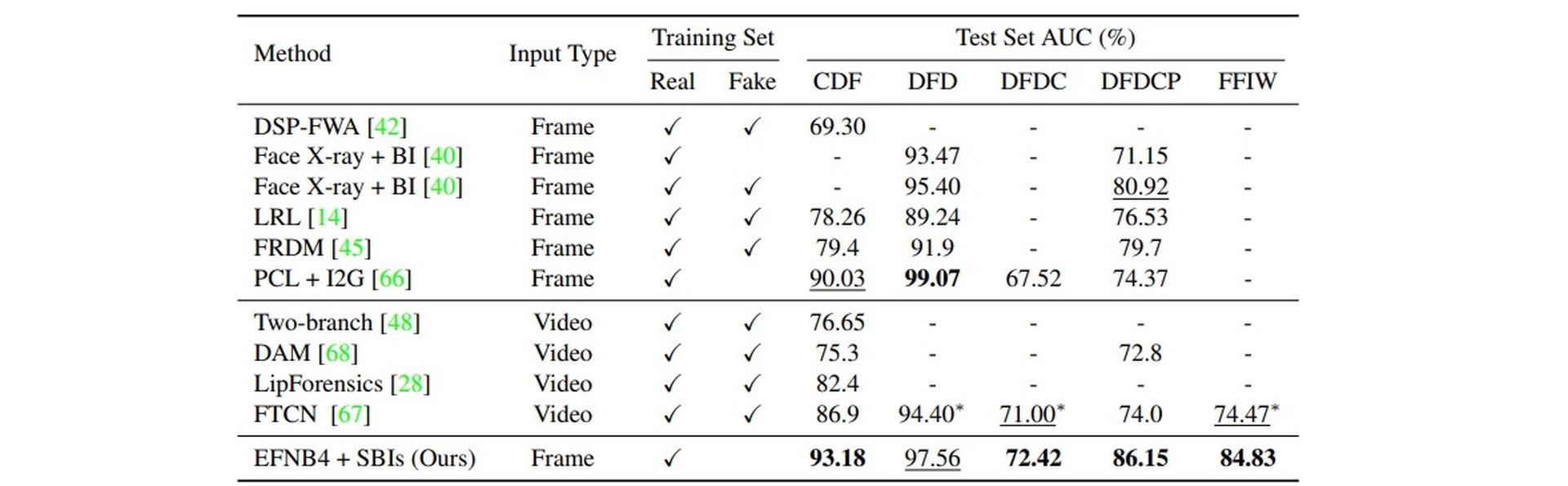

The researchers found that training on the modified data set improved deepfake detection by around 5–12%, depending on the original data set it was compared against. These may not seem like large gains, but they could make the difference between a malicious actor succeeding or failing to influence their intended audience.

“Naturally, we wish to improve upon this idea. It works best on still images, but videos can have temporal artifacts we cannot yet detect. Also, deepfakes are usually only partially synthesized, so we might explore ways to detect entirely synthetic images, too. However, I envisage that in the near future this kind of research might work its way onto social media platforms and other service providers so that they can better flag potentially manipulated images with some kind of warning,” said Yamasaki.