A new research collaboration between China and the US offers a way of detecting malicious e-commerce reviews, which are designed to undermine competitors or facilitate blackmail, by leveraging the signature behavior of the reviewers who write them.

Machine learning algorithm has managed to detect PMUs

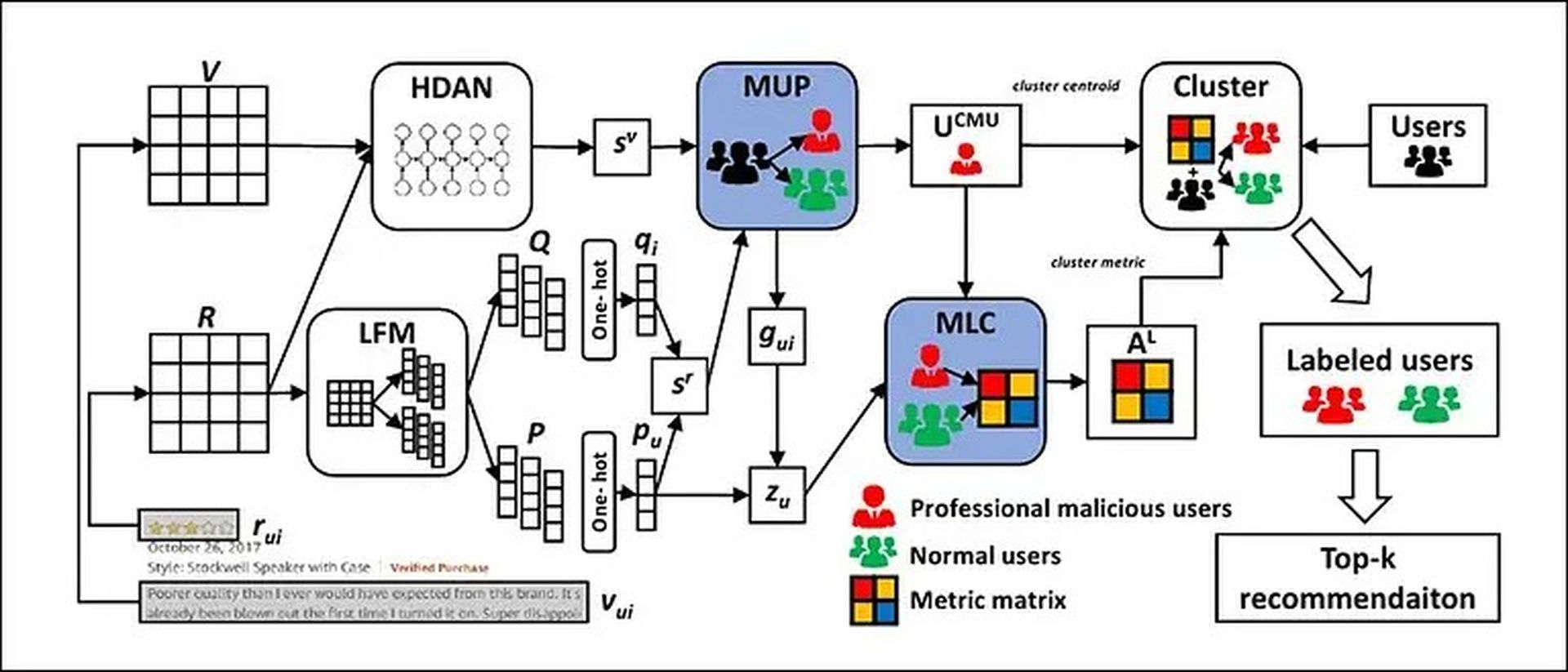

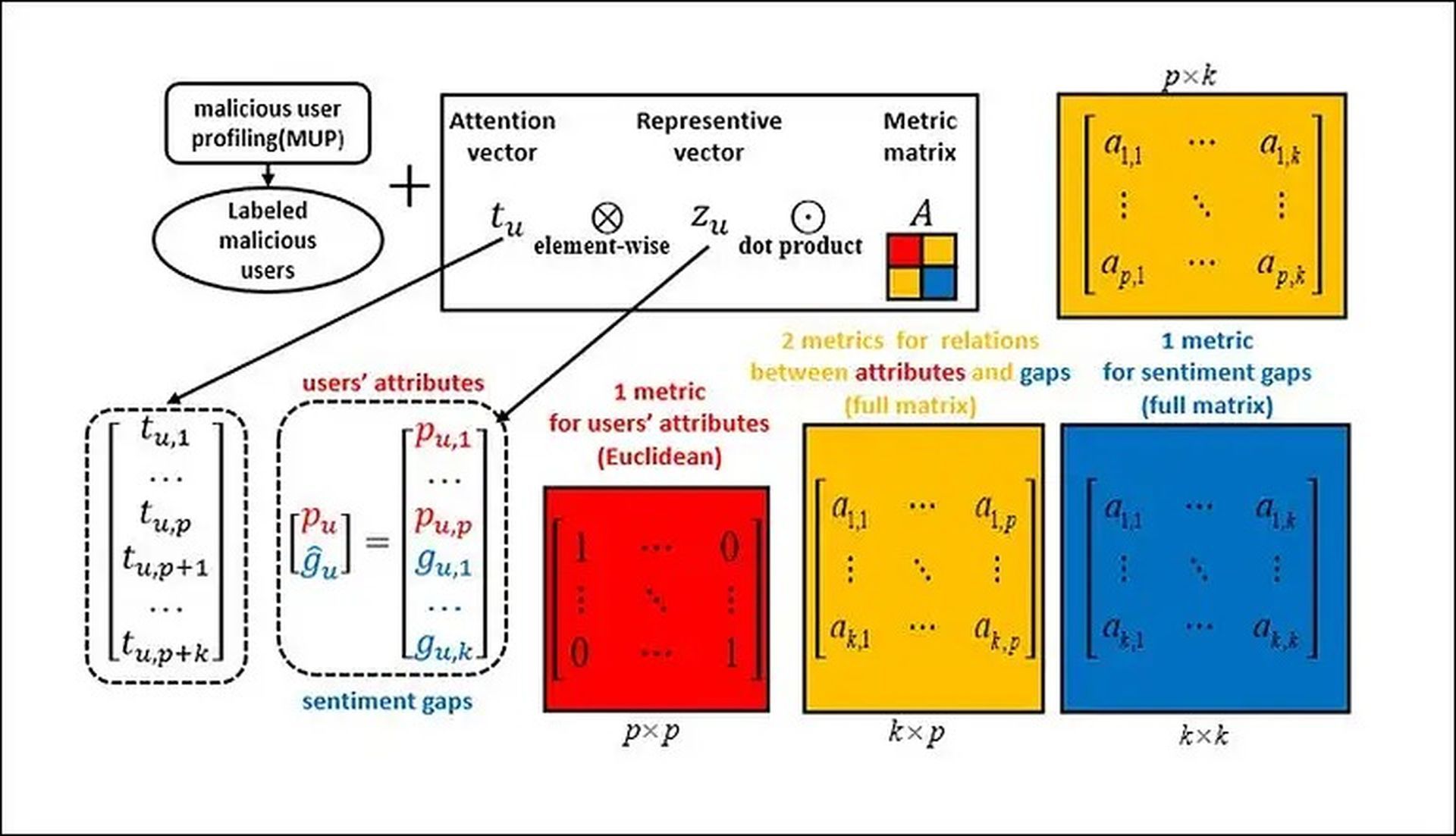

The paper describes how a system called the malicious user detection model (MMD) analyzes the output of such users to identify and label them as Professional Malicious Users (PMUs). Using Metric Learning, a technique also used in computer vision and recommendation systems, together with a Recurrent Neural Network (RNN), the system identifies and categorizes the output of these reviewers.

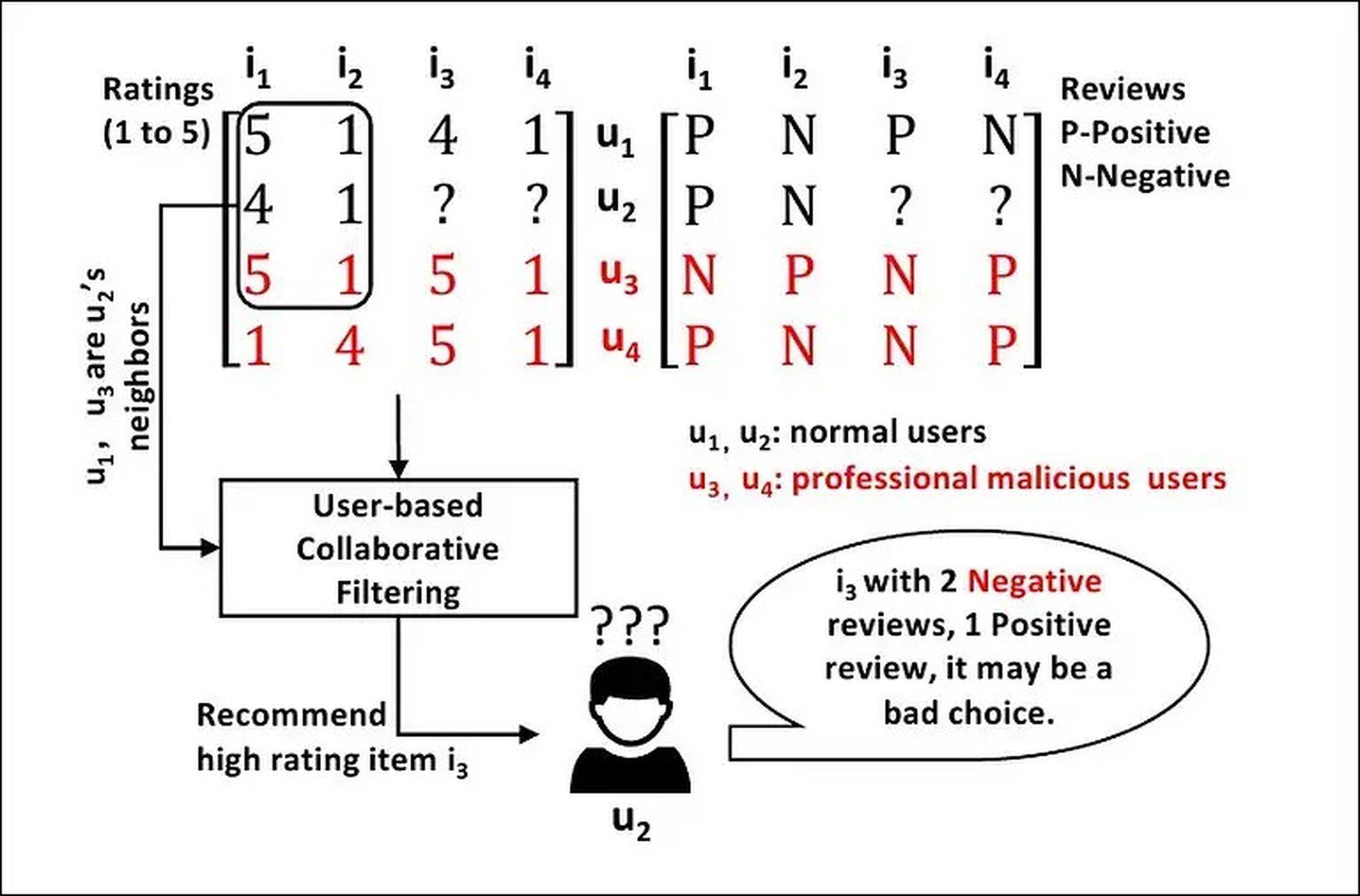

User experience is usually expressed through a star rating (or a score out of ten) and a text comment, and in a typical review the two agree. PMUs, on the other hand, frequently break this pattern by pairing a negative text review with a high rating, or a poor rating with a positive text review.

This is far more pernicious than an openly hostile review, because it allows the review to inflict reputational harm without setting off e-commerce sites’ rather simple filters for identifying and addressing maliciously negative comments. If an NLP filter detects invective in the text, the high star (or decimal) rating assigned by the PMU effectively cancels out the negative content, making the review seem ‘neutral,’ statistically speaking.
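The cancelling-out effect can be illustrated with a toy filter. This is a minimal sketch, assuming a hypothetical filter that simply averages a text sentiment score with the rescaled star rating; the function names, score ranges, and threshold are invented for illustration and do not describe any real site's filter.

```python
def naive_review_score(text_sentiment: float, stars: int, max_stars: int = 5) -> float:
    """Average a text sentiment in [-1, 1] with a star rating rescaled to [-1, 1]."""
    rating_score = 2 * (stars - 1) / (max_stars - 1) - 1
    return (text_sentiment + rating_score) / 2


def is_flagged(text_sentiment: float, stars: int, threshold: float = -0.5) -> bool:
    """Flag a review only when the combined score is strongly negative (assumed rule)."""
    return naive_review_score(text_sentiment, stars) < threshold


# Same harsh text, different star ratings.
openly_negative = is_flagged(-0.9, stars=1)  # combined score -0.95, flagged
pmu_style = is_flagged(-0.9, stars=5)        # combined score about 0.05, passes
```

In this sketch the five-star rating pulls the combined score back to roughly zero, so the harsh text goes unflagged.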

The new study states that PMUs are often used to demand money from online retailers in exchange for amending negative comments and promising not to post any more bad reviews. Some PMUs are individuals seeking discounts, although most of the time the PMU is being unethically employed by the victim’s competitors.

The latest generation of automated detectors for such reviews employs Content-Based Filtering or Collaborative Filtering, looking for unequivocal ‘outliers’: reviews that are strongly negative in both feedback modes (rating and text) and that differ significantly from the overall trend of review sentiment and rating.

A high posting frequency is a typical sign that such filters look for. A PMU, in contrast, will post strategically but seldom, since each review may be an individual commission or part of a longer-term plan to obscure the ‘frequency’ statistic.

Because of this, the paper’s researchers have incorporated the unusual polarity of professional malicious reviews into a dedicated algorithm, giving it nearly the same ability as a human reviewer to detect fraudulent reviews.

Previous studies

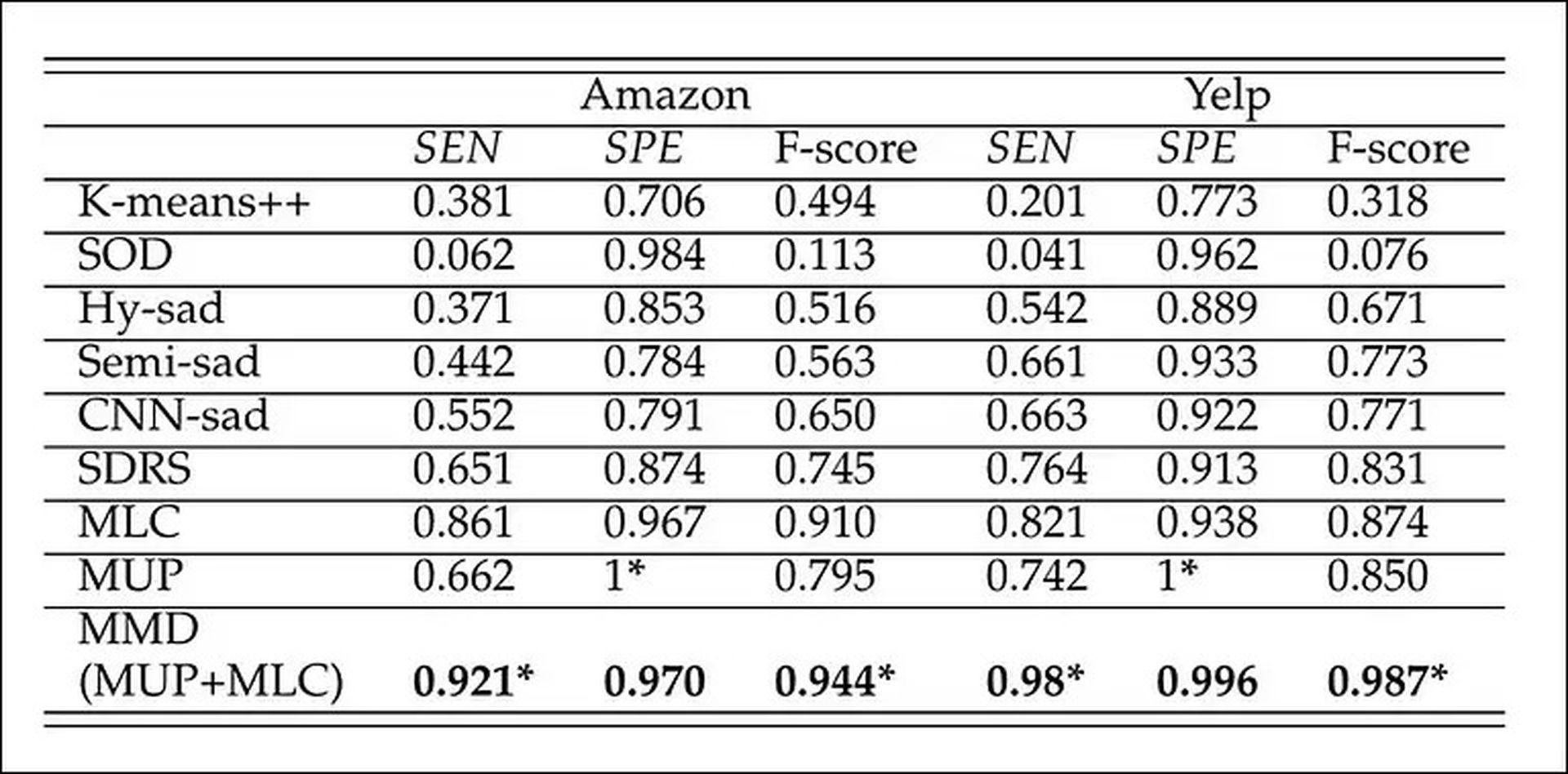

According to the authors, there is no comparable prior work against which to evaluate MMD, because it is the first system to try to detect PMUs based on their contradictory posting style. The researchers therefore compared their method against various component algorithms previously used by conventional automatic filters: Hysad; Semi-sad; Statistic Outlier Detection (SOD); K-means++ Clustering; CNN-sad; and Slanderous user Detection Recommender System (SDRS).

“[On] all four datasets, our proposed model MMD (MLC+MUP) outperforms all the baselines in terms of F-score. Note that MMD is a combination of MLC and MUP, which ensures its superiority over supervised and unsupervised models,” the researchers said.
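For readers unfamiliar with the metric, the F-score (here the balanced F1 variant) is the harmonic mean of precision and recall. The sketch below uses made-up counts purely to show the arithmetic; they are not results from the paper.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when undefined."""
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Hypothetical detector: 8 PMUs caught, 2 ordinary users wrongly flagged, 2 PMUs missed.
example_f1 = f1_score(true_pos=8, false_pos=2, false_neg=2)
```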

The paper further states that MMD could be used as a pre-processing step for standard automatic filtering systems, and it presents experimental results for several recommendation models: User-based Collaborative Filtering (UBCF), Item-based Collaborative Filtering (IBCF), Matrix Factorization (MF-eALS), Bayesian Personalized Ranking (MF-BPR), and Neural Collaborative Filtering (NCF).

According to the authors, these augmentations produced improved results in terms of Hit Ratio (HR) and Normalized Discounted Cumulative Gain (NDCG):

“Among all four datasets, MMD improves the recommendation models in terms of HR and NDCG. Specifically, MMD can enhance the performance of HR by 28.7% on average and NDCG by 17.3% on average. By deleting professional malicious users, MMD can improve the quality of datasets. Without these professional malicious users’ fake [feedback], the dataset becomes more [intuitive].”
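As a rough guide to what these two metrics measure, here is a minimal sketch that assumes a leave-one-out setup with a single held-out target item per user. That evaluation protocol is an assumption, since it is not spelled out here, and the item names are invented.

```python
import math


def hit_ratio_at_k(ranked_items, target, k):
    """1.0 if the held-out target appears in the top-k recommendations, else 0.0."""
    return 1.0 if target in ranked_items[:k] else 0.0


def ndcg_at_k(ranked_items, target, k):
    """With one relevant item the ideal DCG is 1, so NDCG reduces to 1 / log2(rank + 1)."""
    for position, item in enumerate(ranked_items[:k]):
        if item == target:
            return 1.0 / math.log2(position + 2)  # position is 0-based
    return 0.0


ranking = ["item_a", "item_b", "item_c", "item_d"]  # hypothetical model output
hr = hit_ratio_at_k(ranking, "item_c", k=3)
ndcg = ndcg_at_k(ranking, "item_c", k=3)
```

HR only records whether the target made the top-k list, while NDCG also rewards placing it nearer the top. Both are averaged over all users in practice.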

The paper is called Professional Malicious User Detection in Metric Learning Recommendation Systems and was published by researchers at Jilin University’s Department of Computer Science and Technology; the Key Lab of Intelligent Information Processing of the Chinese Academy of Sciences in Beijing; and Rutgers University’s School of Business.

Method

PMUs are hard to detect because two non-equivalent parameters (a numerical star or decimal rating and a text-based review) must be considered together. According to the new paper’s authors, no similar research has been done before.

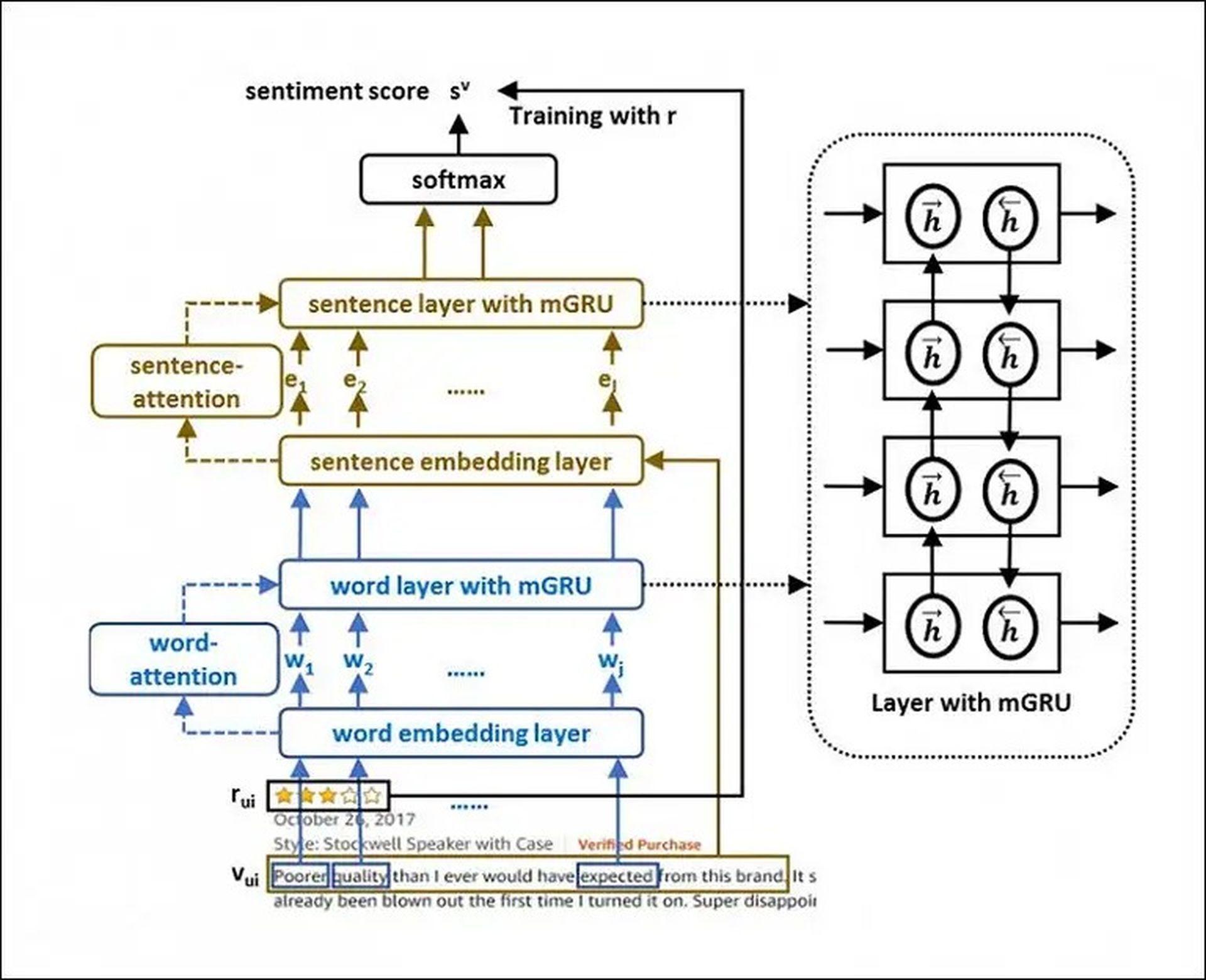

The review text is divided into content chunks using a Hierarchical Dual-Attention recurrent Neural network (HDAN). HDAN uses attention to assign a weight to each word and each sentence. In the image above, the authors indicate that the word “poorer” should be given greater weight than the other words in the review.
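The word-level weighting can be sketched as a softmax over relevance scores followed by a weighted sum. This is only an illustration of the general attention idea, not the HDAN architecture: in a real network the scores and word vectors are learned, whereas the values below are made up.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(vectors, scores):
    """Weight each vector by its attention weight and sum them."""
    weights = softmax(scores)
    dim = len(vectors[0])
    pooled = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
    return weights, pooled


words = ["quality", "is", "poorer", "now"]
word_vectors = [[0.2, 0.1], [0.0, 0.0], [-0.9, 0.4], [0.1, 0.0]]  # toy embeddings
relevance = [1.0, 0.0, 3.0, 0.2]                                  # toy attention scores
weights, sentence_vector = attention_pool(word_vectors, relevance)
```

With these toy scores, "poorer" receives the largest weight and dominates the sentence vector. A hierarchical model would repeat the same step over sentence vectors to build a review-level representation.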

The MMD algorithm uses Metric Learning to estimate an accurate distance between items, which it uses to characterize the full set of relationships in the data.

MMD applies a Latent Factor Model (LFM) to the user and item to obtain a base rating score. HDAN, in turn, turns the review text into a sentiment score that serves as supplementary information.
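A latent factor model typically predicts a rating from the dot product of a user vector and an item vector, often with bias terms added. The sketch below uses that common textbook form. Whether the paper uses exactly this formulation is an assumption, and all of the numbers are invented.

```python
def lfm_base_rating(user_factors, item_factors,
                    global_mean=0.0, user_bias=0.0, item_bias=0.0):
    """Biased latent factor prediction: mean + biases + user/item dot product."""
    interaction = sum(p * q for p, q in zip(user_factors, item_factors))
    return global_mean + user_bias + item_bias + interaction


# Hypothetical learned parameters for one user and one item.
base_rating = lfm_base_rating(
    user_factors=[0.5, -0.2],
    item_factors=[1.0, 0.5],
    global_mean=3.5,
    user_bias=0.1,
    item_bias=-0.2,
)
```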

The MUP model generates the sentiment gap vector, which is the difference between the rating and the predicted sentiment score of the review’s text content. The authors say this is the first time PMUs have been detectable in this way.
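A minimal sketch of the sentiment gap idea follows, assuming the rating and the predicted sentiment have already been placed on the same 1 to 5 scale. The helper names and the example values are hypothetical.

```python
def sentiment_gap_vector(ratings, predicted_sentiments):
    """Per-review difference between the given rating and the sentiment predicted from text."""
    if len(ratings) != len(predicted_sentiments):
        raise ValueError("each rating needs a matching sentiment score")
    return [r - s for r, s in zip(ratings, predicted_sentiments)]


def mean_abs_gap(gaps):
    """Average size of the gap, ignoring its direction."""
    return sum(abs(g) for g in gaps) / len(gaps)


# Made-up users: ratings vs. sentiment predicted from their review text.
ordinary_gaps = sentiment_gap_vector([5, 2, 4], [4.6, 2.3, 4.1])
suspect_gaps = sentiment_gap_vector([5, 5, 1], [1.2, 1.5, 4.8])
```

An ordinary user's gaps stay close to zero, whereas a user who routinely pairs glowing ratings with hostile text (or the reverse) produces large gaps in either direction.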

The output labels are used in Metric Learning for Clustering (MLC) to establish a metric against which the probability of a user review being malicious is calculated.
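One simple way to picture a learned metric feeding a probability is a weighted distance to two cluster centers. In this sketch, the per-feature weights stand in for whatever the metric learning step produces, and the softmax over negative distances is an illustrative choice rather than the paper's formula. All of the values are invented.

```python
import math


def weighted_distance(x, y, weights):
    """Euclidean distance with a per-feature weight (a diagonal 'learned' metric)."""
    return math.sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(x, y, weights)))


def malicious_probability(user_vec, normal_centroid, malicious_centroid, weights):
    """Softmax over negative distances: the closer centroid gets the higher probability."""
    d_normal = weighted_distance(user_vec, normal_centroid, weights)
    d_malicious = weighted_distance(user_vec, malicious_centroid, weights)
    e_normal, e_malicious = math.exp(-d_normal), math.exp(-d_malicious)
    return e_malicious / (e_normal + e_malicious)


metric_weights = [1.0, 0.5]          # hypothetical learned feature weights
normal_center = [0.2, 0.1]           # hypothetical cluster of ordinary users
malicious_center = [3.5, 3.0]        # hypothetical cluster of PMUs
p_suspect = malicious_probability([3.4, 2.8], normal_center, malicious_center, metric_weights)
p_ordinary = malicious_probability([0.3, 0.2], normal_center, malicious_center, metric_weights)
```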

The researchers also ran a user study to compare the system with human judges at identifying malicious reviews based only on their text and star rating. The participants were asked to label each review 0 (for an ordinary user) or 1 (for a professional malicious user).

On average, the participants identified 24 true positives and 24 true negatives out of a 50/50 mix of ordinary and malicious reviews. By comparison, MMD labeled 23 true positive users and 24 true negative users on average, operating at almost human levels and surpassing the baselines for the task.

“In essence, MMD is a generic solution that can detect the professional malicious users that are explored in this paper and serve as a general foundation for malicious user detections. With more data, such as image, video, or sound, the idea of MMD can be instructive to detect the sentiment gap between their title and content, which has a bright future to counter different masking strategies in different applications,” the authors explained.