Data ingestion is not a new concept, but it remains a challenge for many enterprises trying to move data in and out of their various dependent systems. The challenge is even greater when moving data in and out of Hadoop. Hadoop ingestion demands processing power and has specific requirements that no single solution on the market has met so far.

Given the variety of data sources that need to pump data into Hadoop, customers often end up creating one-off data ingestion jobs. These one-off jobs copy files using FTP and NFS mounts, or rely on standalone tools such as DistCp. Because these jobs stitch together multiple tools, they run into problems with manageability, failure recovery and the ability to scale to handle data skew.

So how do enterprises design an ingestion platform that not only addresses the scale needed today but also scales out to address the needs of tomorrow? Our solution is DataTorrent dtIngest, the industry’s first unified stream and batch data ingestion application for Hadoop.

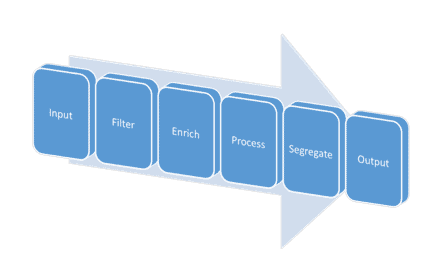

At DataTorrent, we work with some of the world’s largest enterprises, including leaders in IoT and ad tech. These enterprises must ingest massive amounts of data with minimal latency from a variety of sources, and dtIngest enables them to establish a common pattern for ingestion across various domains. Take a look at the accompanying diagram, for example.

Each of the blocks signifies a specific stage in the ingestion process:

- Input – Discover and fetch the data for ingestion. Data may be discovered in file systems, messaging queues, web services, sensors, databases or even the outputs of other ingestion apps.

- Filter – Analyze the raw data and identify the interesting subset. The filter stage is typically used for quality control, or simply to sample or parse the dataset.

- Enrich – Fill in the missing pieces of the data. This stage often involves consulting external data sources to supply missing attributes. Sometimes it also means transforming the data from its original form into one more suitable for downstream processes.

- Process – This stage does lightweight processing to either further enrich the event or transform it from one form into another. While similar to the enrich stage, which relies on external systems, the process stage usually computes using the existing attributes of the data.

- Segregate – Often, before the data is handed to downstream systems, it makes sense to bundle similar data sets together. While this stage may not always be necessary for compaction, segregation does make sense most of the time.

- Output – With Project Apex, outputs are almost always mirrors of inputs in terms of what they can do and are as essential as inputs. While the input stage requires fetching the data, the output stage requires resting the data – either on durable storage systems or other processing systems.

These stages can be arranged in many different ways, and their order, and even the number of instances of each, depends on the specific ingestion application.
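To make the stages concrete, here is a minimal sketch of the six-stage pattern as a chain of Python generators. It is illustrative only and is not how dtIngest or Project Apex is implemented; the record format, the `DEVICE_REGIONS` lookup table, the alert threshold and every function name are assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical lookup table standing in for an external data source.
DEVICE_REGIONS = {"sensor-1": "us-west", "sensor-2": "eu-central"}


def read_input(lines):
    """Input: fetch raw records (here, 'device,reading' text lines)."""
    for line in lines:
        device, _, reading = line.partition(",")
        yield {"device": device.strip(), "reading": reading.strip()}


def filter_valid(records):
    """Filter: keep only records whose reading parses as a number."""
    for record in records:
        try:
            record["reading"] = float(record["reading"])
        except ValueError:
            continue  # drop malformed records (quality control)
        yield record


def enrich(records):
    """Enrich: fill in a missing attribute from the external lookup."""
    for record in records:
        record["region"] = DEVICE_REGIONS.get(record["device"], "unknown")
        yield record


def process(records, threshold=100.0):
    """Process: derive a new attribute from existing attributes only."""
    for record in records:
        record["alert"] = record["reading"] > threshold
        yield record


def segregate(records):
    """Segregate: bundle similar records together (here, by region)."""
    bundles = defaultdict(list)
    for record in records:
        bundles[record["region"]].append(record)
    return dict(bundles)


def write_output(bundles, sink):
    """Output: rest each bundle in a sink (here, an in-memory dict)."""
    for region, records in bundles.items():
        sink.setdefault(region, []).extend(records)
    return sink


def run_ingestion(lines):
    """Wire the stages together in one possible order."""
    records = process(enrich(filter_valid(read_input(lines))))
    return write_output(segregate(records), sink={})
```

Because each stage only consumes and produces records, stages can be reordered, repeated or dropped to suit a particular application, for example by calling `run_ingestion(["sensor-1, 20.5", "sensor-2, 150"])` or by composing the functions differently.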

The DataTorrent dtIngest app, for example, simplifies the collection, aggregation and movement of large amounts of data to and from Hadoop for a more efficient data processing pipeline. The app was built for enterprise data stewards and aims to make configuring and running Hadoop data ingestion and data distribution pipelines a point-and-click process.

Some sample use cases of dtIngest include:

- Bulk or incremental data loading of large and small files into Hadoop

- Distributing cleansed/normalized data from Hadoop

- Ingesting change data from Kafka/JMS into Hadoop

- Selectively replicating data from one Hadoop cluster to another

- Ingesting streaming event data into Hadoop

- Replaying log data stored in HDFS as Kafka/JMS streams

Future additions to dtIngest will include new sources and integration with data governance.

(image credit: Kevin Steinhardt)